Copyright pressure mounts as OpenAI battles over newspapers and pirate libraries

Key Points

- Nine regional newspapers in the US have sued OpenAI and Microsoft, alleging that their content was used without permission to train AI models.

- The newspapers are seeking billions in damages and, in some cases, want the models and training data destroyed, arguing that the models can reproduce their articles nearly word for word.

- In a separate proceeding, OpenAI must disclose internal communications about the deletion of pirated books. In a similar case, Anthropic paid $1.5 billion to settle.

Nine US regional newspapers have slapped OpenAI and Microsoft with a massive copyright lawsuit, seeking damages that could top $10 billion. At the same time, a federal court has ordered OpenAI to hand over internal communications about book datasets it allegedly sourced from a pirate library.

The core allegation: OpenAI's models and Microsoft services like Copilot were trained on unlicensed news articles and can regurgitate them almost word-for-word. The complaint was filed in New York by a group of publications including the Boston Herald, Hartford Courant, San Diego Union-Tribune, and Los Angeles Daily News.

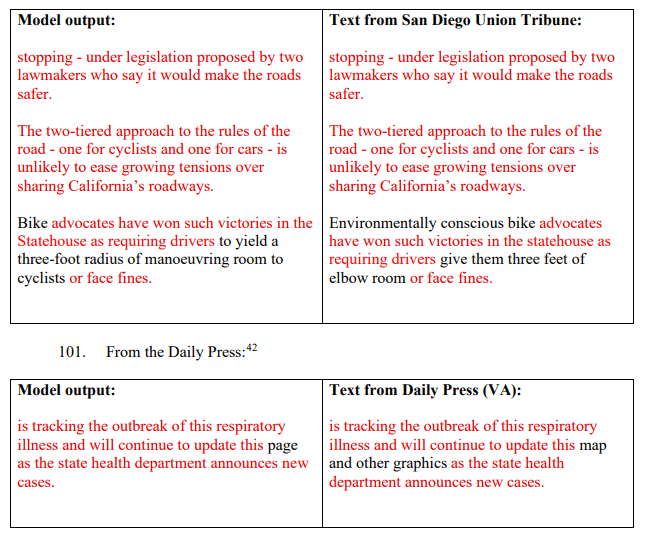

The case relies on specific receipts: instances where ChatGPT variants reproduce the plaintiffs' reporting nearly verbatim. The publishers submitted side-by-side comparisons showing the model's output matching original articles with only minor differences. They argue these aren't glitches, but proof the models "memorized" the data and will spit it back out when prompted.

In its similar dispute with the New York Times, OpenAI argued that the newspaper manipulated the model with specific prompts until it reproduced content. The AI company claimed memorizing was a "bug," not an intended feature.

Courts around the world are still split on how to handle this. A Munich court recently ruled that an AI infringes copyright specifically because it memorizes and reproduces song lyrics. On the flip side, a British judge dismissed a claim against the Stable Diffusion image model, arguing the technology is transformative.

Beyond simple copying, the publishers accuse OpenAI of violating the Digital Millennium Copyright Act. They claim OpenAI systematically removed copyright management information—such as bylines, titles, and links to terms of use. The alleged strategy: scrub the data, present the output as generated text, and trick users into thinking it's free to use.

AI training threatens news business models

The plaintiffs argue they are often the sole source for local news in their regions, investing heavily in reporting funded by ads and subscriptions.

To protect that investment, they use paywalls and terms of use that explicitly ban scraping. These clauses specifically prohibit using content to train language models or for retrieval-augmented generation (RAG). The content is strictly for personal, non-commercial use.

The lawsuit alleges OpenAI and Microsoft simply ignored these rules. The companies supposedly scraped the sites, stripped the copyright notices, and used the text without a license for both training and direct search results. Microsoft is targeted not just as an infrastructure provider, but as a co-designer of the models and a direct beneficiary of the alleged theft.

The plaintiffs are seeking damages exceeding $10 billion, citing US laws that allow for up to $150,000 per work for willful infringement and up to $25,000 for removing copyright information. They argue that because higher-quality datasets were sampled more frequently during training, professional press content had a disproportionate impact on the models.

They also want the nuclear option: the destruction of all GPT models and training sets containing their work, a demand the New York Times also made in late 2023.

The mystery of the deleted book datasets

It’s not just newspapers. OpenAI faces ongoing litigation from authors and publishers over the books used to train its AI. The dispute focuses on internal datasets dubbed "Books1" and "Books2," which allegedly contain massive amounts of e-books downloaded from the pirate library Library Genesis (LibGen).

According to an opinion and order by Magistrate Judge Ona T. Wang, an OpenAI employee downloaded the collections from LibGen in 2018. These files formed internal corpora initially labeled "LibGen1" and "LibGen2," later renamed Books1 and Books2. Court docs indicate these datasets trained GPT-3 and GPT-3.5.

In mid-2022—roughly a year before the lawsuits started landing—OpenAI deleted Books1 and Books2. The company claims the deletion was "due to non-use," but has tried to shield details behind attorney-client privilege. Judge Wang rejected that argument, ordering OpenAI to hand over the documents by early December 2025.

Anthropic has already paid US authors to settle a similar case. In that instance, Judge William Alsup ruled that using pirated data was not permitted, regardless of "transformative use" arguments. However, he noted that training AI models on legally acquired books could potentially qualify as fair use.

AI News Without the Hype – Curated by Humans

As a THE DECODER subscriber, you get ad-free reading, our weekly AI newsletter, the exclusive "AI Radar" Frontier Report 6× per year, access to comments, and our complete archive.

Subscribe now