Paris-based Mistral releases Large 3, a major new open-source AI model

Key Points

- Mistral AI has introduced the Mistral 3 family, a suite of open, multimodal models ranging from efficient edge solutions to a high-performance flagship.

- The flagship "Mistral Large 3" uses a sparse Mixture-of-Experts architecture with 675 billion total parameters and is released under the open Apache-2.0 license.

- For local deployment, the company released three smaller "Ministral" models with 3, 8, and 14 billion parameters, each available in base, instruction-tuned, and reasoning variants.

Mistral AI is rolling out a new family of open, multilingual, and multimodal models called Mistral 3. The lineup ranges from compact options for edge deployments to a large Mixture-of-Experts model.

According to Mistral AI, the series includes three "Ministral" models with 3, 8, and 14 billion parameters, plus the flagship "Mistral Large 3." The flagship uses a sparse Mixture-of-Experts architecture and was trained on about 3,000 Nvidia H200 GPUs. Mistral lists 41 billion active parameters and 675 billion total parameters for the model.
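To put the two parameter counts in perspective, the short sketch below works out what share of the model's weights is active for any single token, and roughly how much memory the full set of weights would occupy. The 675 billion and 41 billion figures are the ones Mistral reports. The 2 bytes per parameter (16-bit weights) is an assumption made here for illustration, not a figure from the announcement, and the function names are hypothetical.

```python
# Parameter counts as reported by Mistral AI for Mistral Large 3.
TOTAL_PARAMS = 675e9   # all experts combined
ACTIVE_PARAMS = 41e9   # parameters used per token


def active_fraction(active: float, total: float) -> float:
    """Return the share of total parameters that is active per token."""
    if total <= 0:
        raise ValueError("total must be positive")
    return active / total


def weight_memory_gb(params: float, bytes_per_param: float) -> float:
    """Rough size of the weights alone in gigabytes (1 GB = 1e9 bytes)."""
    return params * bytes_per_param / 1e9


fraction = active_fraction(ACTIVE_PARAMS, TOTAL_PARAMS)
# Assumption: 16-bit weights, i.e. 2 bytes per parameter.
memory_gb = weight_memory_gb(TOTAL_PARAMS, 2)

print(f"Active share per token: {fraction:.1%}")        # 6.1%
print(f"Weights at 2 bytes/param: {memory_gb:,.0f} GB")  # 1,350 GB
```

In other words, only about one sixteenth of the weights take part in computing any given token, which is why a sparse model can be cheaper to run than its total size suggests. All of the weights still have to be stored, however, so memory requirements track the total count, not the active one.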

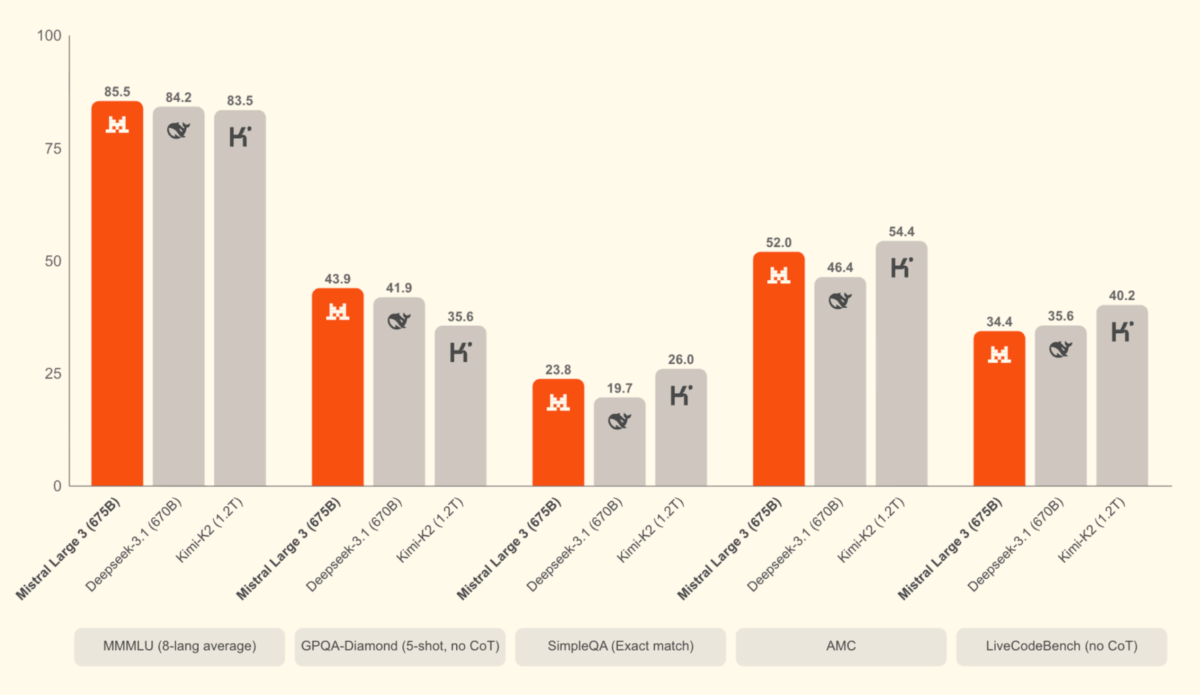

Mistral Large 3 is fully open source under the Apache-2.0 license. The company says it aims to match other leading open models on general language tasks while also handling images. On the LMArena leaderboard, it currently ranks second among open-source non-reasoning models and sixth among open-source models overall. In published benchmarks, its performance lines up with other open models like Qwen and DeepSeek. Still, DeepSeek released V3.2 yesterday, and that update shows clear improvements over the previous version in several tests.

What the new edge models mean for efficiency

The smaller "Ministral 3" variants target local and edge use. All three sizes (3B, 8B, and 14B) come in base, "Instruct," and "Reasoning" versions, each with image understanding. These models are also released under the Apache-2.0 license.

Mistral says the instruction-tuned models perform on par with similar open-source options while generating far fewer tokens. The reasoning versions are built for deeper analytical tasks. According to the company, the 14B model reached 85 percent on the AIME-25 benchmark.

The models are available through Mistral AI Studio, Hugging Face, and cloud platforms including Amazon Bedrock, Azure Foundry, IBM WatsonX, and Together AI. Support for Nvidia NIM and AWS SageMaker is planned. Mistral says it worked closely with Nvidia while developing the new model.
