Google Deepmind gives Gemini 3 Flash the ability to actively explore images through code

Key Points

- Google Deepmind extends Gemini 3 Flash with "Agentic Vision": The model can actively zoom, crop, and manipulate images by generating and executing Python code - instead of just passively processing them once.

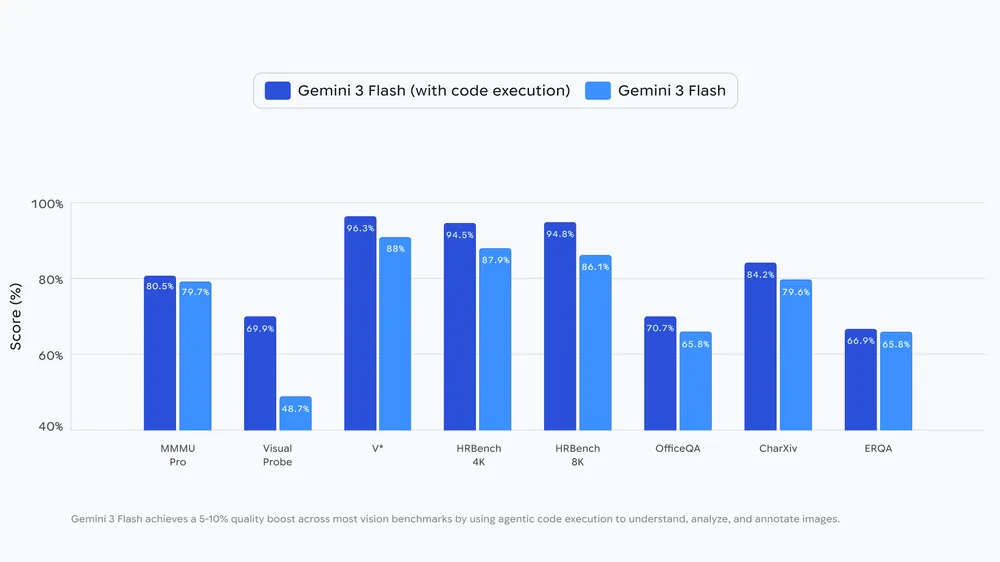

- Google says the technology improves benchmark results by 5 to 10 percent. A construction planning startup is already using the feature to iteratively check high-resolution blueprints for code compliance.

- Many features like image rotation or visual math still require explicit instructions in the prompt. Google plans to release updates and expand to other models.

Google Deepmind is adding a new capability called "Agentic Vision" to its Gemini 3 Flash model. Instead of passively viewing images, the model can now actively investigate them - though not all features work automatically yet.

Traditional AI models process images in a single pass. If they miss a detail, they're stuck guessing. Google Deepmind wants to change that with Agentic Vision. The model can now zoom, crop, and manipulate images step by step by generating and running Python code.

The system works through a think-act-observe loop. The model first analyzes the request and image, then formulates a plan. Next, it generates and executes Python code - for cropping, rotating, or annotating images, for example. The result gets added to the context window, letting the model inspect the new data before responding. According to Google, code execution delivers a 5 to 10 percent quality improvement across various vision benchmarks.

The concept isn't entirely new, though - OpenAI introduced similar capabilities with its o3 model.

Blueprint analysis startup reports accuracy gains

As a real-world example, Google points to PlanCheckSolver.com, a platform that checks construction blueprints for code compliance. The startup says it improved its accuracy by 5 percent by having Gemini 3 Flash iteratively inspect high-resolution plans. The model crops areas like roof edges or building sections and analyzes them individually.

For image annotation, the model can draw bounding boxes and labels on images. Google demonstrates this with finger counting - the model marks each finger with a box and number to avoid counting errors.

For visual math problems, the model can parse tables and run calculations in a Python environment instead of hallucinating results. It can then output the results as charts.

Many features still require explicit instructions

Google acknowledges that not all capabilities work automatically yet. While the model already handles zooming into small details on its own, other features like rotating images or visual math still need explicit prompts. The company plans to address these limitations in future updates.

Agentic Vision is also currently limited to the Flash model. Google says it plans to expand to other model sizes and add tools like web search and reverse image search.

Agentic Vision is available through the Gemini API in Google AI Studio and Vertex AI. The rollout has started in the Gemini app - users can select "Thinking" in the model dropdown. A demo app and developer documentation are also available.

AI News Without the Hype – Curated by Humans

As a THE DECODER subscriber, you get ad-free reading, our weekly AI newsletter, the exclusive "AI Radar" Frontier Report 6× per year, access to comments, and our complete archive.

Subscribe now