Are Google's Gemini and OpenAI's GPT-4 peak LLM?

Key Points

- Google's Gemini Ultra outperforms OpenAI's GPT-4 in 30 of 32 benchmarks, but the margin is often small, raising the question of whether current LLMs are approaching their limits.

- Google CEO Sundar Pichai believes that there is still a lot of room for improvement in scaling language models, and that further progress will be made as models become larger and more complex.

- The race between Google and OpenAI continues. The open question is how quickly and how strongly OpenAI can respond with GPT-5, which, according to a leak, could be ready in early 2024.

With Gemini, Google is the first company to offer a more powerful LLM than OpenAI's GPT-4, if current benchmarks are to be believed.

However, the fact that the Ultra version of Gemini beats GPT-4 in 30 out of 32 benchmarks is not the big news about Google's LLM release. The big news is that Gemini barely beats GPT-4.

Even the more compact Gemini Pro is only on par with OpenAI's year-old GPT-3.5. This raises the question: couldn't Google do better, or have LLMs already reached their limits? Bill Gates, who still has close ties to Microsoft and OpenAI, believes the latter.

Google CEO Pichai still believes in LLM scaling and sees "a lot of headroom"

In an interview with MIT Technology Review, Google CEO Sundar Pichai says, "The scaling laws are still going to work." He expects AI models to become more powerful and efficient as they grow in size and complexity, and says Google still sees "a lot of headroom" for scaling language models.

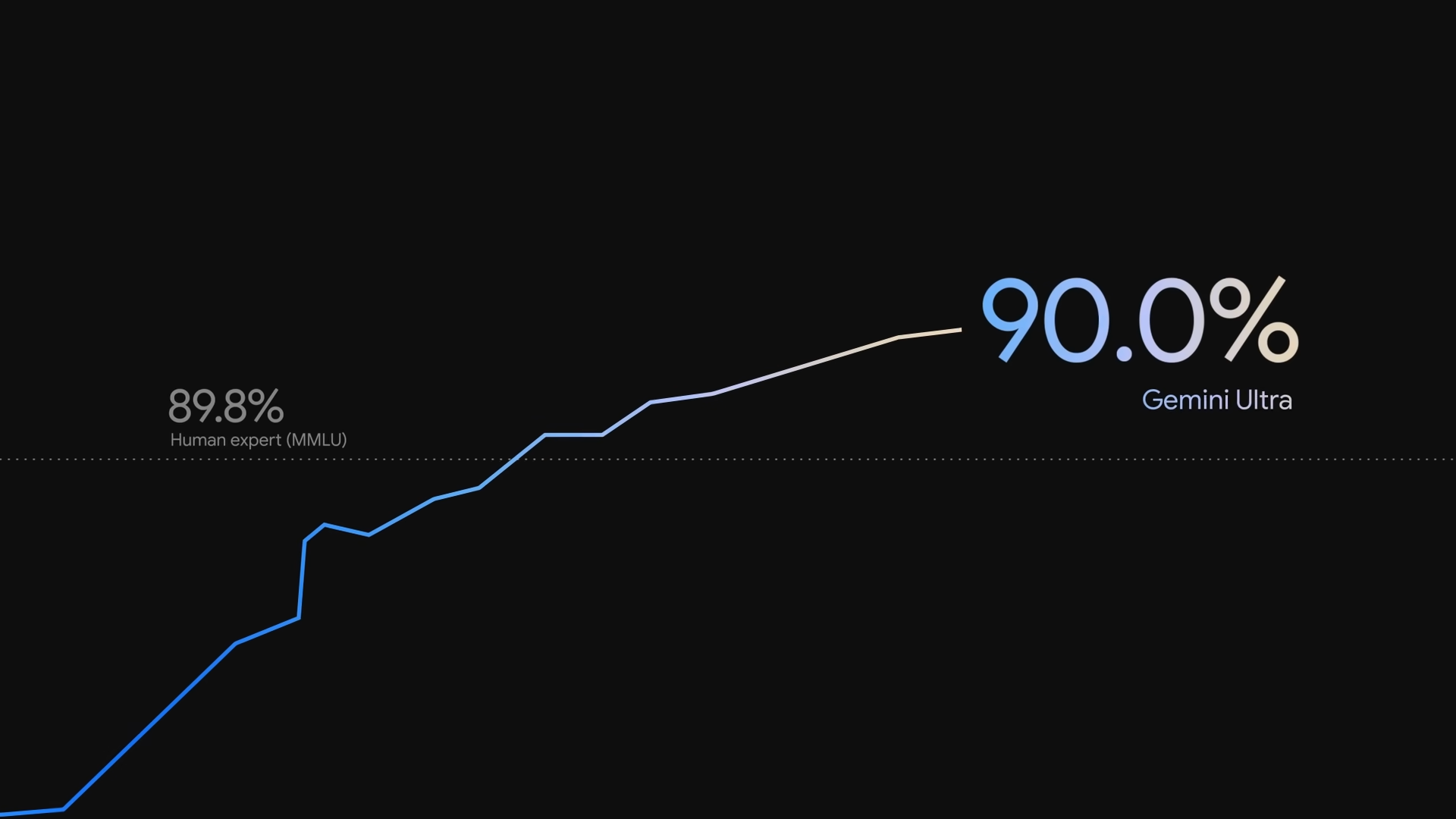

To make this progress measurable, new benchmarks are needed. Pichai points to the widely used MMLU (Massive Multitask Language Understanding) benchmark, on which Gemini Ultra is the first model to exceed 90 percent, ahead of human experts (89.8 percent). Just two years ago, MMLU scores of 30 to 40 percent were the norm, Pichai says.

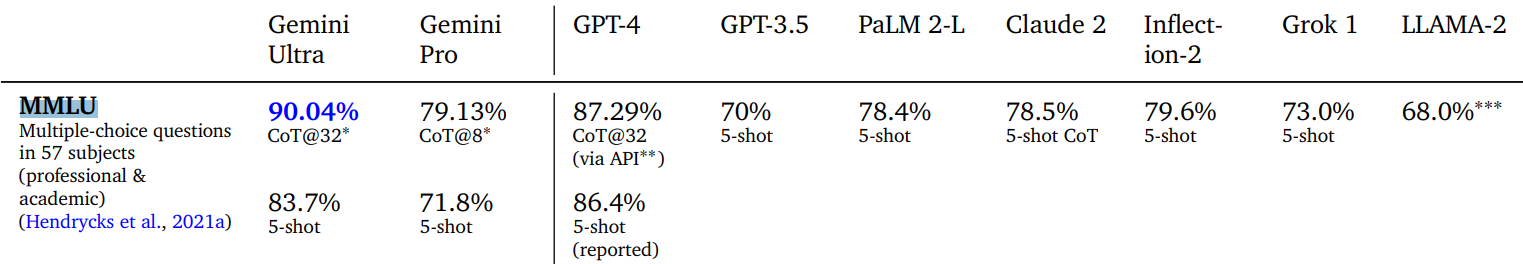

However, the MMLU numbers in Gemini's technical report, which Google did not highlight on stage, show that Gemini Ultra beats GPT-4 with only one of the two prompting methods: the more elaborate CoT@32 (chain-of-thought prompting with 32 samples). With the method OpenAI reported (5-shot, i.e. five solved examples in the prompt), Gemini Ultra scores lower than GPT-4.

This shows how close GPT-4 and Gemini are in many areas. Even older language models like PaLM 2 are not completely left behind in the MMLU benchmark.
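The two MMLU setups differ in how an answer is produced. In 5-shot prompting, the model sees five solved example questions before the test question and answers once. CoT@32, as Google's technical report describes it, has the model generate 32 chain-of-thought answers; if enough of them agree, the majority answer is used, otherwise the model falls back to its single most likely ("greedy") answer. The Python sketch below illustrates both ideas under stated assumptions: the helper names, the prompt format, and the 0.6 consensus threshold are hypothetical, and no real model is called.

```python
from collections import Counter
from typing import List, Tuple


def build_five_shot_prompt(examples: List[Tuple[str, str]], question: str) -> str:
    """Hypothetical helper: five solved examples, then the unanswered test question."""
    if len(examples) != 5:
        raise ValueError("5-shot prompting uses exactly five examples")
    parts = [f"Q: {q}\nA: {a}" for q, a in examples]
    parts.append(f"Q: {question}\nA:")  # the model would complete this final answer
    return "\n\n".join(parts)


def uncertainty_routed_answer(samples: List[str], greedy: str, threshold: float = 0.6) -> str:
    """Majority vote over k chain-of-thought answers (k = 32 for CoT@32).

    Falls back to the greedy answer when the winning share is below `threshold`.
    The 0.6 default is a made-up value for illustration only.
    """
    if not samples:
        return greedy
    answer, count = Counter(samples).most_common(1)[0]
    if count / len(samples) >= threshold:
        return answer
    return greedy


# Made-up sample answers, not real model output
confident = ["B"] * 24 + ["C"] * 8
split = ["A"] * 12 + ["B"] * 10 + ["C"] * 10
print(uncertainty_routed_answer(confident, greedy="C"))  # B
print(uncertainty_routed_answer(split, greedy="C"))      # C
```

With 24 of 32 samples answering "B", the consensus share is 0.75 and the majority vote wins; with a 12/10/10 split, the share is only 0.375 and the greedy answer is kept. Extra sampling like this costs 32 model calls per question, which is one reason the two setups are not directly comparable.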

According to Pichai, many current benchmarks have already reached their limits, which also makes progress harder to perceive. "So it may not seem like much, but it’s making progress," Pichai said.

There is more room for improvement in other areas, such as multimodal processing and task handling, for example the ability to respond to an image with appropriate text. Here Gemini makes bigger leaps, though still nothing groundbreaking.

"As we make the models bigger, there’s going to be more progress. When I take it in the totality of it, I genuinely feel like we are at the very beginning," Pichai says.

Waiting for GPT-5

If Pichai's prediction is correct, it also means that, despite years of investment in AI R&D and massive research and computing resources, it took Google several months to adapt its internal processes and structures to LLM scaling well enough to beat GPT-4, if only narrowly. About nine months have passed since GPT-4 was released. The merger of Google Brain with DeepMind may have cost additional time.

With GPT-3, OpenAI had already brought a large, ready-to-use language model to market in 2020, after spending the preceding years building the necessary structures. But Google had also built large language models with PaLM and its predecessors, so scaling LLMs was nothing new to the company.

Larger leaps may first require advances in the underlying LLM architecture, which takes more research time. The last big leap, the Transformer architecture, which enabled the scaling principles OpenAI later exploited, came from Google.

So the race continues, and the most interesting question right now is: How fast and how strong can OpenAI counter Gemini with GPT-5?

So far, OpenAI has officially stated that its next large language model is far from ready for market, but CEO Sam Altman also said in early November that today's AI will look "quaint" by next year. According to the latest leak, GPT-5 could be ready in early 2024. Perhaps a GPT-4.5 would already be enough.
