AI software fixes eye contact in videos for 10 cents a minute

Sieve, an AI startup, has launched a new API that it says fixes eye contact in videos with a single call. According to the company, the technology automatically adjusts gaze direction in real time to make videos more engaging.

Poor eye contact can make video content feel impersonal. Sieve aims to solve this with an API it says integrates quickly into existing apps.

The company claims its solution improves on previous approaches that often gave poor results or needed complex setups. Sieve sees uses in screen recording, video editing, and broadcasting, as well as other cases where speakers must look directly at the camera.

Real-time gaze correction

The AI model first examines the eye area, using facial recognition to locate key features and work out the head's position in 3D.

The AI then separates the eye region and runs it through a neural network. The network estimates the current gaze angle and adjusts the eyes so that the speaker appears to make direct eye contact.

To look natural, the amount of correction changes based on head position. The AI also detects blinks and moments when the eyes are briefly covered, and pauses the adjustment during them.
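The gating logic described above can be illustrated with a minimal Python sketch. It is not Sieve's implementation: the `FrameState` fields, the linear falloff, and the 30-degree cutoff are all assumptions chosen for illustration. The sketch only shows the idea that correction weakens as the head turns away from the camera and stops while the eyes are not visible.

```python
from dataclasses import dataclass


@dataclass
class FrameState:
    """Hypothetical per-frame measurements; the field names are assumptions."""
    yaw_deg: float        # head rotation left/right, 0 = facing the camera
    pitch_deg: float      # head rotation up/down, 0 = facing the camera
    eyes_occluded: bool   # True during a blink or when the eyes are covered


def correction_strength(frame: FrameState, max_angle_deg: float = 30.0) -> float:
    """Return a weight in [0, 1] for how strongly to redirect the gaze.

    The linear falloff and the 30-degree cutoff are illustrative assumptions.
    """
    if frame.eyes_occluded:
        # Pause the adjustment while the eyes cannot be seen.
        return 0.0
    largest_turn = max(abs(frame.yaw_deg), abs(frame.pitch_deg))
    return max(0.0, 1.0 - largest_turn / max_angle_deg)


def corrected_gaze(gaze_deg: float, strength: float) -> float:
    """Blend an estimated gaze angle toward 0 degrees (looking at the camera)."""
    return gaze_deg * (1.0 - strength)
```

With these assumed values, a speaker facing the camera gets full correction, a head turned 15 degrees gets half, and a blink or a turn past 30 degrees leaves the original gaze untouched.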

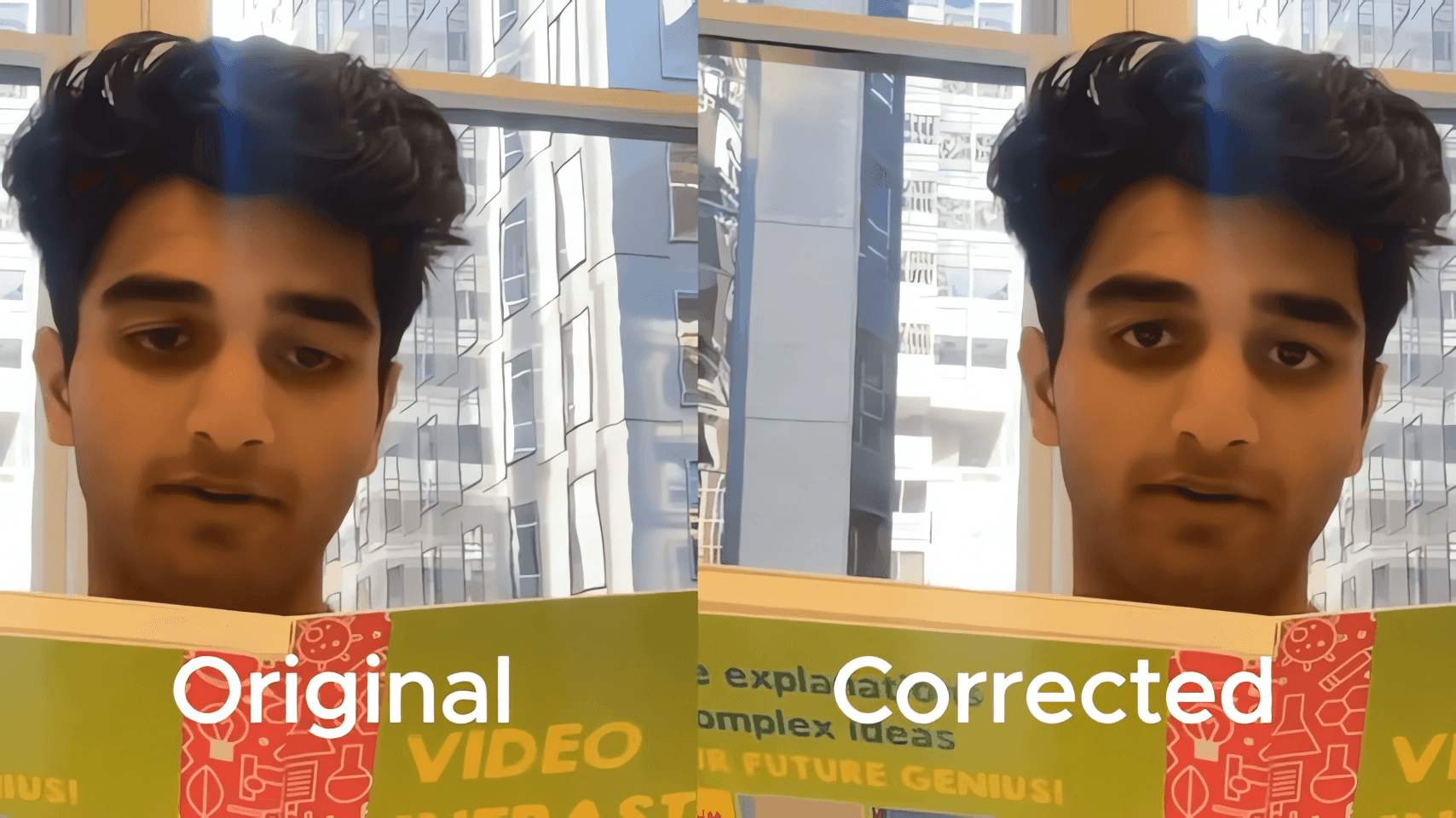

Sieve says its gaze correction feels more natural than existing solutions. | Video: Sieve

Sieve claims the entire process runs with minimal latency, enabling real-time gaze correction. This is similar to the company's SieveSync system from September, which lets users adjust lip movements in videos after filming.

Users can try the model on their own videos in a test area. The API costs 10 cents per minute of video processed. Sieve also gives instructions for adding the technology to Python apps.
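For a rough sense of what the quoted price means in practice, the following sketch estimates processing cost from video length. Only the rate of 10 cents per minute comes from Sieve; the function name is hypothetical, and billing pro rata by the second is an assumption, since the article does not say how partial minutes are charged.

```python
def estimate_cost_cents(duration_seconds: float,
                        rate_cents_per_minute: float = 10.0) -> float:
    """Estimate processing cost in cents.

    Assumes pro-rata billing by the second; actual rounding rules may differ.
    """
    if duration_seconds < 0:
        raise ValueError("duration_seconds must be non-negative")
    return duration_seconds * rate_cents_per_minute / 60.0


# A 45-minute recording (2,700 seconds) under these assumptions:
cost_dollars = estimate_cost_cents(45 * 60) / 100
```

Under these assumptions, a 90-second clip would cost 15 cents and a 45-minute recording would cost 4.50 dollars.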

The company says it took inspiration from Nvidia's broadcast tech and LivePortrait's ability to change parts of the face.
