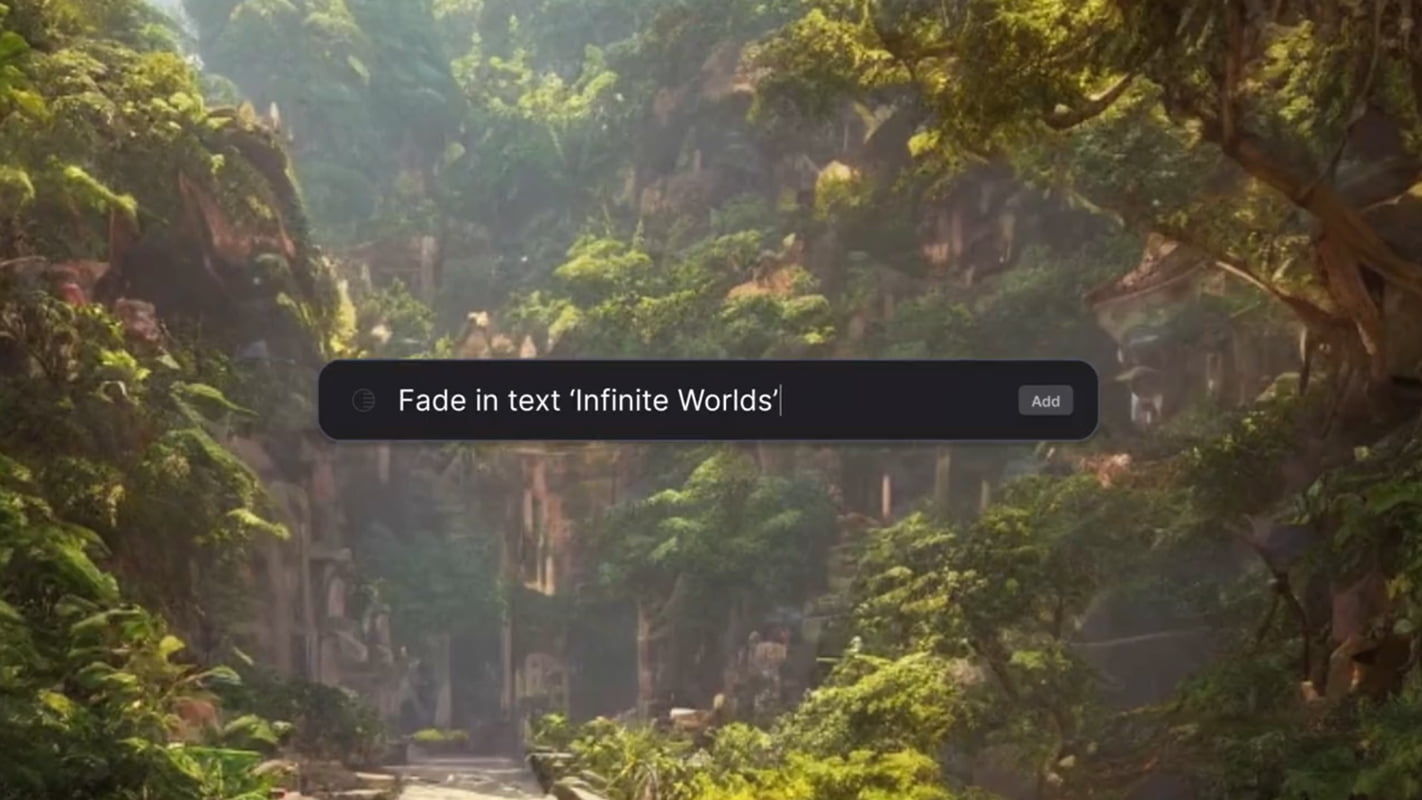

Startup Runway shows off an impressive AI video editor controlled by text input. The editor uses the open-source image AI Stable Diffusion.

New York-based startup Runway offers an online editor that aims to make content creation accessible to all. To achieve this, the company relies on automation through artificial intelligence.

Its AI can already, for example, replace backgrounds using a digital green screen, change a video's style, or remove objects from footage. The company is rapidly adding new tools for AI-supported content creation to its platform.

Video: RunwayML

Runway has raised more than $45.5 million in funding from investors since its founding in 2018, including $35 million in December 2021 alone. The growing maturity of AI applications for images and videos may have helped in talks with investors.

Runway shows off new 'text-to-video' feature

In its backend, the AI startup uses a whole range of AI models for different features, including GAN models that generate images which can then serve as backgrounds in a video, for example.

Recently, Runway started integrating Stable Diffusion, a powerful open-source text-to-image model. Runway was already actively involved in creating Stable Diffusion: staff member Patrick Esser was a researcher at the University of Heidelberg before joining Runway and helped develop VQGAN and Latent Diffusion.

In a new promotional clip, Runway now demonstrates an impressive text-based control for its video editor.

Make any idea real. Just write it.

Text to video, coming soon to Runway.

Sign up for early access: https://t.co/ekldoIshdw pic.twitter.com/DCwXcmRcuK

— Runway (@runwayml) September 9, 2022

Using simple text commands, users can import video clips, generate images, change styles, cut out characters, or activate tools.

Text-to-video feature is actually a text control

Runway markets the new feature as text-to-video, an apt description that can nonetheless be confusing: in technical jargon, AI systems such as DALL-E 2, Midjourney, or Stable Diffusion are called text-to-image systems. They take text input and generate a matching image.

However, Runway's text-to-video feature controls existing video tools via text input. It is not a generative video model that produces video from text input.

Generative video models do exist: DeepMind recently showed its Transframer model, a general-purpose generative framework that can create 30-second video clips from a single image. But such models still lag far behind the now quite mature image models in quality.

Transframer is a general-purpose generative framework that can handle many image and video tasks in a probabilistic setting. New work shows it excels in video prediction and view synthesis, and can generate 30s videos from a single image: https://t.co/wX3nrrYEEa 1/ pic.twitter.com/gQk6f9nZyg

— DeepMind (@DeepMind) August 15, 2022

Nevertheless, Runway's new feature will greatly simplify the workflows of many creatives. It also shows that the startup's bet on simple, AI-powered video production is paying off.