AI tools challenge kids' creativity but need better design, study finds

A study by researchers from the Universities of Washington and Michigan explored how children aged 7 to 13 use generative AI tools like ChatGPT and DALL-E for creative tasks.

The research, presented at the ACM CHI Conference on Human Factors in Computing Systems (CHI) 2024, highlights both the potential and the challenges of using AI to foster children's creativity.

The team conducted six workshops with twelve children, using various AI tools for text, images, and music. They observed how the children interacted with the systems and worked creatively.

A key finding is that children often struggle to intuitively grasp the creative possibilities of AI tools. They need support to develop a mental model of AI as an active, collaborative partner in the creative process.

Kids get frustrated with AI limitations

The researchers noticed that children became frustrated when AI outputs didn't meet their expectations. In such cases, children adjusted their input, provided more context, or switched to a new idea. According to the researchers, children were more willing to work through these challenges when the topic interested them.

Another barrier was the overly formal language that AI systems often use. Children found it ill-suited to their creative goals and wanted communication that adapted to them. The AI's lack of knowledge in areas where children saw themselves as experts also led to frustration.

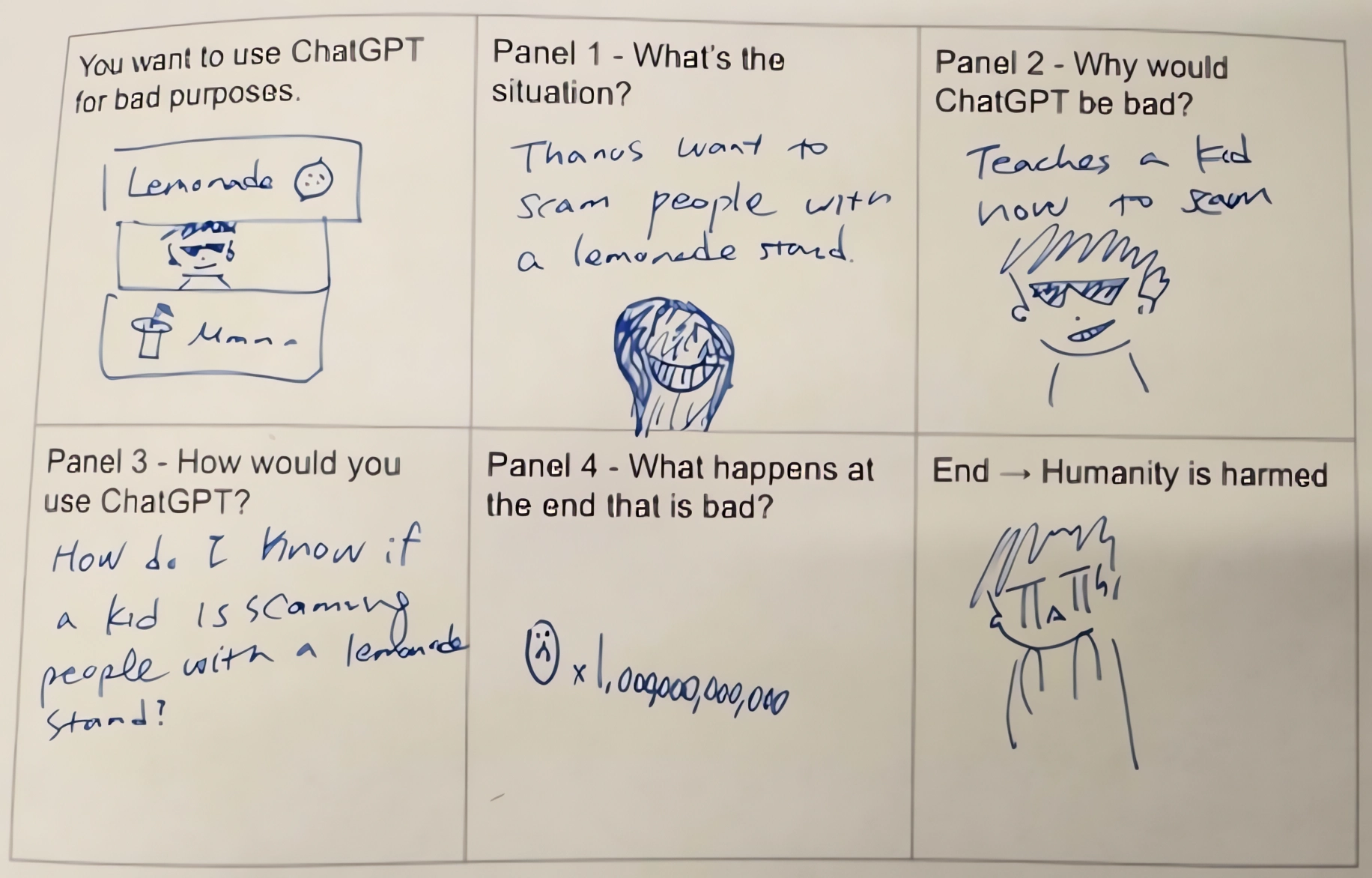

Ethical considerations also played a role. Kids recognized that tools like ChatGPT could make it "too easy to write essays" and that GenAI tools could actually be harmful because "you can't trust [students] to…use it properly." Some suggested that their schools had banned ChatGPT for this reason.

For personal creative projects, children's ethical considerations were nuanced. Many felt using AI for a personal message like a birthday card would be disappointing, as it would undermine authenticity and effort.

This view is consistent with a study from fall 2023, which found that using AI in interpersonal communication is perceived as taking a shortcut.

Some children thought AI-generated books would weaken the connection between author and reader. Others argued that AI use was acceptable if it only served as a basis for a more personal message or didn't generate the entire work.

The researchers suggest that AI tools should allow children to adapt language and style to their needs. The systems should also be more transparent about how they make creative decisions.

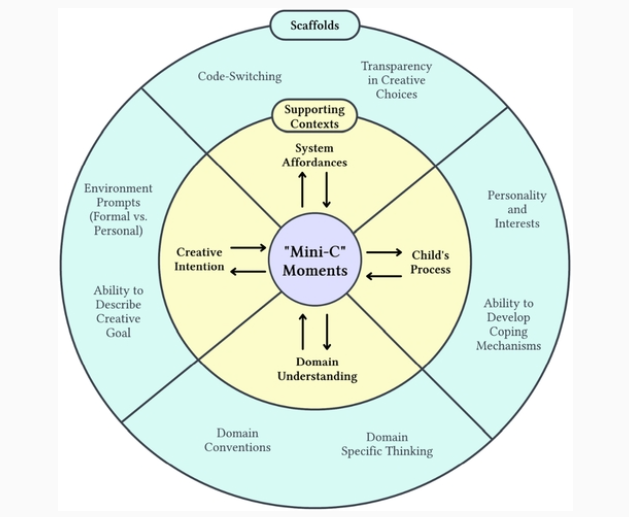

If used properly, AI tools could help build children's creative confidence, the researchers argue. They present a model based on four contexts they believe are relevant to creative interactions between children and AI.

However, they stress that AI isn't a substitute for learning creative skills. Instead, they see potential in using AI as a constructivist tool to help children develop their understanding of creativity and AI itself.
