A study shows how smartphone cameras encode acoustic information from their surroundings in the pixels of a video, even when the microphone is turned off.

In a new paper, researchers from the U.S. show how smartphone cameras with CMOS rolling-shutter sensors, optical image stabilization (OIS), and autofocus embed acoustic information in videos through subtle artifacts. According to the team, these artifacts are caused by lens vibrations induced by ambient sound.

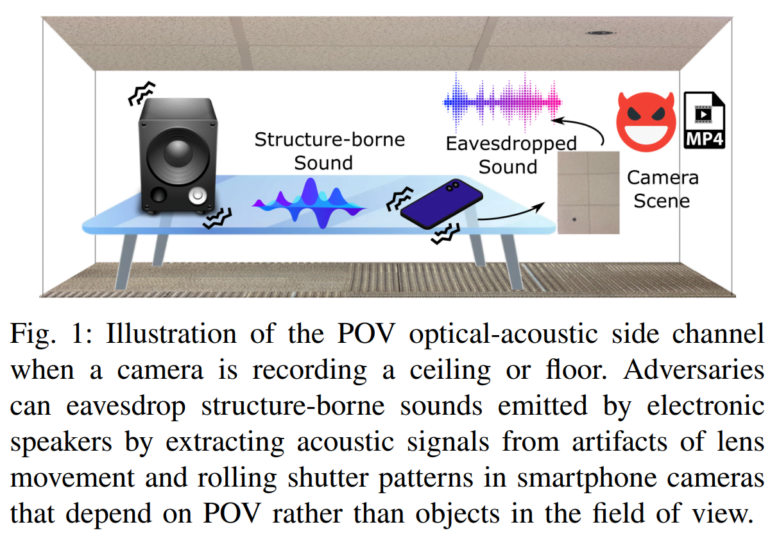

Specifically, ambient sound causes the smartphone casing and the movable camera lens to vibrate. The rolling shutter, which reads the image sensor out row by row, amplifies these tiny lens vibrations and encodes them as artifacts in the video: imperceptible image distortions that carry acoustic information.
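To make the timing argument concrete, here is a minimal Python sketch of an idealized rolling shutter. It is not the researchers' code. The frame rate, row count, tone frequency, and all function names are assumptions chosen for illustration, and the model ignores blanking intervals and exposure time. It shows that if every image row is read at a slightly different moment, a single frame samples the lens position many times rather than once.

```python
import math

def row_times(frame_index, fps, rows):
    """Readout timestamp of each row, assuming rows are read evenly across the frame period."""
    frame_period = 1.0 / fps
    row_period = frame_period / rows
    start = frame_index * frame_period
    return [start + r * row_period for r in range(rows)]

def lens_shift(t, tone_hz, amplitude_px=0.5):
    """Hypothetical horizontal image shift (in pixels) caused by a pure tone at time t."""
    return amplitude_px * math.cos(2 * math.pi * tone_hz * t)

def estimate_tone_hz(times, shifts):
    """Rough frequency estimate from sign changes: two zero crossings per cycle."""
    crossings = sum(1 for a, b in zip(shifts, shifts[1:]) if a * b < 0)
    duration = times[-1] - times[0]
    return crossings / (2 * duration)

# Assumed example values, not taken from the study.
FPS, ROWS, TONE_HZ = 30, 1080, 440.0

times = row_times(0, FPS, ROWS)
shifts = [lens_shift(t, TONE_HZ) for t in times]

samples_per_second = FPS * ROWS          # one displacement sample per row
estimate = estimate_tone_hz(times, shifts)
print(samples_per_second, round(estimate))
```

Under these assumptions, a 30 fps video with 1080 rows yields 32,400 row samples per second, enough to follow a 440 Hz tone within a single frame. Sampling only once per frame, at 30 samples per second, could not capture a tone that high.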

The study shows the signal path and modulation process of this "optical-acoustic side channel" and demonstrates how AI methods can be used to extract acoustic signals by tracking motion between video frames.
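The idea of recovering a signal by tracking motion can be sketched with a deliberately simple stand-in: brute-force matching of each image row against a reference row. This is not the method used in the paper, which the article describes only as AI-based. The synthetic texture, the helper names, and the integer-pixel shifts are all assumptions made for illustration.

```python
def make_texture(width):
    """Deterministic, non-repeating 1D brightness pattern (illustrative only)."""
    return [(7 * x * x + 3 * x) % 31 for x in range(width)]

def shift_row(row, shift):
    """Shift a row right by `shift` pixels (left if negative), clamping at the edges."""
    last = len(row) - 1
    return [row[min(max(x - shift, 0), last)] for x in range(len(row))]

def estimate_row_shift(ref_row, cur_row, max_shift=3):
    """Return the integer shift that minimizes the mean squared difference."""
    best_shift, best_err = 0, float("inf")
    n = len(ref_row)
    for s in range(-max_shift, max_shift + 1):
        err = 0.0
        # Skip the borders so that x + s always stays inside the row.
        for x in range(max_shift, n - max_shift):
            d = cur_row[x + s] - ref_row[x]
            err += d * d
        err /= n - 2 * max_shift
        if err < best_err:
            best_shift, best_err = s, err
    return best_shift

def frame_to_signal(ref_frame, cur_frame, max_shift=3):
    """One displacement estimate per row: a crude 'audio' sample sequence."""
    return [estimate_row_shift(r, c, max_shift) for r, c in zip(ref_frame, cur_frame)]

# Simulate a frame whose rows were displaced by a slowly varying vibration.
true_shifts = [0, 1, 2, 1, 0, -1, -2, -1]
texture = make_texture(64)
ref_frame = [texture for _ in true_shifts]
cur_frame = [shift_row(texture, s) for s in true_shifts]

recovered = frame_to_signal(ref_frame, cur_frame)
print(recovered)
```

In this toy setup the recovered per-row shifts match the simulated ones. Real footage would involve sub-pixel motion, noise, and scene content that changes between frames, which is presumably where learned models become necessary.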

Side Eye is "basically a very rudimentary microphone"

"Most of the cameras today have what's called image stabilization hardware," explains Kevin Fu, a professor of electrical engineering and computer science at Northeastern University. "It turns out that when you speak near a camera lens that has some of these functions, a camera lens will move ever so slightly, what's called modulating your voice, onto the image and it changes the pixels." Thousands of such movements can be recorded per second. "It means you basically get a very rudimentary microphone," Fu says.

In their experiments with ten smartphones placed near loudspeakers on a table, the team achieved accuracies of nearly 81 percent, 91 percent, and 99.5 percent in classifying ten spoken numbers, 20 different speakers, and gender, respectively. In this setup, the microphone was turned off, the team had access only to the video stream, and the camera was pointed at the table or the ceiling.

Team suggests security measures

This method can be used, for example, to determine with nearly 100 percent accuracy the gender of a person who is in the room but not visible in the video. In addition to the obvious potential for espionage or other attacks, Fu also sees applications in law enforcement.

"For instance in legal cases or in investigations of either proving or disproving somebody’s presence, it gives you evidence that can be backed up by science of whether somebody was likely in the room speaking or not," Fu says. "This is one more tool we can use to bring authenticity to evidence."

The team also proposes hardware changes that address the root causes, namely rolling shutters and movable lenses. Suggested measures include increasing the shutter speed, randomizing shutter patterns, and mechanically blocking lens movement. A combination of defenses could reduce the method's accuracy to the level of random guessing.