Alongside Gemini, Google also presented AlphaCode 2. The code model outperformed 85 % of human participants in competitive programming contests.

In February 2022, DeepMind unveiled AlphaCode, an AI that writes code at a competitive level. Unlike OpenAI's Codex, AlphaCode used an encoder-decoder Transformer architecture. AlphaCode's largest model had 41.4 billion parameters and was trained on 715 gigabytes of code samples from GitHub. After training, AlphaCode solved 34.2 % of all problems in the CodeContests dataset and achieved an average ranking in the top 54.3 % across ten Codeforces competitions. This means that AlphaCode outperformed almost 46 % of the human participants.
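The step from "top 54.3 %" to "almost 46 %" is a simple subtraction. A minimal sketch of that conversion follows; the function name is hypothetical and only serves this illustration.

```python
def share_outperformed(top_percent: float) -> float:
    """Convert a 'top X %' ranking into the percentage of participants outperformed."""
    if not 0.0 <= top_percent <= 100.0:
        raise ValueError("top_percent must be between 0 and 100")
    return 100.0 - top_percent


# AlphaCode's average ranking was in the top 54.3 %.
print(round(share_outperformed(54.3), 1))  # 45.7
```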

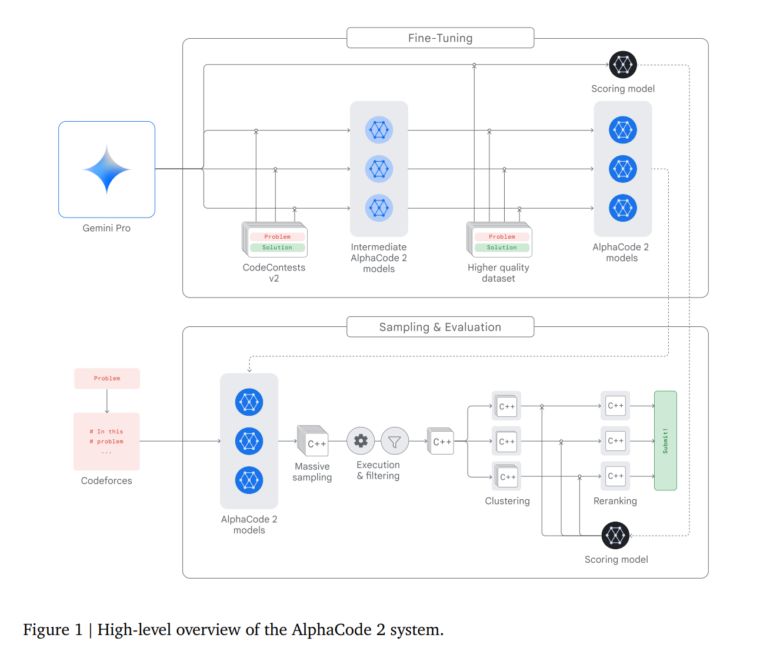

Compared to its predecessor, AlphaCode 2 shows a significant leap in performance, outperforming 85 % of human competitors. Google DeepMind attributes this increase to the use of the decoder-based Gemini Pro model as the basis for all components of AlphaCode 2.

AlphaCode 2 uses Gemini Pro models and massive sampling

AlphaCode 2 consists of several components, including a family of policy models to generate code samples, a sampling mechanism to generate large numbers of code samples, a filtering mechanism to remove non-compliant code samples, a clustering algorithm to group similar code samples, and a scoring model to select the best candidates from the largest clusters of code samples.
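The filter, cluster, and score stages described above can be sketched roughly as follows. This is an illustration under stated assumptions, not DeepMind's implementation: the names `select_candidates`, `is_compliant`, `behavior`, `score`, and `max_clusters` are hypothetical, grouping samples by their outputs on a few extra inputs is only one plausible way to define "similar", and tiny Python functions stand in for generated C++ programs.

```python
from collections import defaultdict
from typing import Callable, Hashable, Iterable


def select_candidates(
    samples: Iterable[str],
    is_compliant: Callable[[str], bool],
    behavior: Callable[[str], Hashable],
    score: Callable[[str], float],
    max_clusters: int,
) -> list[str]:
    """Filter samples, cluster the survivors, and pick one candidate per large cluster."""
    # Filtering: drop samples that fail the compliance check.
    compliant = [s for s in samples if is_compliant(s)]

    # Clustering: group samples that share the same behavior signature (assumption).
    clusters: dict[Hashable, list[str]] = defaultdict(list)
    for s in compliant:
        clusters[behavior(s)].append(s)

    # Keep only the largest clusters.
    largest = sorted(clusters.values(), key=len, reverse=True)[:max_clusters]

    # Scoring: pick the highest-scoring sample from each remaining cluster.
    return [max(cluster, key=score) for cluster in largest]


# Toy stand-ins for generated programs and for a scoring model (all hypothetical).
PROGRAMS = {
    "double": lambda x: 2 * x,
    "add_x": lambda x: x + x,
    "square": lambda x: x * x,
    "plus_one": lambda x: x + 1,
}
SCORES = {"double": 0.9, "add_x": 0.7, "square": 0.4, "plus_one": 0.8}

picked = select_candidates(
    samples=PROGRAMS,
    is_compliant=lambda name: PROGRAMS[name](2) == 4,  # one example test: 2 -> 4
    behavior=lambda name: tuple(PROGRAMS[name](x) for x in (3, 5)),
    score=lambda name: SCORES[name],
    max_clusters=2,
)
print(picked)  # ['double', 'square']
```

In this toy run, `plus_one` is filtered out, `double` and `add_x` land in the same cluster because they behave identically, and the scoring step picks one representative from each of the two clusters.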

The system relies on massive sampling, generating up to one million code samples per problem. Samples are drawn evenly across the family of policy models, and AlphaCode 2 uses only C++ samples.
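To illustrate what an even split of the sampling budget might look like, here is a minimal sketch. The function name `split_budget` and the number of models are assumptions chosen for the example; the source does not state how many models the family contains.

```python
def split_budget(total_samples: int, num_models: int) -> list[int]:
    """Divide a sampling budget as evenly as possible across models."""
    if num_models <= 0:
        raise ValueError("num_models must be positive")
    base, remainder = divmod(total_samples, num_models)
    # The first `remainder` models each take one extra sample so the total is preserved.
    return [base + (1 if i < remainder else 0) for i in range(num_models)]


# Hypothetical family of three models sharing a budget of one million samples.
print(split_budget(1_000_000, 3))  # [333334, 333333, 333333]
```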

The team tested AlphaCode 2 on the Codeforces platform. A total of 77 problems from 12 recent competitions with over 8,000 participants were evaluated. AlphaCode 2 solved 43 % of these competition problems, almost twice as many as the original AlphaCode. In the two highest-scoring competitions, AlphaCode 2 outperformed 99.5 % of the participants. The team believes that performance can be improved even further with Gemini Ultra.

Google DeepMind sees the future in human-machine collaboration

Despite the impressive results of AlphaCode 2, a lot of work is still needed to develop systems that can reliably match the performance of the best human programmers. The system also requires a lot of trial and error and is too expensive to be used on a large scale.

However, AlphaCode 2 opens up the possibility of productive interaction between the system and human programmers, who can specify additional filter properties. In this combination of AlphaCode 2 and human programmers, the system even scores above the 90th percentile. The developers at Google DeepMind hope that this kind of interactive programming will be the future of programming, where programmers use AI models as collaborative tools to analyze problems, suggest code designs, and help with implementation.