AlphaCode is Deepmind's first programming artificial intelligence. In one test, the AI already reaches human levels.

With the triumph of Transformer models in natural language processing, researchers realized that text-generating models could also generate code. For example, in the days after its release, OpenAI's GPT-3 model showed unexpected coding abilities, such as converting text descriptions into HTML code.

OpenAI also recognized the potential of NLP models for programming and developed Codex, a transformer model specialized for generating code that is available through OpenAI's API. Microsoft has implemented a variant in the AI code assistance software Copilot for GitHub.

OpenAI's contribution to machine programming is more than an autocomplete for code: The AI can write simple programs from simple instructions. However, Codex falls far short of the human ability to solve more complex problems that go beyond translating instructions into code.

Deepmind's AlphaCode aims to find novel solutions

Deepmind, Alphabet's AI company behind AlphaZero, MuZero, AlphaFold, and AlphaStar, is now introducing AlphaCode, an AI model designed to write "competition-level" code. Deepmind tested AlphaCode for this with programming competitions from Codeforces, a platform specializing in code competitions.

Like OpenAI's Codex, Deepmind's AlphaCode relies on a Transformer architecture. Unlike Codex, however, AlphaCode is an encoder-decoder model. Codex, like GPT-3, is a decoder-only model.

The main difference between the two models is how they process the context of the input data: A decoder model like GPT-3 considers context in only one direction when processing an input, such as a single sentence. Each word is represented using only the words that precede it.

An encoder model, such as Google's BERT, processes both directions when representing a word. Therefore, an encoder better represents the meaning of a whole sentence, and a decoder is better suited for text generation.
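The difference in context can be made concrete with attention masks, which mark the positions each word is allowed to look at. The following is a minimal sketch in plain Python, not code from GPT-3, BERT, or AlphaCode; the function names `causal_mask` and `bidirectional_mask` and the example words are assumptions chosen for illustration.

```python
def causal_mask(n):
    """Decoder-style mask: position i may look at itself and earlier positions only."""
    return [[1 if j <= i else 0 for j in range(n)] for i in range(n)]


def bidirectional_mask(n):
    """Encoder-style mask: every position may look at every other position."""
    return [[1] * n for _ in range(n)]


# Hypothetical three-word input, used only to show which words are visible.
tokens = ["print", "the", "sum"]
masks = {
    "decoder (causal)": causal_mask(len(tokens)),
    "encoder (bidirectional)": bidirectional_mask(len(tokens)),
}
for name, mask in masks.items():
    print(name)
    for token, row in zip(tokens, mask):
        visible = [t for t, allowed in zip(tokens, row) if allowed]
        print(f"  {token!r} can use {visible}")
```

In the causal case, the first word sees only itself, while in the bidirectional case every word sees the whole input. Real models apply such masks inside the attention computation, which this sketch leaves out.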

When the encoder and decoder are combined, as in translation models, the decoder can draw on the representation the encoder has built of the complete input, e.g., a whole sentence, in addition to its own input, e.g., a single word.

Similar to translations, programming contests can be thought of as a sequence-to-sequence translation task: For a problem description X in natural language, create a solution in a programming language Y.

Deepmind, therefore, uses a Transformer-based encoder-decoder model for AlphaCode, with adjustments to the model and training methods. Salesforce's CodeT5 also relies on such a model.

AlphaCode is four times larger than Codex

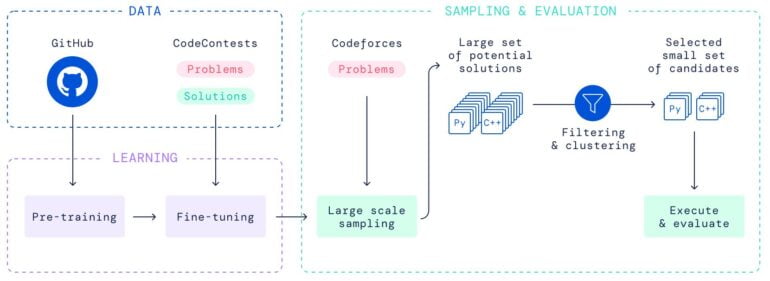

Deepmind trained AlphaCode with nearly 715 gigabytes of code samples from GitHub in the programming languages C++, C#, Go, Java, JavaScript, Lua, PHP, Python, Ruby, Rust, Scala, and TypeScript.

The largest AlphaCode model has 41.4 billion parameters. By comparison, OpenAI's Codex has just under 12 billion parameters.

Deepmind then additionally trained AlphaCode with CodeContests, a dataset of problems, solutions, and tests from Codeforces competitions compiled by Deepmind.

After training, AlphaCode's encoder processes problem descriptions, and the decoder generates lines of code. For each problem, AlphaCode can generate millions of possible solutions - a large part of which, however, do not work.

Deepmind filters the numerous possible solutions with sample tests provided in the problem descriptions. However, this process still leaves thousands of possible program candidates.

Therefore, AlphaCode employs a second, smaller model that generates additional tests. The remaining program candidates are then grouped so that programs producing identical outputs on the generated tests end up in the same group. From these groups, AlphaCode subsequently selects ten solutions for a problem description.
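The filter-then-group idea can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not Deepmind's implementation: candidates are plain Python functions rather than generated source code, the helper names (`passes_examples`, `behaviour`, `select_submissions`) are hypothetical, and taking one program from each group, largest group first, is an assumed selection rule that the description above does not spell out.

```python
from collections import defaultdict


def passes_examples(program, examples):
    """Keep a candidate only if it reproduces every sample test from the problem."""
    for given, expected in examples:
        try:
            if program(given) != expected:
                return False
        except Exception:
            return False
    return True


def behaviour(program, generated_inputs):
    """Fingerprint a candidate by its outputs on the extra, generated inputs."""
    outputs = []
    for value in generated_inputs:
        try:
            outputs.append(repr(program(value)))
        except Exception:
            outputs.append("<error>")
    return tuple(outputs)


def select_submissions(candidates, examples, generated_inputs, limit=10):
    """Filter by sample tests, group by identical behaviour, pick one per group."""
    survivors = [p for p in candidates if passes_examples(p, examples)]
    groups = defaultdict(list)
    for program in survivors:
        groups[behaviour(program, generated_inputs)].append(program)
    # Assumption: larger groups are tried first, one representative each.
    ordered = sorted(groups.values(), key=len, reverse=True)
    return [group[0] for group in ordered[:limit]]


# Toy problem: "double the input". Five hypothetical candidates, some of them wrong.
candidates = [
    lambda x: x * 2,
    lambda x: x + x,
    lambda x: x ** 2,
    lambda x: x + 2,
    lambda x: 2 * x if x < 100 else 0,
]
examples = [(2, 4), (0, 0)]      # sample tests from the problem statement
generated_inputs = [3, 1000]     # extra inputs; the correct outputs are unknown
picks = select_submissions(candidates, examples, generated_inputs)
print(len(picks), "submissions selected")
```

Note that the generated tests come without known correct answers, so they cannot rule out wrong programs. They only reveal which candidates behave alike, which keeps the small number of submissions from being spent on near-duplicates.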

Deepmind's AlphaCode programs at the human level

Using this method, AlphaCode solved 34.2 percent of all problems in the CodeContests dataset. In ten recent Codeforces competitions with more than 5,000 participants, AlphaCode achieved an average ranking in the top 54.3 percent. This puts AlphaCode ahead of nearly 46 percent of human participants. According to the AlphaCode team, its newest AI is the first to reach this level.

An examination also shows no evidence that AlphaCode copies lines of code from the training data set. This demonstrates that the AI model can indeed solve new problems it has never seen before - even if those problems require significant thinking.

AlphaCode does not write perfect code, however: Deepmind says the AI struggles with C++ in particular. The system is expected to spur further research, and Deepmind also plans improvements to AlphaCode.

Those who want deeper insights into AlphaCode can explore the model on the AlphaCode website. More information is available on Deepmind's blog.