Apple released OpenELM, a set of open-source language models optimized for efficiency and better performance with less training data.

OpenELM (Open-source Efficient Language Models) is a family of open-source language models with up to three billion parameters. The models use a layer-wise scaling strategy that more efficiently distributes parameters within the transformer model layers, the researchers write. As a result, OpenELM achieves higher accuracy than comparable models.
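To make the idea concrete, here is a minimal Python sketch of layer-wise scaling. It assumes the scheme interpolates two multipliers linearly with depth, so early layers get fewer attention heads and narrower feed-forward blocks than later ones. The function name `layerwise_scaling`, its parameters, and the default ranges are illustrative assumptions, not Apple's code, and the sketch skips details such as rounding dimensions to hardware-friendly multiples.

```python
def layerwise_scaling(num_layers, d_model, head_dim,
                      alpha=(0.5, 1.0), beta=(0.5, 4.0)):
    """Return a (num_heads, ffn_dim) pair for each transformer layer.

    alpha scales the attention width and beta scales the feed-forward
    width. Both ranges are hypothetical defaults chosen for illustration.
    """
    alpha_min, alpha_max = alpha
    beta_min, beta_max = beta
    configs = []
    for i in range(num_layers):
        # Relative depth of layer i, from 0.0 (first) to 1.0 (last).
        t = i / (num_layers - 1) if num_layers > 1 else 0.0
        a = alpha_min + (alpha_max - alpha_min) * t
        b = beta_min + (beta_max - beta_min) * t
        num_heads = max(1, round(a * d_model / head_dim))
        ffn_dim = round(b * d_model)
        configs.append((num_heads, ffn_dim))
    return configs


if __name__ == "__main__":
    for layer, (heads, ffn) in enumerate(layerwise_scaling(4, 1024, 64)):
        print(f"layer {layer}: heads={heads}, ffn_dim={ffn}")
```

A uniform transformer would use the same head count and feed-forward width in every layer. Here the same overall parameter budget is spread unevenly, which is the property the researchers credit for the accuracy gain.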

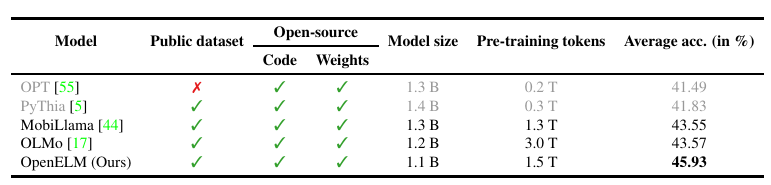

The OpenELM model with 1.1 billion parameters outperforms the Allen Institute for AI's OLMo model with 1.2 billion parameters by 2.36 percent, despite using half as many tokens for pre-training. Simply put, OpenELM achieves slightly better performance with less data and compute.

The OpenELM models come in four sizes: 270 million, 450 million, 1.1 billion, and 3 billion parameters. Each size is also available in an instruction-tuned version. The models are available on GitHub and Hugging Face.

Apple provides the entire training and fine-tuning framework as open source. This includes the training logs, multiple checkpoints, and pre-training configurations. In addition, Apple is releasing code to convert the models to the MLX library, enabling inference and fine-tuning on Apple devices.

For training, the tech company used publicly available datasets: RefinedWeb, a deduplicated version of The Pile, and subsets of RedPajama and Dolma v1.6. In total, the training dataset contains approximately 1.8 trillion tokens.

Safe, private, on-device

OpenELM is likely another building block in Apple's AI strategy, which focuses on privacy, efficiency, and control, with generative AI primarily on the device.

This could mean improvements to the Siri voice assistant or new generative AI features in apps like Mail or News. Apple wants to show that it can build leading AI systems without exploiting user data.

For advanced cloud AI applications, Apple could partner with Google, OpenAI, and others. Details of Apple's generative AI strategy are expected to be announced at its WWDC developer conference, which begins June 10.
