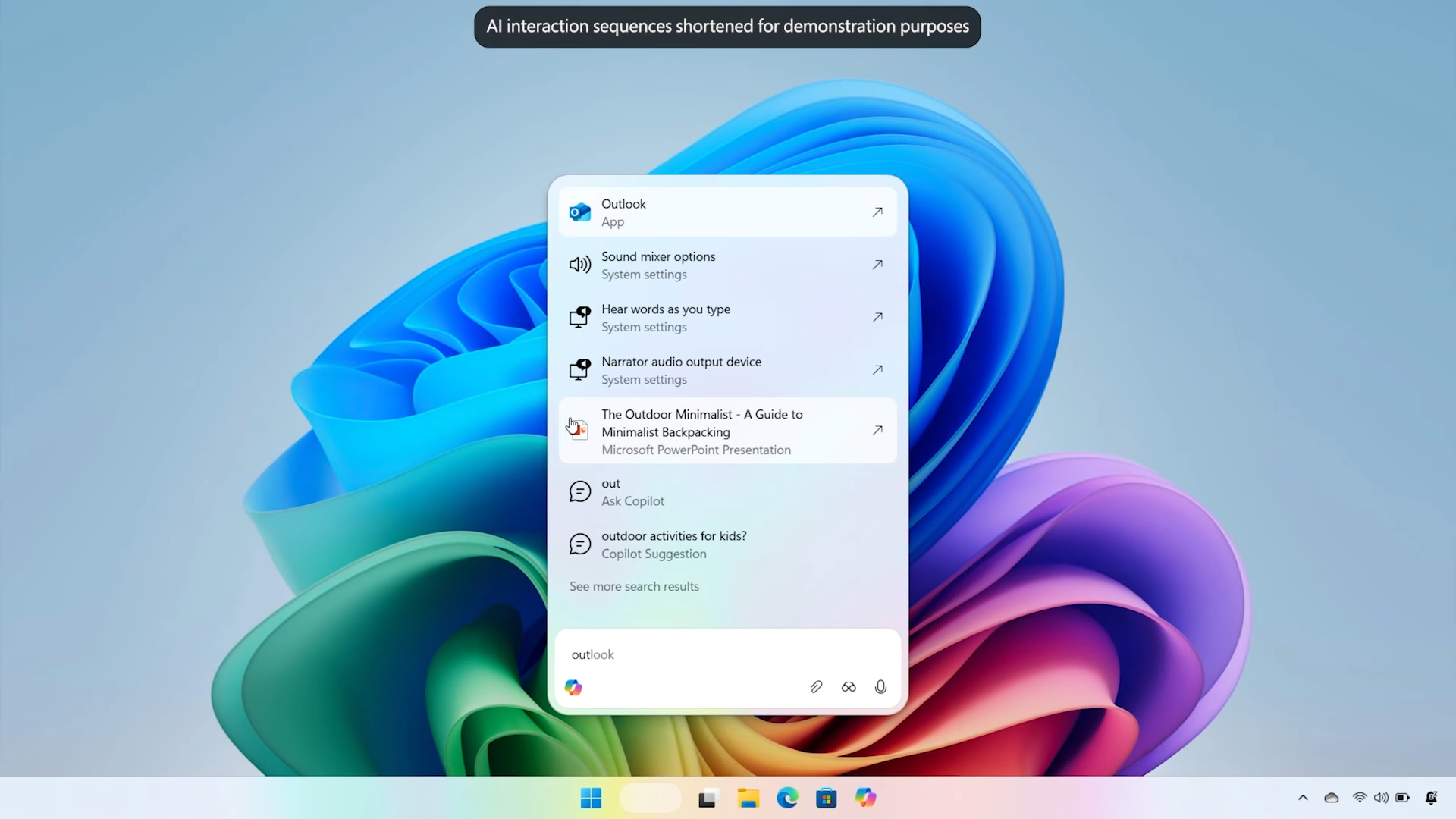

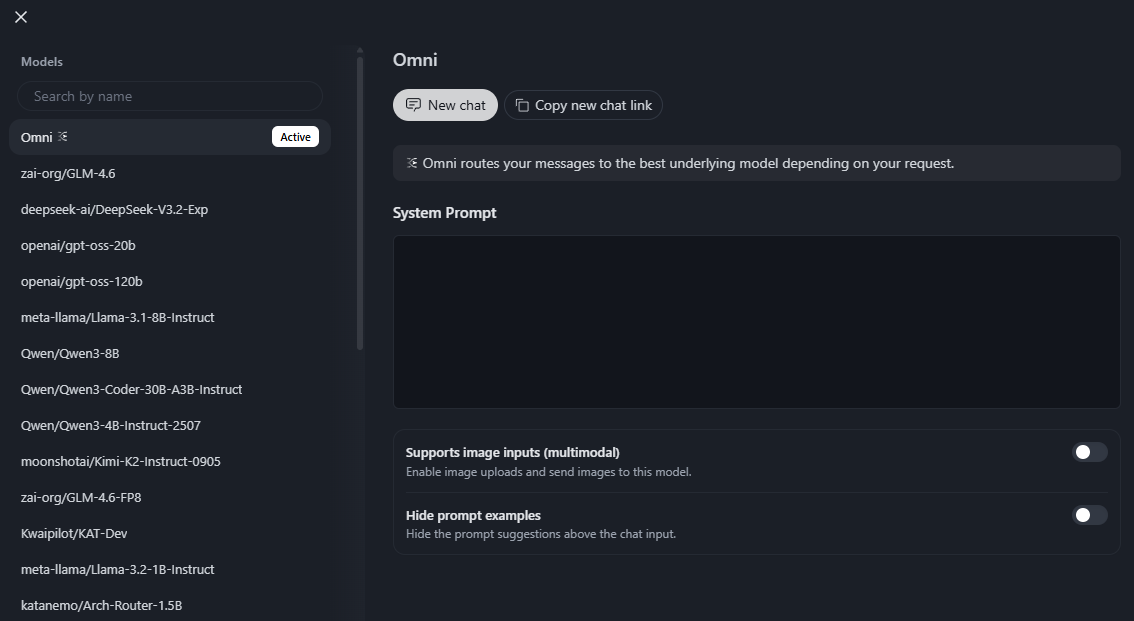

Hugging Face has launched HuggingChat Omni, an AI router that selects the best open-source model for each user prompt from more than 100 available models. The system automatically chooses the fastest, cheapest, or most suitable model for each task, an approach similar to the new GPT-5 router. Supported models include gpt-oss, Qwen, DeepSeek, Kimi, and SmolLM.

Hugging Face co-founder Clément Delangue says HuggingChat Omni is just the beginning. The platform already offers access to more than two million open models, spanning not only text, but also images, audio, video, biology, chemistry, time series, and more.

The routing system is built on Arch-Router-1.5B from Katanemo, a lightweight 1.5-billion-parameter model that classifies queries by topic and action. Katanemo claims Arch-Router outperforms other models at matching human preferences, and the model is fully open source. Details are available in the research paper on arXiv.
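To make the idea of routing by topic and action concrete, here is a minimal Python sketch. It is not HuggingChat Omni's or Arch-Router's actual code: the keyword-based `classify` function is a deliberately crude stand-in for the 1.5B classifier, and every route, keyword, and model name below is hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class Route:
    topic: str   # what the prompt is about
    action: str  # what the user wants done
    model: str   # hypothetical model identifier to dispatch to


# Hypothetical routing policy: (topic, action) pairs mapped to models.
ROUTES = [
    Route("code", "generate", "hypothetical/coder-model"),
    Route("code", "explain", "hypothetical/small-model"),
    Route("math", "solve", "hypothetical/reasoning-model"),
]
DEFAULT_MODEL = "hypothetical/general-model"

# Assumption: keyword tables stand in for the learned classifier.
TOPIC_KEYWORDS = {
    "code": ("python", "function", "bug"),
    "math": ("integral", "equation", "prove"),
}
ACTION_KEYWORDS = {
    "generate": ("write", "create"),
    "explain": ("explain", "why"),
    "solve": ("solve", "compute"),
}


def _first_match(text: str, table: dict) -> Optional[str]:
    """Return the first label whose keywords appear in the text, else None."""
    lowered = text.lower()
    for label, keywords in table.items():
        if any(keyword in lowered for keyword in keywords):
            return label
    return None


def classify(prompt: str) -> Tuple[Optional[str], Optional[str]]:
    """Label a prompt with a (topic, action) pair; either may be None."""
    return _first_match(prompt, TOPIC_KEYWORDS), _first_match(prompt, ACTION_KEYWORDS)


def pick_model(prompt: str) -> str:
    """Choose the model whose route matches the prompt, or fall back to the default."""
    topic, action = classify(prompt)
    for route in ROUTES:
        if route.topic == topic and route.action == action:
            return route.model
    return DEFAULT_MODEL


if __name__ == "__main__":
    for prompt in ("Write a Python function to reverse a list", "Tell me a joke"):
        print(prompt, "->", pick_model(prompt))
```

The point of the design is that the classifier and the routing policy are separate: the table of routes can be edited or extended with new models without retraining whatever produces the topic and action labels.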