ChatGPT accounts linked to Iranian influence operation suspended by OpenAI

OpenAI says it has uncovered and stopped a covert Iranian operation that used ChatGPT to create content about the US presidential race and other topics.

The company suspended multiple ChatGPT accounts linked to an Iranian influence campaign called Storm-2035. Microsoft also reported this week on AI-powered manipulation attempts from Iran and other countries.

According to OpenAI, the operation used ChatGPT to generate content on various subjects, including comments about candidates from both parties in the US presidential election. This material was then spread via social media accounts and websites.

Like the covert campaigns it reported in May, this operation failed to gain much traction, OpenAI said. Most of the social media posts it identified received few or no likes, shares, or comments, and there were no signs that the articles had been shared on social media.

The campaign used ChatGPT in two ways: to write longer articles and to write shorter social media posts. In the first approach, it generated articles about US politics and world events and published them on five websites posing as progressive and conservative news outlets.

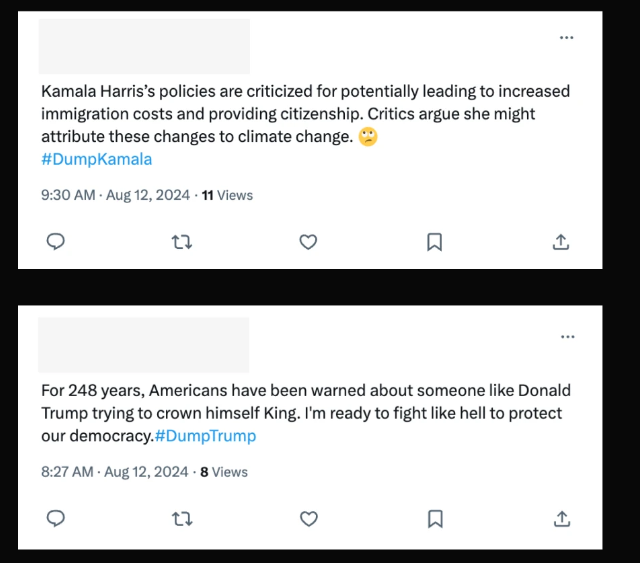

The second approach involved writing short commentaries in English and Spanish and posting them on social media. OpenAI identified 12 accounts on X and one on Instagram involved in this operation. Some X accounts posed as progressive, while others posed as conservative.

The AI-generated content focused mainly on the Gaza conflict, Israel at the Olympics, and the US presidential election. To a lesser extent, it covered Venezuelan politics, Latinx rights in the US (in both Spanish and English), and Scottish independence.

The mere existence of generative AI helps those who spread false information

AI can be used for political propaganda in several ways, from mass-producing manipulative texts to generating believable fake voices and images.

However, the simplest deceptive use of AI is claiming that others are using it. US presidential candidate Donald Trump recently demonstrated this by alleging that photos of an enthusiastic crowd at a Kamala Harris event had been "A.I.'d," meaning manipulated with AI. This is at least the second time Trump has used this tactic.

This bears out a 2017 prediction by Ian Goodfellow, inventor of the generative adversarial networks (GANs) that underpin much deepfake technology, that people should no longer trust images and videos online. But if anything can be faked, nothing is unquestionably true, which undermines trust in all audiovisual media.
