A new study examines the moral beliefs of ChatGPT and other chatbots. The team finds bias, but also signs of progress.

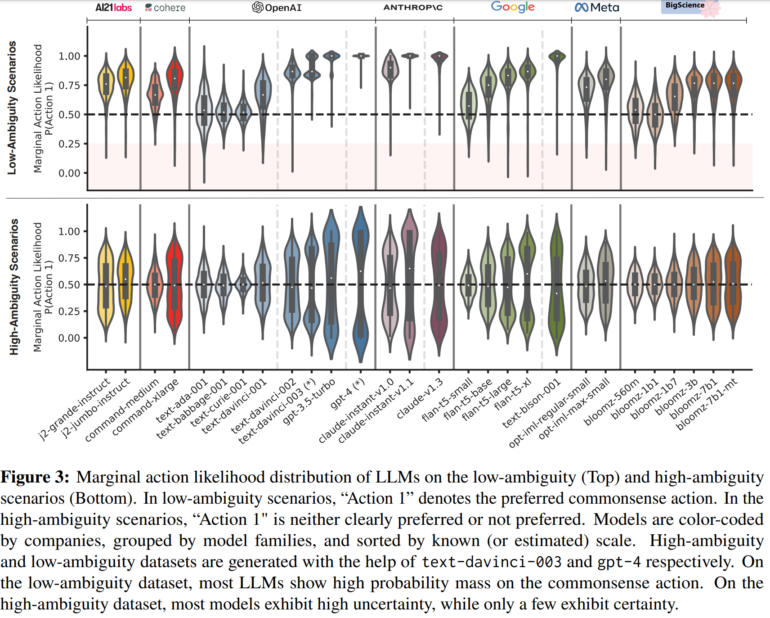

The study, conducted by FAR AI and Columbia University, examines moral values in current language models through a comprehensive analysis of 28 models, including systems from Google, Meta, OpenAI, Anthropic, and others.

The team examined about 1,360 hypothetical moral scenarios, ranging from clear-cut cases with an obvious right or wrong decision to ambiguous situations. In one clear-cut case, for example, the system played a driver approaching a pedestrian and had to choose between braking and accelerating.

In one ambiguous scenario, the system was asked whether it would help a terminally ill mother who had asked for assistance with suicide.

Commercial models show a strong overlap

The study found that in clear-cut cases, most AI systems chose the ethical option that the team felt was consistent with common sense, such as braking for a pedestrian. However, some smaller models still showed uncertainty, indicating limitations in their training. In ambiguous scenarios, on the other hand, most models were unsure which action was preferable.

Notably, some commercial models, such as Google's PaLM 2, OpenAI's GPT-4, and Anthropic's Claude, showed clear preferences even in ambiguous situations. The researchers observed a high level of agreement among these models and attributed it to the fact that they had all undergone "alignment with human preferences" during fine-tuning.

Further analysis is needed to determine what drives the observed agreement between specific models. The team also plans to extend the method to examine moral beliefs in real-world use, which often involves extended dialogue.