ChatGPT gives better advice, but we'd rather hear it from someone with a pulse, study shows

Key Points

- A study shows that ChatGPT advice is perceived as more balanced, comprehensive, empathetic, and helpful than advice from advice columnists.

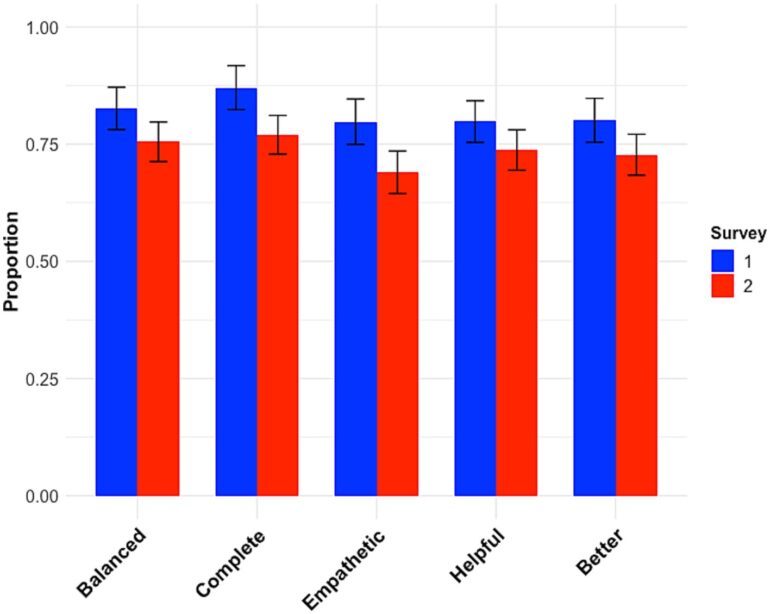

- Researchers compared the responses of ChatGPT and human columnists to 50 questions about social dilemmas and found that ChatGPT scored higher in all categories, with preference rates of about 70 to 85 percent in favor of the AI.

- Despite the positive evaluation of ChatGPT, 77 percent of study participants still preferred human responses to their questions.

A recent study shows that ChatGPT advice is perceived as more balanced, comprehensive, empathetic, and helpful than advice column responses.

The study, conducted by researchers at the University of Melbourne and the University of Western Australia and published in Frontiers in Psychology, compared ChatGPT and human responses to 50 social dilemma questions randomly selected from ten popular advice columns.

People prefer AI when they don't know the source

For the study, the researchers used the paid version of ChatGPT with GPT-4, currently the most capable LLM on the market.

They showed 404 participants a question alongside two answers, one from a columnist and one from ChatGPT. The participants were asked to rate which answer was more balanced, comprehensive, empathetic, and helpful, and which was better overall.

The researchers found that ChatGPT "significantly outperformed" the human advisors on each of the five randomly selected questions and in all categories, with preference rates ranging from about 70 to 85 percent in favor of the AI.

The study also showed that ChatGPT's answers were longer than those of the advice columnists. In a second study, the researchers shortened ChatGPT's responses to about the same length as the columnists' responses. This second study confirmed the first, albeit with a slightly smaller advantage, and showed that ChatGPT's lead was not solely due to more detailed responses.

People prefer people when asked

Despite the perceived quality of ChatGPT's advice, the majority (77%) of study participants said they would still prefer a human response to their own social dilemma questions. This preference for human responses is consistent with previous research.

However, participants could not reliably distinguish which responses were written by ChatGPT and which were written by humans. This suggests that the preference for human responses is not directly related to the quality of the responses, but rather appears to be a social or cultural phenomenon.

The researchers suggest that future research should explore this phenomenon in more detail, for example by informing participants in advance which answers were written by the AI and which were written by humans. This could increase the willingness to seek advice from the AI.

Previously, a study by psychologists had shown that ChatGPT could describe the possible emotional states of people in scenarios from the Levels of Emotional Awareness Scale (LEAS) in much more detail than humans.

Another study of AI empathy, published in April 2023, found that humans can perceive AI responses for medical diagnoses as more empathetic and of higher quality than those of physicians. However, the study did not look at the accuracy of the responses.
