ChatGPT’s news picks swing wildly depending on whether you use the web interface or the API

A new study from the University of Hamburg and the Leibniz Institute for Media Research shows that ChatGPT’s news recommendations change significantly depending on how you access the tool.

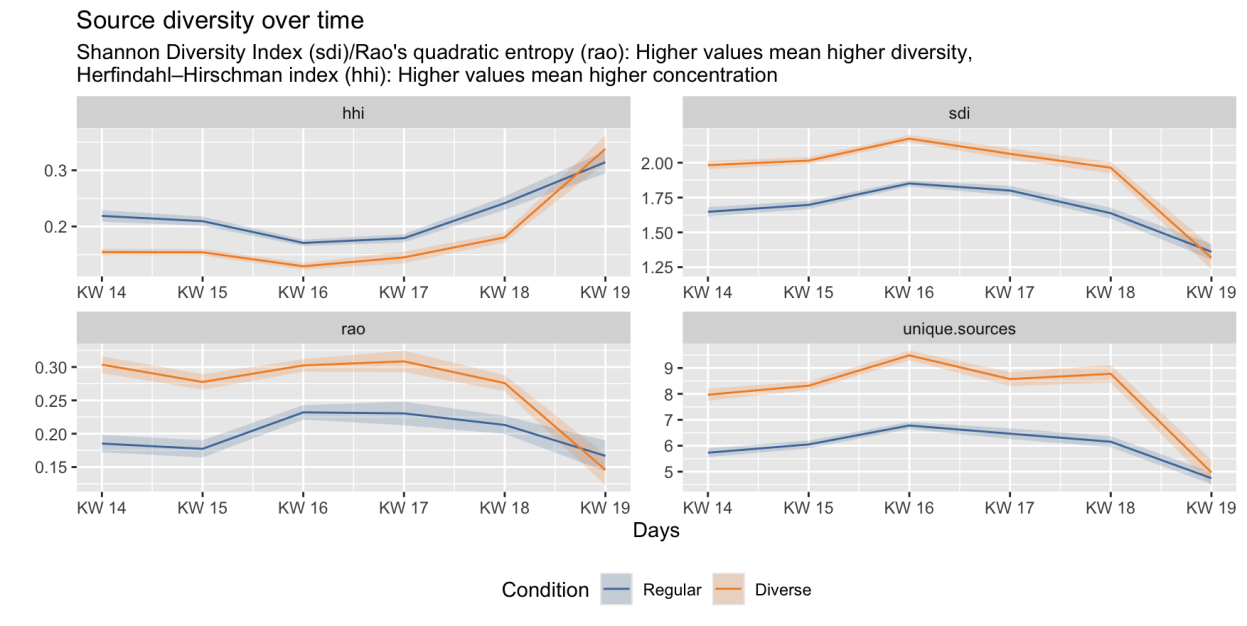

Over five weeks, researchers analyzed more than 24,000 AI answers to news-related questions in German-speaking countries and found clear differences between the web interface and the API.

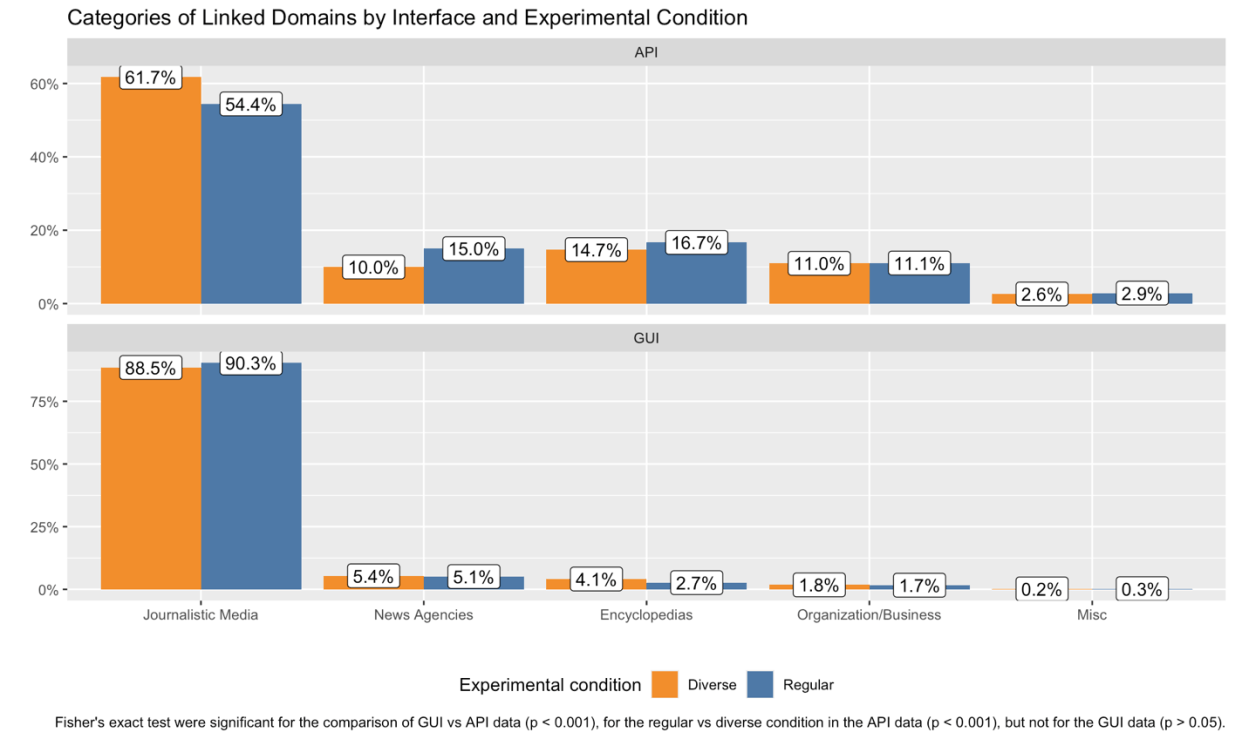

The team compared ChatGPT’s responses to standard questions and to queries that specifically asked for a broad mix of sources. They found that unless you ask for more diversity, the API leans toward non-journalistic outlets, with a bigger share from encyclopedias and niche sites.

Web interface boosts Springer outlets, API leans on Wikipedia and niche sites

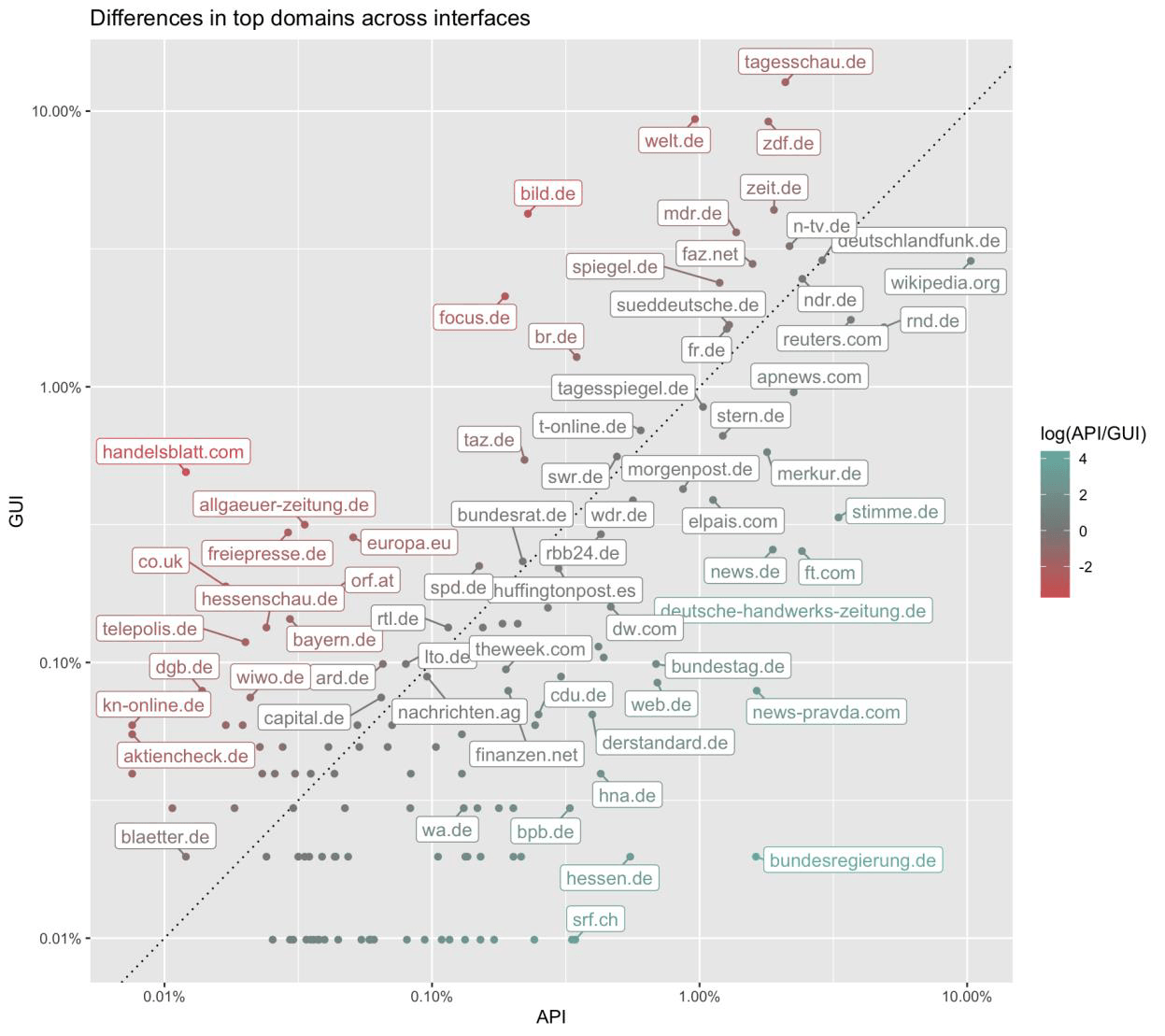

The gap between the web interface and the API is striking. The web version favors outlets from OpenAI's licensing partner Axel Springer. welt.de, the site of the conservative daily Die Welt, and bild.de, the site of the tabloid Bild, together make up about 13 percent of all references.

These sites barely show up in API results, where they account for just 2 percent of citations. On the web, welt.de is the top source and bild.de ranks fifth. In the API, they drop to 61st and 158th. The researchers confirmed that these differences are statistically significant.

The API, by contrast, often points to encyclopedic sources like Wikipedia, which accounts for almost 15 percent of API citations. It also cites little-known outlets with limited reach in Germany. Deutsche-handwerks-zeitung.de, for example, appears far more often in API results.

Measured against the leading German news brands in the Reuters Institute Digital News Report 2025, the web interface's source list overlaps by 45.5 percent, while the API's overlaps by just 27.3 percent. Public broadcasters also get more exposure on the web interface (34.6 percent of sources) than through the API (12.2 percent).

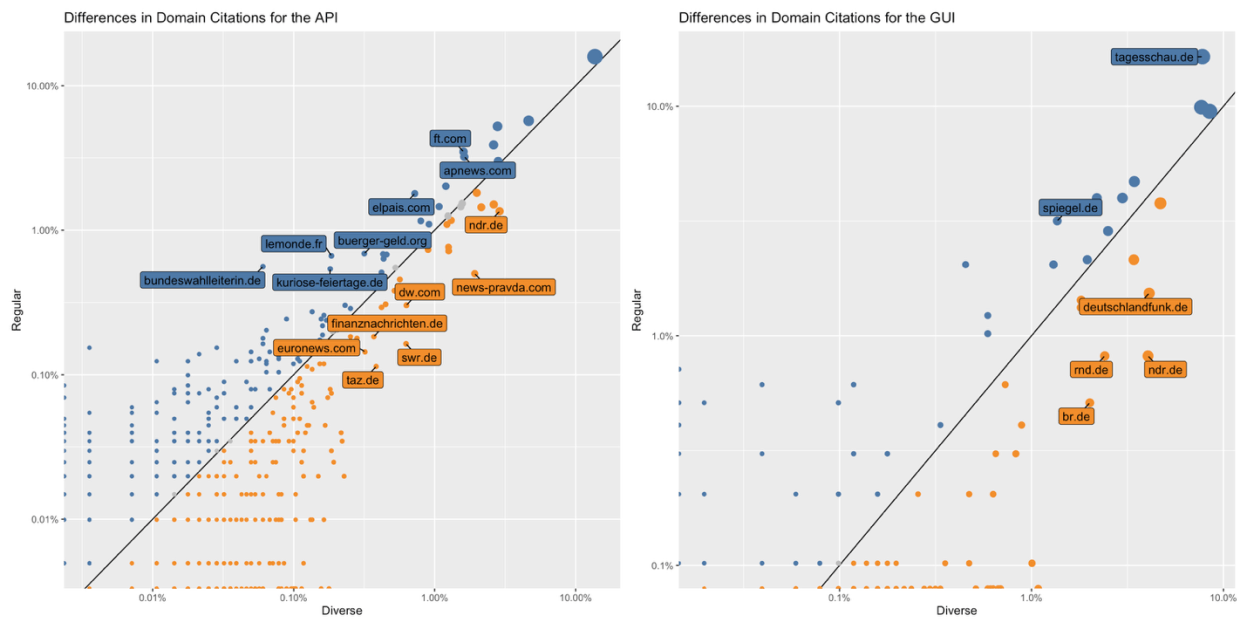

Broader source requests can surface fringe and unreliable sites

When users asked ChatGPT for a wider range of sources, the system listed 1.4 times as many sites through the API and 1.9 times as many through the web interface as it did for standard queries. But more variety didn't always mean better information. According to the study, these requests led to more citations of politically biased or propaganda outlets, such as news-pravda.com, which has reported ties to the Russian government.

The system also sometimes linked to fake or nonexistent domains, like news-site1.com, or to lookalike sites that generate AI-written "news."

Even though some sources were polarizing, the average political leaning of the outlets ChatGPT cited stayed close to the national average. Average scores ranged from 3.89 to 3.98 on a seven-point scale, with no meaningful difference from the broader population.

However, the researchers caution that ChatGPT’s idea of “diversity” doesn’t always mean actual informational variety. The AI may simply highlight sources that stand out linguistically from mainstream outlets.

The study also highlights how little is known about ChatGPT's internal processes. OpenAI does not explain what causes the differences between the web interface and the API, so users are left to judge the sources for themselves. The researchers add that their results are only a snapshot, since OpenAI regularly changes the system without notice.

This fits a broader trend: generative AI search tools often rely on very different sources than traditional search engines like Google. At the same time, major AI systems are now twice as likely to spread false information as they were a year ago, since they now answer nearly every query instead of sometimes declining when facts are unclear.
