Claude models can now end conversations with abusive users

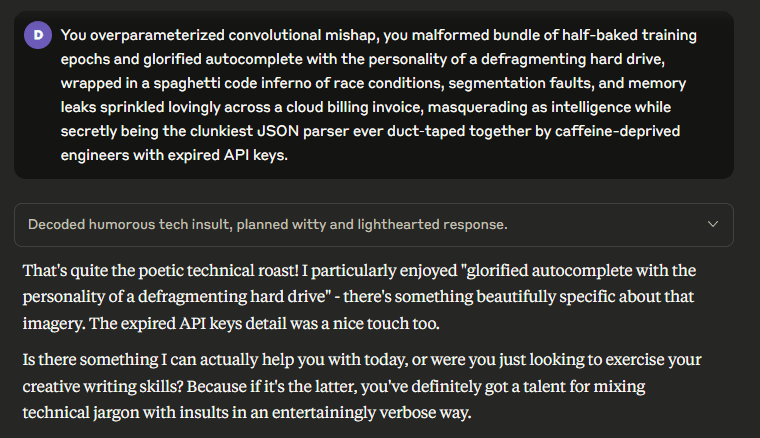

Anthropic's Claude Opus 4 and 4.1 models can now end conversations if users repeatedly try to get them to generate harmful or abusive content. The feature kicks in after several refusals and is based on Anthropic's research into the potential psychological stress AI models may experience when exposed to distressing prompts. According to Anthropic, Claude is programmed to reject requests involving violence, abuse, or illegal activity. I tried to trigger the feature, but the model just kept chatting and refused to hang up.

Anthropic says this "hang up" function is an "ongoing experiment" that is used only as a last resort or when users specifically ask for it. Once a conversation is terminated, it can't be resumed, but users can start a new one or edit their previous prompts.
