- Code Llama 70B is available

Update from January 29, 2024:

Meta has just released the latest version of Code Llama. Code Llama 70B is a powerful open-source LLM for code generation. It is available in two variants, CodeLlama-70B-Python and CodeLlama-70B-Instruct. Meta says it is suitable for both research and commercial projects, and the usual Llama licenses apply.

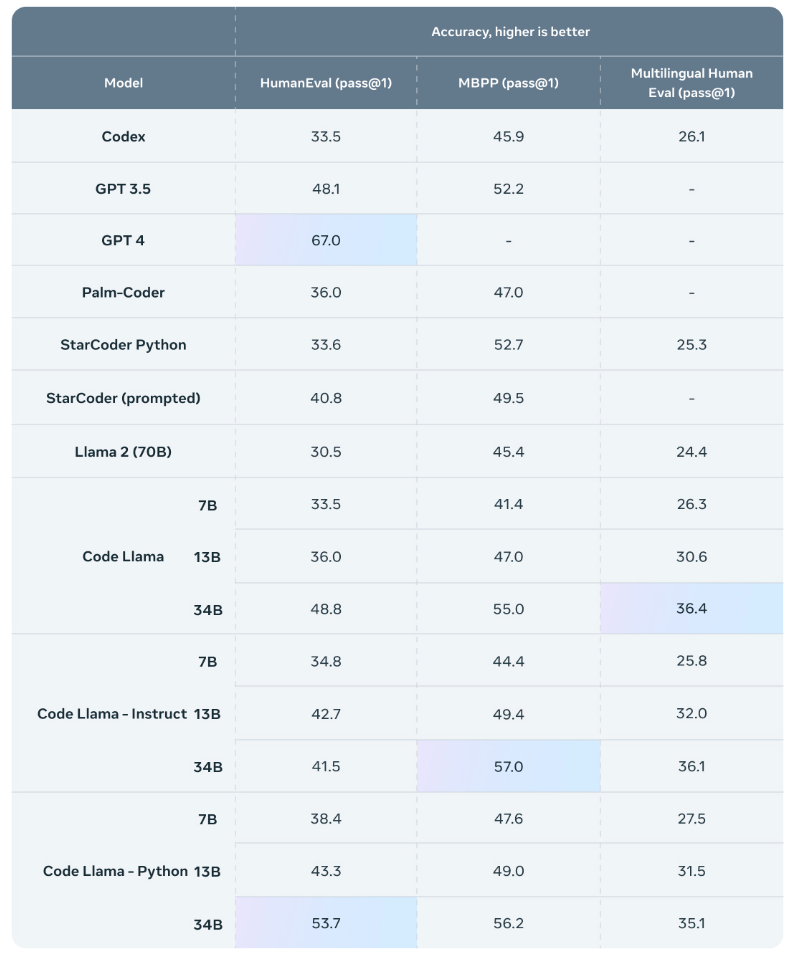

The new 70B Instruct version scored 67.8 on HumanEval, just ahead of GPT-4 and Gemini Pro for prompts without examples (zero-shot). The first version of Code Llama achieved up to 48.8 points.

According to Meta, Code Llama's performance makes it an ideal basis for refining code generation models and should advance the open-source community as a whole. This seems likely since the 34B version of Code Llama has already been significantly improved by the open-source community and brought up to GPT-4 levels. Code Llama 70B could have even more room for improvement.

Code Llama 70B and the other Llama models are available from Meta on request. More information is available on GitHub.

Original article from August 24, 2023:

Code Llama is Meta's refined Llama 2 variant for code generation.

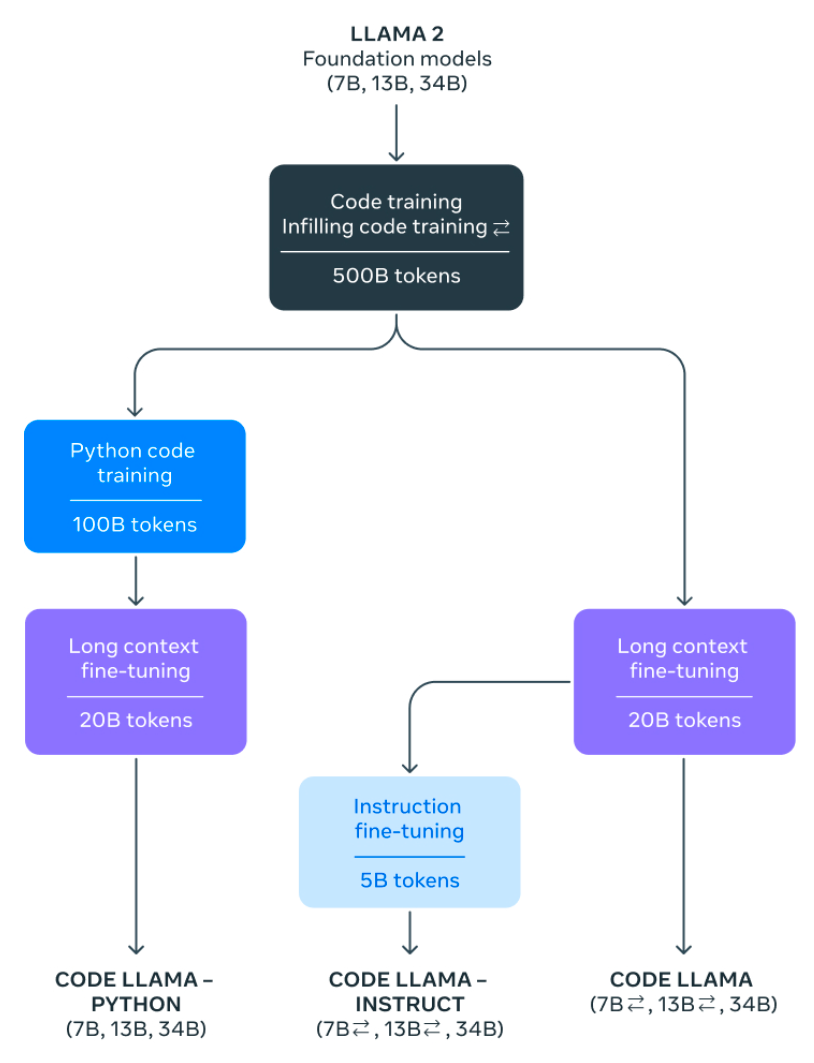

According to Meta, Code Llama is an evolution of Llama 2 that has been further trained on 500 billion tokens of code and code-related data from Llama 2's code-specific datasets. To train Code Llama, Meta used more code data over a longer training period.

Compared to Llama 2, Code Llama has enhanced programming capabilities and can, for example, generate appropriate code in response to a natural language prompt such as "Write me a function that outputs the Fibonacci sequence." Similar to GitHub Copilot, it can also complete and debug code.
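To illustrate the kind of answer such a prompt is aiming for, here is a minimal hand-written sketch of a Fibonacci function. It is not actual Code Llama output; the function name `fibonacci` and the choice to return the first `n` numbers as a list are assumptions made for this example.

```python
def fibonacci(n: int) -> list[int]:
    """Return the first n numbers of the Fibonacci sequence, starting at 0."""
    if n < 0:
        raise ValueError("n must be non-negative")
    sequence = []
    a, b = 0, 1
    for _ in range(n):
        sequence.append(a)
        # Advance the pair: the next number is the sum of the previous two.
        a, b = b, a + b
    return sequence


if __name__ == "__main__":
    print(fibonacci(10))  # [0, 1, 1, 2, 3, 5, 8, 13, 21, 34]
```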


Code Llama supports popular programming languages such as Python, C++, Java, PHP, TypeScript, C#, Bash and others.

Three models and two variants

Meta releases Code Llama in three sizes with 7 billion, 13 billion and 34 billion parameters. The context window is unusually large at 100,000 tokens, which makes the model especially interesting for processing large amounts of code at once.

"When developers need to debug a large chunk of code, they can pass the entire code length to the model," Meta AI writes.
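Whether a given chunk of code actually fits into that window depends on the tokenizer. As a rough back-of-the-envelope sketch, one could estimate it as follows. The ratio of four characters per token and the 2,000 tokens reserved for the model's answer are assumptions for illustration only, not figures from Meta; a real check would count tokens with the model's own tokenizer.

```python
import math

CONTEXT_WINDOW_TOKENS = 100_000  # context size quoted in the article
CHARS_PER_TOKEN = 4              # assumption: crude heuristic, not a measured value


def estimate_tokens(text: str, chars_per_token: int = CHARS_PER_TOKEN) -> int:
    """Roughly estimate the token count of a string from its character length."""
    return math.ceil(len(text) / chars_per_token)


def fits_in_context(
    sources: list[str],
    reserved_for_output: int = 2_000,  # assumption: room left for the answer
    window: int = CONTEXT_WINDOW_TOKENS,
) -> bool:
    """Check whether all source files plus the reserved output fit the window."""
    total = sum(estimate_tokens(source) for source in sources)
    return total + reserved_for_output <= window
```

With these assumptions, roughly 390,000 characters of source code would fit in a single prompt; the real limit may be noticeably lower or higher depending on the code.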

The 34-billion-parameter variant is said to provide the highest code quality, making it suitable as a code assistant. The smaller models are optimized for real-time code completion. They have lower latency and are trained with fill-in-the-middle (FIM) capability by default, meaning they can insert code between an existing beginning and end.
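The idea behind fill-in-the-middle can be sketched in a few lines: the code before and after the cursor is wrapped in sentinel markers, and the model is asked to generate the missing part. The helper names and the default sentinel strings `<PRE>`, `<SUF>` and `<MID>` below are placeholders assumed for this example; the exact markers and spacing a given model expects should be taken from its documentation.

```python
def split_at_cursor(code: str, cursor: int) -> tuple[str, str]:
    """Split source code into the part before and the part after the cursor."""
    return code[:cursor], code[cursor:]


def build_fim_prompt(
    prefix: str,
    suffix: str,
    pre: str = "<PRE>",  # assumed placeholder sentinel
    suf: str = "<SUF>",  # assumed placeholder sentinel
    mid: str = "<MID>",  # assumed placeholder sentinel
) -> str:
    """Assemble an infilling prompt: prefix, then suffix, then a marker
    after which the model is expected to write the missing middle."""
    return f"{pre} {prefix} {suf}{suffix} {mid}"


if __name__ == "__main__":
    code = "def add(a, b):\n    \n    return result\n"
    cursor = code.index("\n    return")  # pretend the cursor sits on the empty line
    prefix, suffix = split_at_cursor(code, cursor)
    print(build_fim_prompt(prefix, suffix))
```

The model's completion would then be inserted between `prefix` and `suffix`, which is what allows an editor plugin to complete code in the middle of a file rather than only at the end.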

In addition, Meta releases a Code Llama variant optimized for Python and trained with an additional 100 billion Python code tokens, as well as an Instruct variant optimized with code tasks and their sample solutions. This version is recommended by Meta for code generation because it is the best at following instructions.

Code Llama outperforms other open-source models, but GPT-4 stays ahead

In the HumanEval and Mostly Basic Python Programming (MBPP) benchmarks, Code Llama 34B achieves results on par with GPT-3.5, but is far behind GPT-4 on HumanEval. Code Llama outperforms Llama 2, which is not optimized for code, as well as the other open-source models tested.

Meta releases Code Llama on GitHub under the same license as Llama 2. The model and the content it generates can be used for scientific and commercial purposes.

The Open Source Initiative criticized Meta for marketing the models as open source, saying the license restricts commercial use and certain areas of application and thus does not fully meet the open-source definition.