DALL-E 2 and similar AI systems create authentic-looking images. Can you still tell the difference between human-made art and machine-made art?

AI research and industry have made great strides in developing multimodal AI models in the past year. OpenAI's DALL-E showed in early 2021 where the journey was heading: AI that generates hypnotic, surreal or photorealistic images, or any other desired motif, in response to text input.

About a year later, these advances in multimodal models enabled the development of OpenAI's DALL-E 2. The AI far surpasses its predecessor, consistently generating stunning images in many styles and on many topics.

In late May, Google unveiled Imagen, a generative image AI that outperforms DALL-E 2 in some areas. In both cases, all it takes is a short text input, and two minutes later the image is ready.

AI art: can humans still tell the difference?

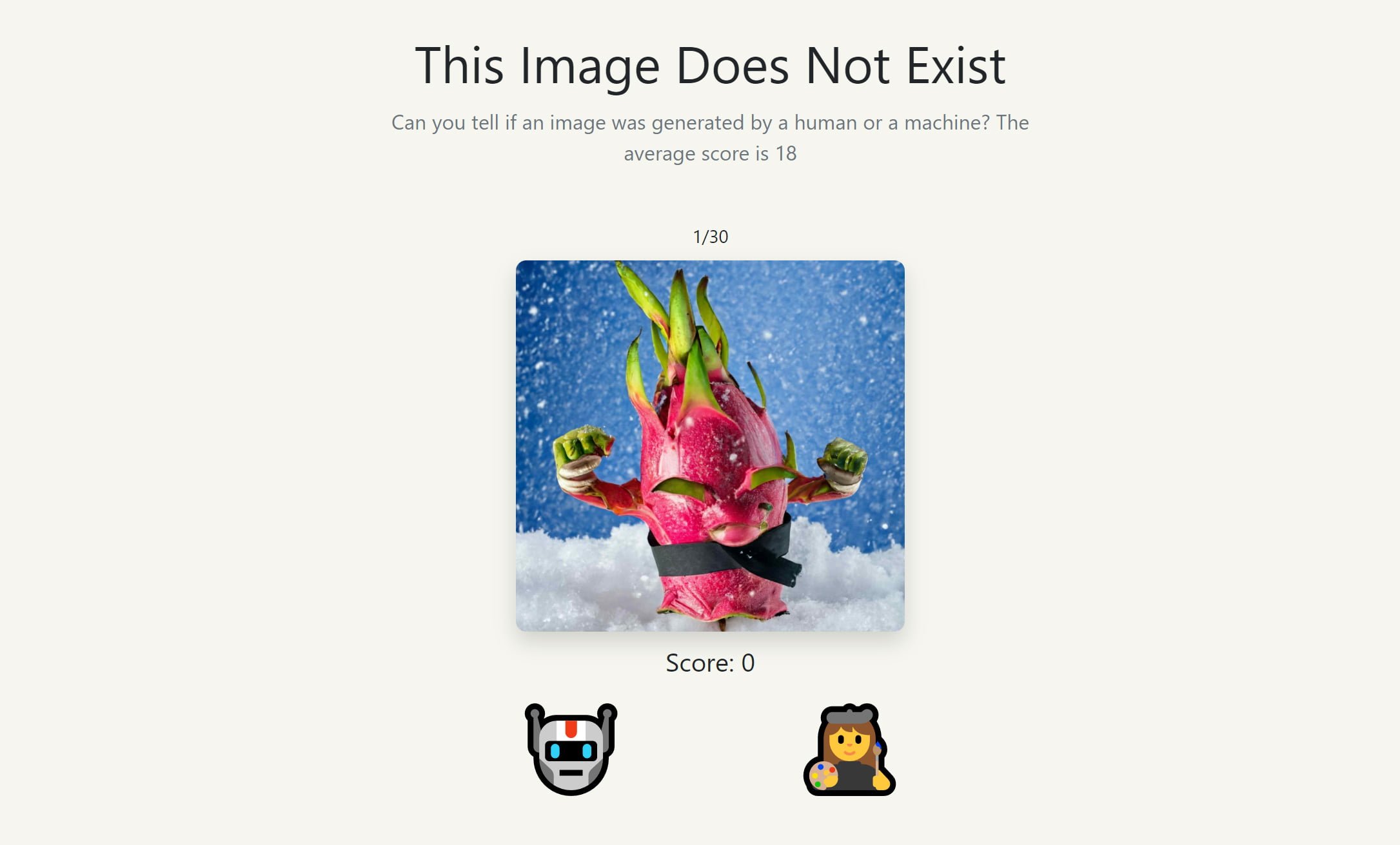

To draw attention to these advances, Sahar Mor, product manager at Stripe and an AI enthusiast, has launched the website thisimagedoesnotexist.com. Visitors can test whether they can still tell the difference between human- and machine-generated images.

For the website, which is inspired by the classic AI-fake website thispersondoesnotexist.com, he collected several hundred images generated by DALL-E 2 as well as images created by humans and developed a simple voting procedure: Does the image come from DALL-E 2, or was it created by a human?

Mor describes his website as a visual Turing test: It shows 30 images one after another, each made either by artificial intelligence or by a human. For each image, visitors vote for one of the two options and then see whether they were correct. In the case of AI-generated images, the website displays the text input used to generate them.

DALL-E 2: Things are (not) looking good

In the first week after the launch of the website, more than 40,000 visitors from over 100 countries voted more than 400,000 times. The average score is 18 out of 30, or 60 percent, so visitors correctly classified images in only slightly more than half of the cases. That is little better than chance.
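The arithmetic behind that comparison can be sketched in a few lines of Python. This is only an illustration, not the website's scoring code: the function names are hypothetical, and the sketch assumes that a visitor who guesses blindly has an independent 50 percent chance of being right on each image, which the source does not state.

```python
from math import comb


def accuracy(correct: int, total: int) -> float:
    """Share of images classified correctly."""
    return correct / total


def prob_at_least(correct: int, total: int, p: float = 0.5) -> float:
    """Probability that a pure guesser gets at least `correct` of `total`
    images right, assuming independent guesses with success rate `p`
    (an assumption made for this sketch)."""
    return sum(
        comb(total, k) * p**k * (1 - p) ** (total - k)
        for k in range(correct, total + 1)
    )


# Figures from the article: an average score of 18 out of 30 images.
print(f"average accuracy: {accuracy(18, 30):.0%}")
print(f"chance that a pure guesser scores 18 or more: {prob_at_least(18, 30):.3f}")
```

Under these assumptions, a single visitor reaching 18 out of 30 by guessing alone would not be unusual. Whether the average across all visitors differs meaningfully from chance is a separate statistical question that this sketch does not address.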

For OpenAI, this is good news. For some people who earn their living with graphics, illustrations and photos, probably not.

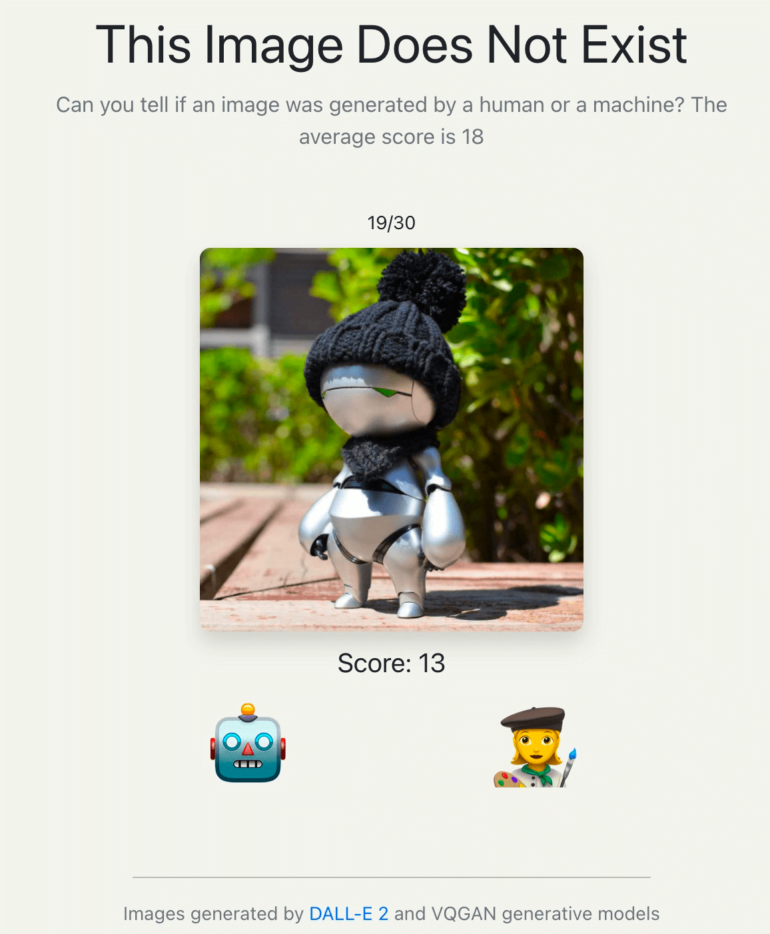

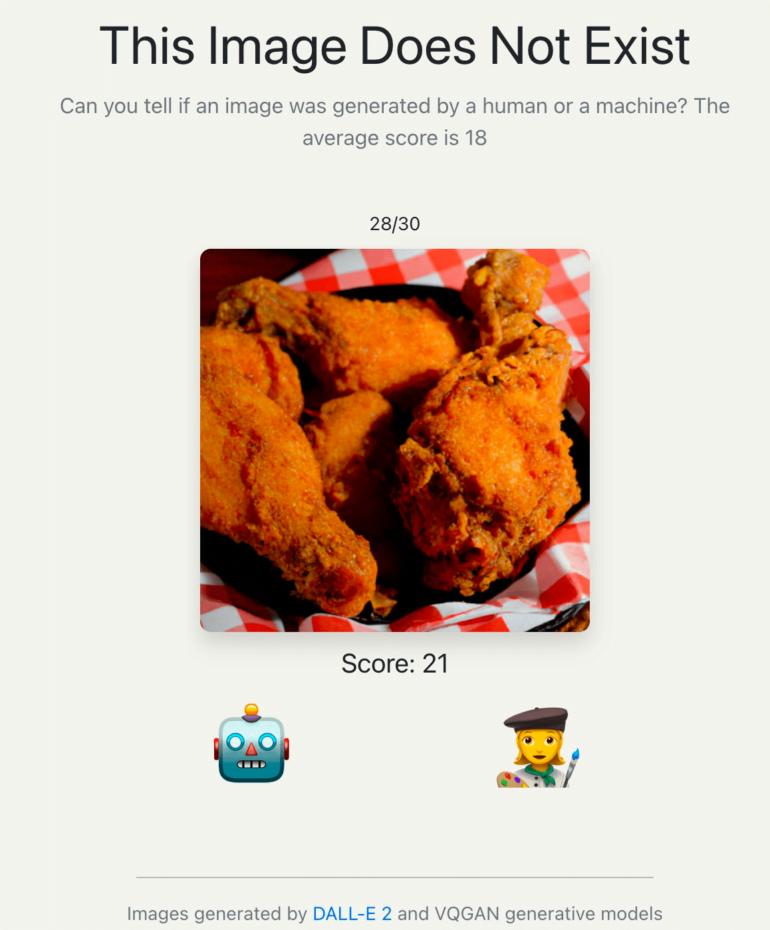

Visitors were wrong especially often about the two images shown above: 74 percent thought the robot with a cap (image source: Flat Wave) was AI-generated, and 82 percent thought the portion of fried chicken was a real photo. In fact, it is the other way around. DALL-E 2 generated the chicken photo based on the input "KFC Original Recipe Chicken, fresh, hot and juicy, 8K high resolution, studio lighting".

You can test your own talent for detecting AI-generated images at thisimagedoesnotexist.com.