Deepmind's "force prompting" lets AI create realistic video motion without physics engines

A team from Brown University and Deepmind has found that generative video models can produce realistic motion when prompted with artificial forces, without needing 3D models or physics engines.

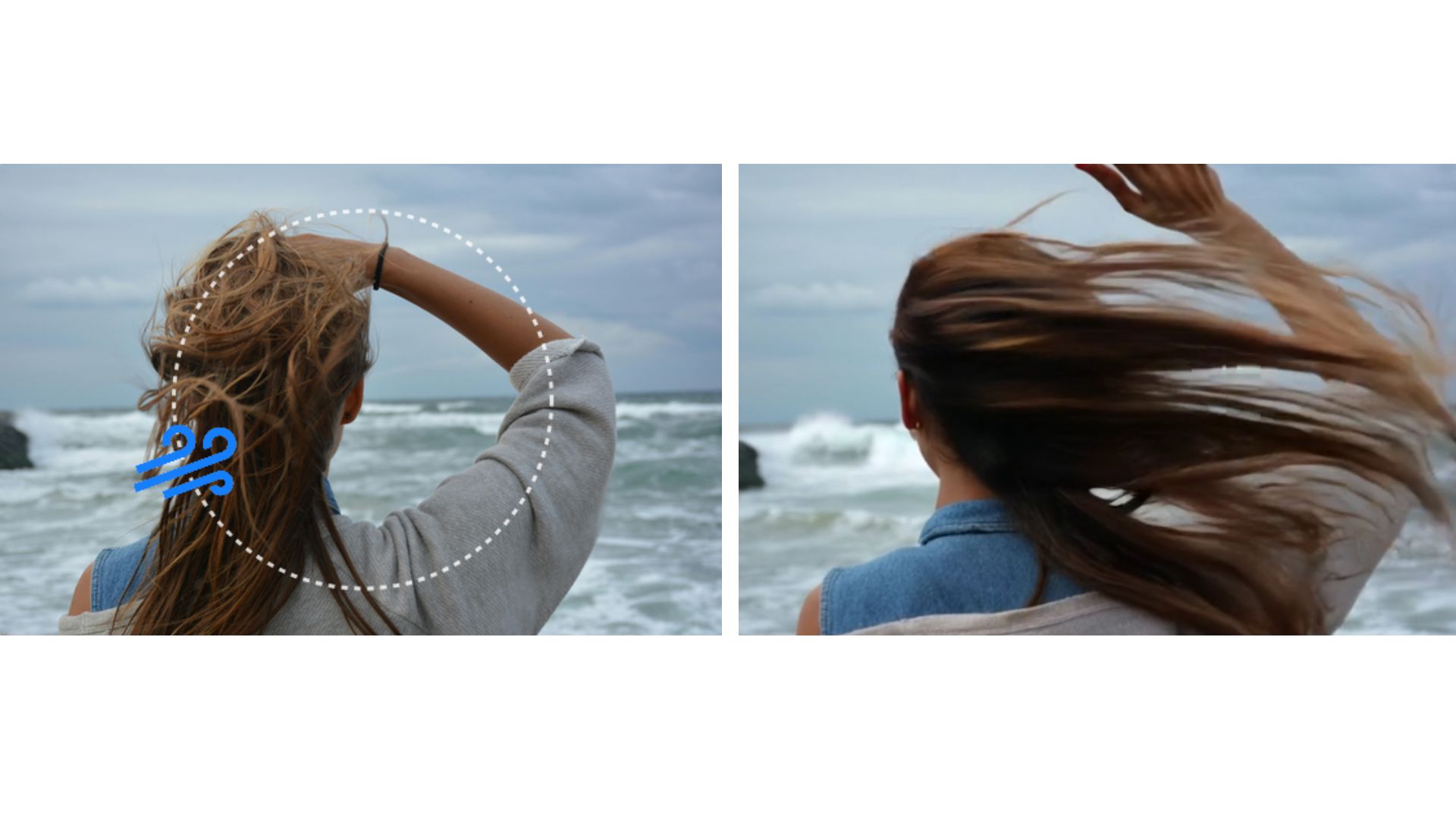

With so-called force prompting, you can nudge AI-generated motion by specifying the direction and strength of a force. The model handles both global forces—like wind blowing across the whole scene—and local forces, such as a tap on a single spot.

These forces are represented as vector fields and fed directly into the system, which then turns them into natural-looking movement. You can try interactive demos for both types of force on the project page.

Steering video generation with force vectors

The model distinguishes between global forces such as wind and local forces such as a targeted impact. Both variants are described by direction and strength. Global forces act uniformly on the entire image, local forces on a specific point. The control information is fed into the model as a vector field and translated into movement. Several interactive demos for both types of force can be found on the project page.

The team built on the CogVideoX-5B-I2V video model, adding a ControlNet module to process physical control data. These signals are passed into a Transformer architecture. The system generates 49 frames per video and was trained in just one day on four Nvidia A100 GPUs.

Training with synthetic wind, bouncing balls, and impacts

All the training data was synthetic. For global forces, the researchers created 15,000 videos of flags fluttering in different wind conditions. For local forces, they generated 12,000 videos with rolling balls and 11,000 clips of flowers reacting to impacts. Physical terms like "wind" or "bubbles" were included in the automatically generated text descriptions, anchoring the right relationships in the model.

Three AI-generated flag scenes show how the model reacts to different wind speeds. | Video: Gillman et al.

Each training example pairs a text prompt, a starting image, and a physical force—represented either as a full vector field (for global forces) or as a moving signal with direction, location, and strength (for local forces). These forces, originally simulated in 3D, were projected onto 2D image coordinates. To keep the dataset varied, the researchers also randomized backgrounds, lighting, camera angles, and wind or impact directions.

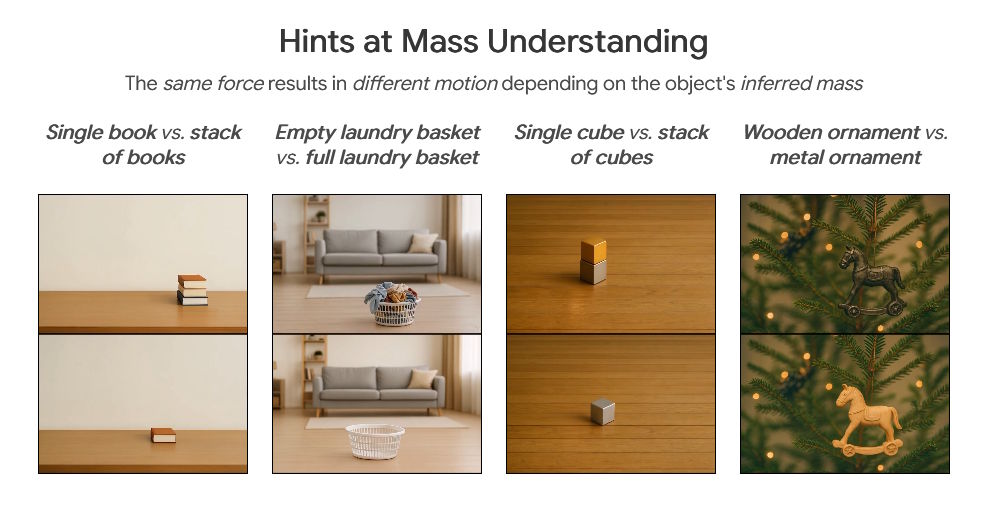

Intuitive physics from a small dataset

Despite being trained on relatively little data, the model does a good job generalizing to new objects, materials, and scenarios. It even picks up on simple physical rules—like lighter objects moving farther than heavier ones when hit with the same force.

In side-by-side comparisons, people preferred force prompting over text-only or motion-path-controlled baselines. Even against PhysDreamer, a model that uses real physics simulation, force prompting scored higher on how closely the movement matched the input force and how realistic it looked. PhysDreamer only pulled ahead in raw image quality.

Ablation studies showed that a diverse training set is crucial. Without varied backgrounds or extra objects, the model struggled to localize forces and separate movements. Omitting force-related terms from the text prompts also led to much worse results.

The researchers also found that the model treats objects as whole units: a bump anywhere moves the entire object. It also preserves style features from the original image, like lighting and depth of field, throughout the motion.

Realistic motion, but not a full simulation

While force prompting produces convincing results, it can't fully replace accurate physics simulations. The researchers saw mistakes in complicated scenarios—for example, smoke sometimes ignored wind, and human arms occasionally moved like cloth. Still, the technique is an efficient way to add physically plausible interactions to AI-generated video.

Deepmind CEO Demis Hassabis recently highlighted how new AI video models like Veo 3 are starting to pick up on the underlying rules of physics. According to Hassabis, these models are moving past basic text or image processing and beginning to represent the physical structure of the world itself, which he sees as a crucial step toward more general AI that can learn from experience in simulations, not just from data.

AI News Without the Hype – Curated by Humans

As a THE DECODER subscriber, you get ad-free reading, our weekly AI newsletter, the exclusive "AI Radar" Frontier Report 6× per year, access to comments, and our complete archive.

Subscribe nowAI news without the hype

Curated by humans.

- Over 20 percent launch discount.

- Read without distractions – no Google ads.

- Access to comments and community discussions.

- Weekly AI newsletter.

- 6 times a year: “AI Radar” – deep dives on key AI topics.

- Up to 25 % off on KI Pro online events.

- Access to our full ten-year archive.

- Get the latest AI news from The Decoder.