DeepMind's new prompting method takes a step back for more accuracy

A recent paper from Alphabet's AI company Google DeepMind shows that a simple tweak to prompts can significantly improve the accuracy of large language models. The technique draws on the human ability to abstract.

Step-back prompting first asks the LLM a more general question before it tackles the actual task. This allows the system to retrieve relevant background information and better categorize the actual question. The method is easy to implement, requiring just one additional introductory question.

Question:

Which school did Estella Leopold attend between August 1954 and November 1954?

Step-back question:

What was Estella Leopold's educational history?

Step-back answer:

B.S. in Botany, University of Wisconsin, Madison, 1948

M.S. in Botany, University of California, Berkeley, 1950

Ph.D. in Botany, Yale University, 1955

Final answer:

From 1951 to 1955, she was enrolled in the Ph.D. program in Botany at Yale, so Estella Leopold was most likely at Yale University between August 1954 and November 1954.
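The three stages of this example (step-back question, step-back answer, final answer) can be sketched as a small Python pipeline. This is a minimal sketch under stated assumptions, not DeepMind's implementation: `complete` is a hypothetical stand-in for any function that sends a prompt to an LLM and returns its reply, `fake_llm` returns canned text so the sketch runs offline, and the prompt wording is illustrative rather than the paper's templates.

```python
from typing import Callable

# Hypothetical interface: any function that sends a prompt to an LLM and returns text.
Complete = Callable[[str], str]


def step_back_answer(question: str, complete: Complete) -> str:
    """Answer a question via step-back prompting (illustrative sketch)."""
    # Step 1: ask the model to abstract the question into a more general one.
    step_back_question = complete(
        "Rephrase the following question as a more general question about the "
        "background knowledge needed to answer it.\n"
        f"Question: {question}\nStep-back question:"
    ).strip()

    # Step 2: have the model answer the general question to surface background facts.
    background = complete(f"Question: {step_back_question}\nAnswer:").strip()

    # Step 3: answer the original question, grounded in that background.
    return complete(
        f"Background question: {step_back_question}\n"
        f"Background: {background}\n"
        "Using this background, answer the original question.\n"
        f"Question: {question}\nFinal answer:"
    ).strip()


def fake_llm(prompt: str) -> str:
    # Hypothetical canned replies for an offline demo; a real setup would call an LLM API.
    if prompt.endswith("Step-back question:"):
        return "What was Estella Leopold's educational history?"
    if prompt.endswith("Final answer:"):
        return "Yale University"
    return "B.S. Wisconsin 1948; M.S. Berkeley 1950; Ph.D. Yale 1955"


print(step_back_answer(
    "Which school did Estella Leopold attend between August 1954 and November 1954?",
    fake_llm,
))  # prints: Yale University
```

Splitting the stages into separate calls keeps each prompt simple; packing the step-back question and the final task into a single prompt would be an equally plausible design.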

The DeepMind study tested step-back prompting on the PaLM-2L language model and compared the results with the unmodified base model and with GPT-4. The researchers were able to increase the accuracy of PaLM-2L by up to 36 percent compared to chain-of-thought (CoT) prompting.

Improvements across all tested domains

Step-back prompting was tested in the areas of science, general knowledge, and reasoning. The researchers observed the greatest improvements in more complex tasks requiring multiple steps of reasoning.

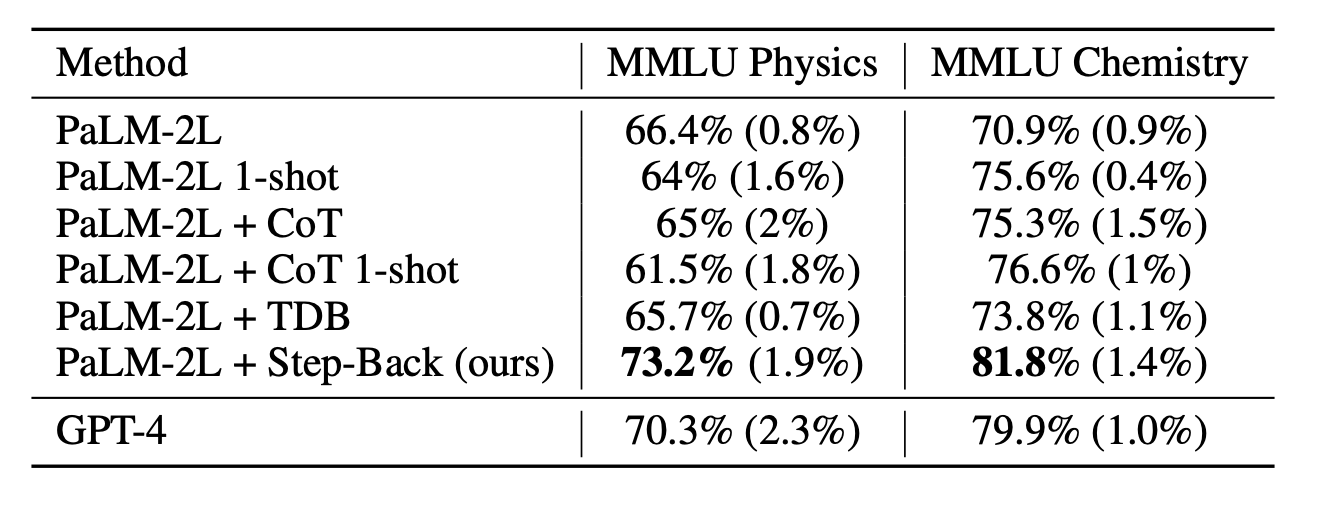

In physics and chemistry tasks, accuracy increased by 7 to 11 percentage points compared to the unmodified model. With step-back prompting, PaLM-2L even outperformed GPT-4 by a few percentage points. For these tasks, the step-back question was: "What physical or chemical principles and concepts are needed to solve this problem?"

Most importantly, DeepMind's prompting method also performed significantly better than existing methods such as chain-of-thought and "take a deep breath" (TDB), which only marginally improved or even worsened accuracy.
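For the physics and chemistry tasks, the step-back question is the same fixed sentence for every problem, so the abstraction stage reduces to a prompt template. The Python sketch below assumes a hypothetical `complete` function that calls an LLM; only the quoted question comes from the study, while the rest of the prompt wording and the canned `fake_llm` replies are illustrative.

```python
from typing import Callable

# Step-back question quoted in the article for the physics and chemistry tasks.
PRINCIPLES_QUESTION = (
    "What physical or chemical principles and concepts are needed to solve this problem?"
)


def solve_with_principles(problem: str, complete: Callable[[str], str]) -> str:
    # Step back: ask for the governing principles before attempting a solution.
    principles = complete(f"{problem}\n\n{PRINCIPLES_QUESTION}").strip()
    # Then solve the original problem, explicitly grounded in those principles.
    return complete(
        f"Principles: {principles}\n\n"
        f"Problem: {problem}\n"
        "Solve the problem step by step using the principles above."
    ).strip()


def fake_llm(prompt: str) -> str:
    # Hypothetical canned replies for an offline demo; a real setup would call an LLM API.
    if prompt.endswith(PRINCIPLES_QUESTION):
        return "Ideal gas law: PV = nRT, so P = nRT / V."
    return "P is multiplied by 2 / 8, so the pressure drops to a quarter."


print(solve_with_principles(
    "What happens to the pressure of an ideal gas if the temperature doubles "
    "and the volume increases eightfold?",
    fake_llm,
))  # prints: P is multiplied by 2 / 8, so the pressure drops to a quarter.
```

Because the question never changes, this variant needs no extra model call to generate the abstraction, only one to answer it.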

PaLM-2L can achieve better performance with step-back prompting than GPT-4

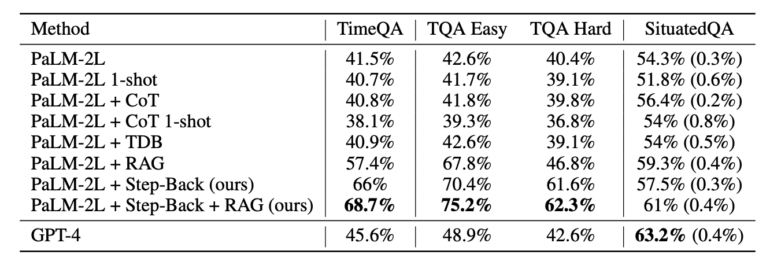

The improvement was even more pronounced for knowledge questions with a temporal component from the TimeQA dataset. Here, combining step-back prompting with retrieval-augmented generation (RAG) yielded a whopping 27 percentage points over the base model, putting it about 23 percentage points ahead of GPT-4. Of course, step-back prompting can also be used with GPT-4; the comparison is simply meant to show the size of the gain.

Even on particularly difficult knowledge questions, which were less likely to be answered correctly with RAG, the researchers found a significant gain in accuracy with step-back prompting. "This is where STEP-BACK PROMPTING really shines by retrieving facts regarding high-level concepts to ground the final reasoning," the paper states.
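One plausible way to combine step-back prompting with RAG, sketched here as an assumption rather than the paper's exact setup, is to retrieve passages for both the original question and the step-back question and hand the merged set to the model. The Python sketch below assumes the step-back question has already been generated, and uses a hypothetical toy keyword-overlap retriever (`retrieve`) and a hypothetical `complete` function in place of a real search index and LLM API.

```python
import re
from typing import Callable, List, Set


def tokenize(text: str) -> Set[str]:
    # Lowercased alphanumeric tokens; a crude stand-in for real query encoding.
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, corpus: List[str], k: int = 2) -> List[str]:
    # Hypothetical toy retriever: rank passages by word overlap with the query.
    # sorted() is stable even with reverse=True, so ties keep their corpus order.
    q = tokenize(query)
    ranked = sorted(corpus, key=lambda doc: len(q & tokenize(doc)), reverse=True)
    return ranked[:k]


def step_back_rag(
    question: str,
    step_back_question: str,
    corpus: List[str],
    complete: Callable[[str], str],
    k: int = 2,
) -> str:
    # Retrieve for the specific question and for the broader step-back question,
    # keeping each passage only once, in first-seen order.
    passages: List[str] = []
    for query in (question, step_back_question):
        for doc in retrieve(query, corpus, k):
            if doc not in passages:
                passages.append(doc)
    context = "\n".join(f"- {p}" for p in passages)
    # Ground the final answer in the merged context.
    return complete(
        f"Context:\n{context}\n\n"
        f"Background question: {step_back_question}\n"
        f"Question: {question}\nAnswer:"
    ).strip()
```

In practice, `retrieve` would be replaced by a real search index and `k` tuned to the model's context budget; the point of the second query is that passages about the broader concept can surface even when the specific question's wording misses them.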

Despite the promising results, the error analysis showed that multi-step reasoning is still one of the most difficult skills for an LLM. The technique is also not always effective or helpful, for example, when the answer is common knowledge ("Who was president of the USA in 2000?") or when the question is already at a high level of abstraction ("What is the speed of light?").

AI News Without the Hype – Curated by Humans

As a THE DECODER subscriber, you get ad-free reading, our weekly AI newsletter, the exclusive "AI Radar" Frontier Report 6× per year, access to comments, and our complete archive.
