Deepseek puts pressure on Meta with open source AI models at a fraction of the cost

In recent weeks, Chinese AI startup Deepseek has shown that cutting-edge AI development doesn't require massive budgets, putting pressure on established AI labs. Meta CEO Mark Zuckerberg is responding by doubling down on AI investments.

Deepseek's latest model shows just how efficient AI development can be. Its Deepseek-V3 language model performs on par with the world's leading AI systems, but cost just $5.6 million to train, a tiny fraction of what larger companies typically spend.

Deepseek-V3 needed only 2.78 million GPU hours of training time, while Meta's smaller Llama-3 model (with 405 billion parameters) required about eleven times that amount. The company followed up with Deepseek-R1, a reasoning model that matches OpenAI's o1. Meta has not yet released a reasoning model of its own.
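The figures above can be cross-checked with a few lines of arithmetic. The sketch below uses only the numbers quoted in this article; the per-GPU-hour rate and the Llama-3 total are derived values, not reported ones, and the rate assumes the $5.6 million figure covers GPU time for the training run and nothing else. The function and constant names are illustrative.

```python
# Figures quoted in the article.
V3_TRAINING_COST_USD = 5.6e6   # reported training cost of Deepseek-V3
V3_GPU_HOURS = 2.78e6          # reported GPU hours for Deepseek-V3
LLAMA_MULTIPLIER = 11          # "about eleven times that amount"


def implied_rate_per_gpu_hour(cost_usd: float, gpu_hours: float) -> float:
    """Cost per GPU hour implied by the two reported figures.

    Assumption: the cost figure reflects GPU time only.
    """
    return cost_usd / gpu_hours


def implied_gpu_hours(base_gpu_hours: float, multiplier: float) -> float:
    """GPU hours implied by scaling a baseline by a rough multiplier."""
    return base_gpu_hours * multiplier


rate = implied_rate_per_gpu_hour(V3_TRAINING_COST_USD, V3_GPU_HOURS)
llama_hours = implied_gpu_hours(V3_GPU_HOURS, LLAMA_MULTIPLIER)

print(f"Implied rate: ${rate:.2f} per GPU hour")
print(f"Implied Llama-3 405B training time: {llama_hours / 1e6:.1f} million GPU hours")
```

Under these assumptions the quoted cost works out to roughly two dollars per GPU hour, and the "eleven times" comparison implies a little over 30 million GPU hours for the Llama-3 model.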

Meta responds with major expansion plans

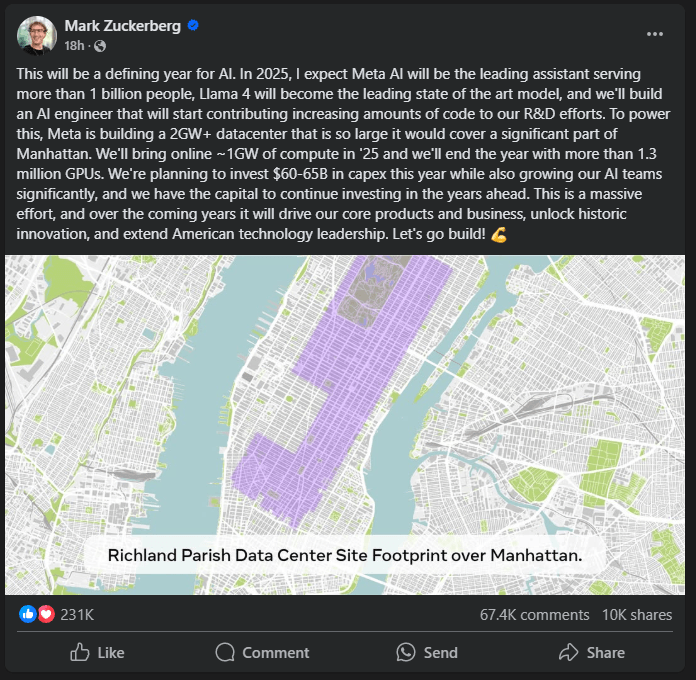

Zuckerberg took to Facebook recently to outline his company's response. In 2025, Meta aims to develop an AI assistant that can serve more than a billion people, upgrade Llama 4 to compete with the best models available, and create an "AI engineer" to help with its research and development. "This will be a defining year for AI," Zuckerberg wrote.

To support these goals, Meta is building a massive data center that will use more than two gigawatts of power. The company plans to bring online about one gigawatt of computing power and over 1.3 million GPUs in 2025 alone, backed by investments of $60-65 billion and significant team expansion.

Meta's AI chief researcher Yann LeCun sees Deepseek's success as a win for open source rather than a sign of Chinese dominance. He points out that Deepseek built on openly available research and profited from it, but also contributed new ideas others can build on. "This is the power of open research and open source," LeCun says. He praised their V3 model as "excellent" when it launched in late 2024.

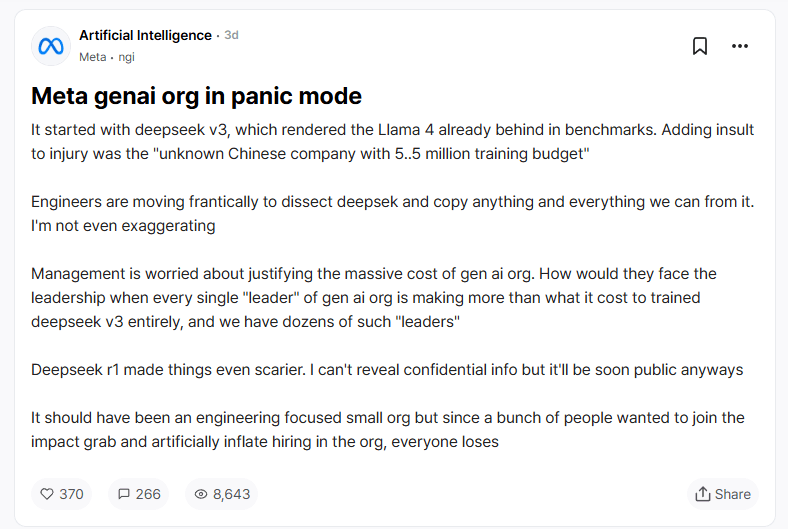

"It started with Deepseek-V3"

According to an anonymous post on Teamblind, a forum for verified Big Tech employees, Meta's AI department is feeling the pressure. The post claims Deepseek-V3 has already outperformed Meta's unreleased Llama-4 in benchmarks. This has raised concerns about the department's high operating costs, given that a relatively unknown Chinese company can achieve better results on such a tight budget. The post points out that a single department head's salary exceeds Deepseek's entire training budget. Deepseek's R1 reasoning model is causing even more headaches for the team.

The post suggests Meta's engineers are working frantically to analyze and adopt Deepseek's technology. It criticizes how Meta's AI division, originally meant to be small and technically focused, has grown bloated as employees rushed to join the AI trend.

Zuckerberg's and LeCun's public statements appeared at almost the same time. The timing suggests that Meta decided internally to respond indirectly to these rumors and the social media conversations they have sparked.
