Developer squeezes entire GPT-2 AI model into a single Excel spreadsheet

Software developer Ishan Anand has packed the entire 124-million-parameter GPT-2 into an Excel spreadsheet. The goal is to give non-developers an insight into how modern LLMs based on the widely used Transformer architecture work in detail.

The Excel file containing the GPT-2 language model is about 1.2 GB in size and requires the latest version of Excel on Windows. Anand warns against using it on macOS, where the file size causes the program to freeze. The language model runs completely locally, using only Excel's own functions, with no access to cloud services or Python scripts.

One token per minute

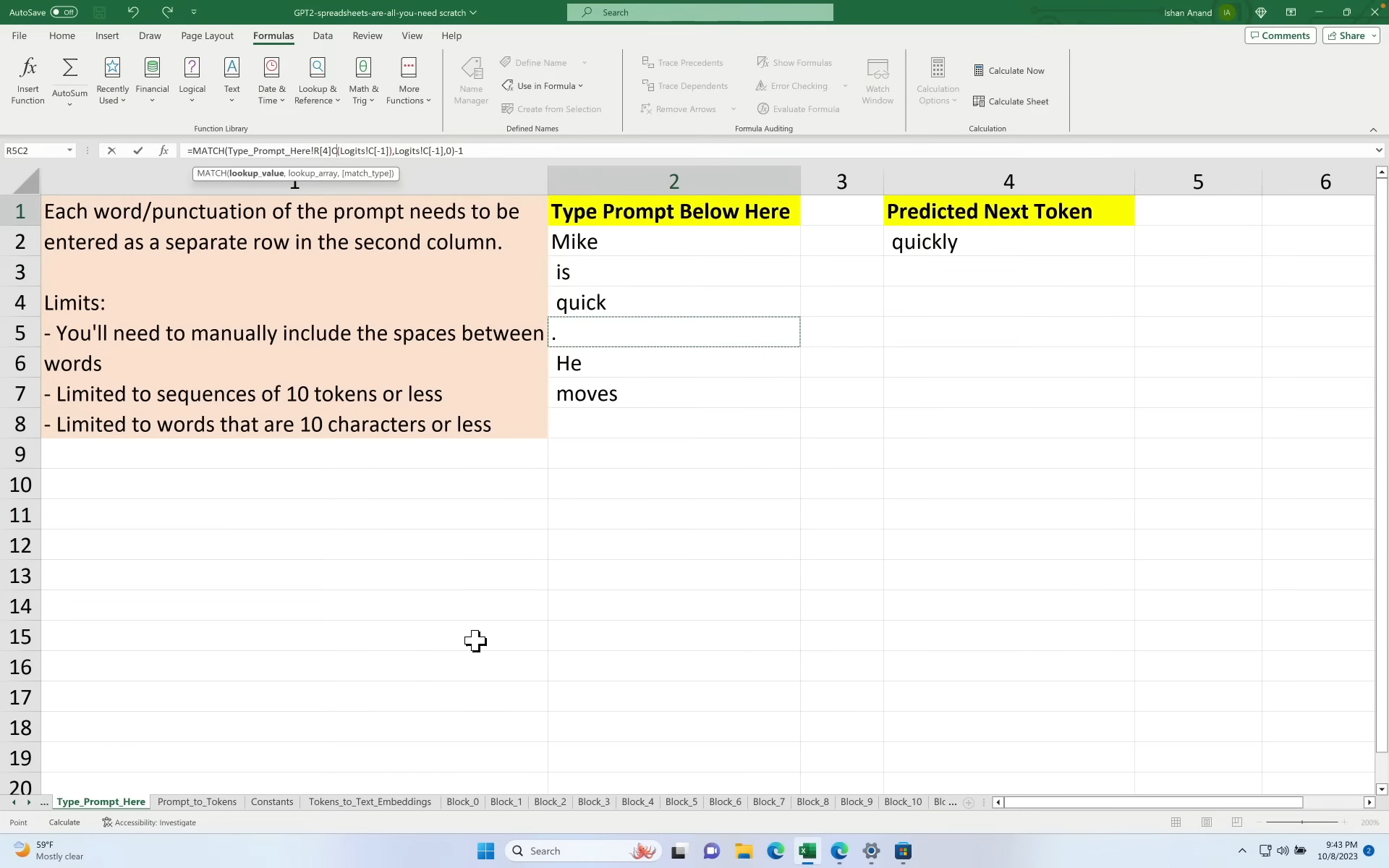

Of course, the Excel file is not a replacement for a chatbot like ChatGPT. Users can type words into a given cell and, after about a minute, see the next most likely word in another cell. However, various tables can be used to see what happens in the background during processing.
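Picking the "next most likely word" can be illustrated in a few lines. The following is a minimal sketch, not Anand's spreadsheet logic: the four-word vocabulary and the scores are made up for illustration, and the function names are hypothetical. It converts raw scores into probabilities with a softmax and then takes the highest one (greedy decoding).

```python
import math

def softmax(logits):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def most_likely_token(vocab, logits):
    """Greedy decoding: return the token with the highest probability."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return vocab[best], probs[best]

# Hypothetical vocabulary and made-up scores, for illustration only.
vocab = ["fast", "slowly", "quickly", "banana"]
logits = [3.1, 0.4, 2.7, -1.5]

token, prob = most_likely_token(vocab, logits)
print(token, round(prob, 3))
```

A real model produces one score for every entry in its vocabulary, so the same step runs over tens of thousands of candidates instead of four.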

Anand demonstrated how this works in a talk at Seattle AI Tinkerers. He entered the sentence fragment "Mike is fast, he moves". The model completed it with "fast", a plausible continuation.

Anand explained that his goal was to make the concepts of the underlying Transformer architecture tangible and interactive. In fact, a language model lends itself well to an Excel file because it consists mainly of mathematical operations, Anand told Ars Technica. Tokenization, the conversion of words into numbers, was a particular challenge.
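To make "conversion of words into numbers" concrete, here is a toy sketch. It is a simplification under stated assumptions: GPT-2 itself uses byte-pair encoding with a vocabulary of 50,257 entries, while this example uses a tiny hypothetical vocabulary and a plain greedy longest-match rule. The names `TOY_VOCAB` and `tokenize` are invented for illustration.

```python
# Hypothetical toy vocabulary; the real GPT-2 vocabulary has 50,257 byte-pair entries.
TOY_VOCAB = {
    "Mike": 0, " is": 1, " fast": 2, ",": 3,
    " he": 4, " moves": 5, " mov": 6, "es": 7,
}

def tokenize(text, vocab):
    """Greedy longest-match: take the longest vocabulary entry that starts the remaining text."""
    ids = []
    pos = 0
    while pos < len(text):
        for end in range(len(text), pos, -1):
            piece = text[pos:end]
            if piece in vocab:
                ids.append(vocab[piece])
                pos = end
                break
        else:
            # No entry matched, so this toy vocabulary cannot encode the text.
            raise ValueError(f"no vocabulary entry matches at position {pos}")
    return ids

ids = tokenize("Mike is fast, he moves", TOY_VOCAB)
print(ids)
```

Note that the leading space is part of most entries, which mirrors how GPT-2's tokenizer treats a word at the start of a text differently from the same word after a space.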

Anand wants to make it easy to understand, for example, why the paper that introduced the Transformer architecture is titled "Attention Is All You Need." His project pays tribute to it with the name "Spreadsheets are all you need." He has already published tutorial videos on his website, with more to come.

But Anand also sees benefits for developers. For example, the spreadsheet view makes it easier to understand why techniques like chain-of-thought prompting improve performance: they give the model more space and more "passes" through the attention layers to recognize correlations.
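What an attention layer computes can be sketched in plain Python. This is a simplified illustration, not the spreadsheet's implementation: it shows a single attention head with a causal mask and made-up two-dimensional vectors, and it skips the learned query, key and value projections that GPT-2 applies first. Each position mixes information from itself and all earlier tokens, so a longer context gives later tokens more material to draw on.

```python
import math

def causal_attention(queries, keys, values):
    """Single-head scaled dot-product attention with a causal mask."""
    dim = len(queries[0])
    outputs = []
    for i, q in enumerate(queries):
        # Position i may only look at positions 0..i (itself and earlier tokens).
        scores = [
            sum(a * b for a, b in zip(q, keys[j])) / math.sqrt(dim)
            for j in range(i + 1)
        ]
        peak = max(scores)  # subtract the max for numerical stability
        exps = [math.exp(s - peak) for s in scores]
        total = sum(exps)
        weights = [e / total for e in exps]
        # Weighted average of the visible value vectors.
        out = [
            sum(w * values[j][d] for j, w in enumerate(weights))
            for d in range(len(values[0]))
        ]
        outputs.append(out)
    return outputs

# Made-up vectors for three tokens; GPT-2 small works with 768 dimensions.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out = causal_attention(x, x, x)
print(out[0])  # the first token can only attend to itself
```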

ChatGPT helped with implementation

He originally implemented the project in Google Sheets but switched to Excel because of a lack of storage space. Anand is aware of the technical limitations: contexts longer than 10 tokens are currently not possible without extensively restructuring the internal weight matrices. Anand has been working on the project since June 2023 and says ChatGPT has been particularly helpful.

GPT-2 was considered a milestone in the development of large language models when it was released in 2019. OpenAI was initially reluctant to release the full source code and trained parameters, citing concerns about misuse. It was not until later in 2019 that GPT-2 was made fully available. The Excel version uses GPT-2 Small with 124 million parameters rather than the full model with 1.5 billion parameters. Today's GPT-4-level models are estimated to have several hundred billion parameters.
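The 124 million figure can be reproduced with simple arithmetic. The sketch below assumes the publicly released GPT-2 Small configuration, which the article does not state: a vocabulary of 50,257 tokens, a context of 1,024 positions, a width of 768 and 12 Transformer blocks, with the output layer reusing the token embedding matrix. The helper names are hypothetical.

```python
# Published GPT-2 Small hyperparameters (an assumption; not stated in the article).
VOCAB, CONTEXT, WIDTH, LAYERS = 50257, 1024, 768, 12

def linear(n_in, n_out):
    """Weights plus biases of one fully connected layer."""
    return n_in * n_out + n_out

def layer_norm(width):
    """One scale and one shift value per dimension."""
    return 2 * width

# Token embeddings plus position embeddings.
embeddings = VOCAB * WIDTH + CONTEXT * WIDTH

per_block = (
    layer_norm(WIDTH)
    + linear(WIDTH, 3 * WIDTH)   # query, key and value projections
    + linear(WIDTH, WIDTH)       # attention output projection
    + layer_norm(WIDTH)
    + linear(WIDTH, 4 * WIDTH)   # feed-forward expansion
    + linear(4 * WIDTH, WIDTH)   # feed-forward contraction
)

# Final layer norm; the output layer shares the token embedding matrix.
total = embeddings + LAYERS * per_block + layer_norm(WIDTH)
print(f"{total:,}")
```

Roughly a third of the parameters sit in the embedding tables, and the rest are spread evenly across the 12 blocks.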
