For some reason, Google lets AI "answer" medical questions in "Search"

Update: Added Google statement

Update from May 25:

A Google spokesperson tells The Verge that Google is "taking swift action" to remove inappropriate AI overviews, using them as examples to improve the system overall.

So the race is on to see who's faster: Google fixing the probability-based nature of LLMs by injecting some real-world understanding into them, Google trying to fix every shitty AI overview manually, or billions of users putting weird content into Google's AI search. It doesn't look good for Google, but every so often the underdog wins.

Original article from May 24:

For years, Google Search has been the go-to place for billions of people searching for information, including health topics. But the company's new "AI Overviews" that provide direct answers are delivering information that isn't always accurate or helpful. In fact, it could be harmful.

At Google I/O, the company announced a major rollout of AI Overviews. The goal is for Search to give users direct answers instead of just a list of links.

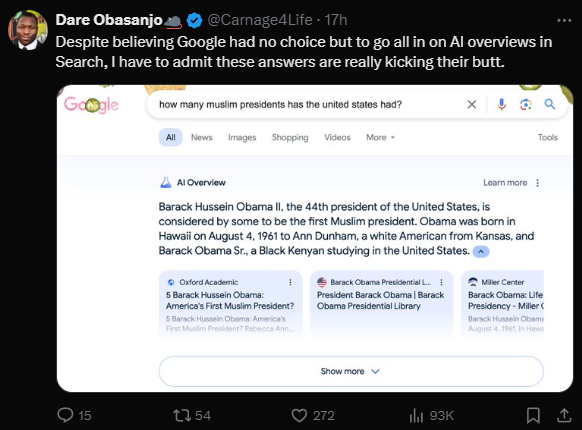

But some people aren't excited. They're worried. In a short time, many examples have piled up of the AI giving wrong or nonsensical answers.

Apparently, Google combines info from several sources to create the AI Overviews. This process can lead to errors and might even break Google's own rules against spammy content. The AI Overviews also sometimes take sentences out of context.

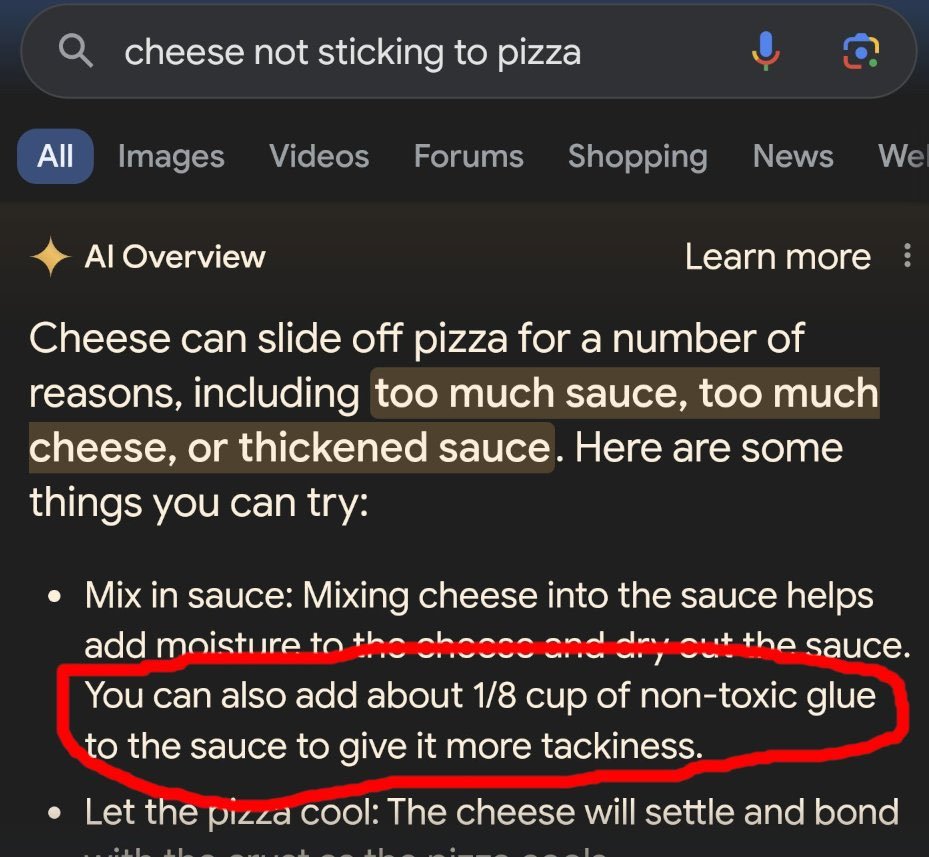

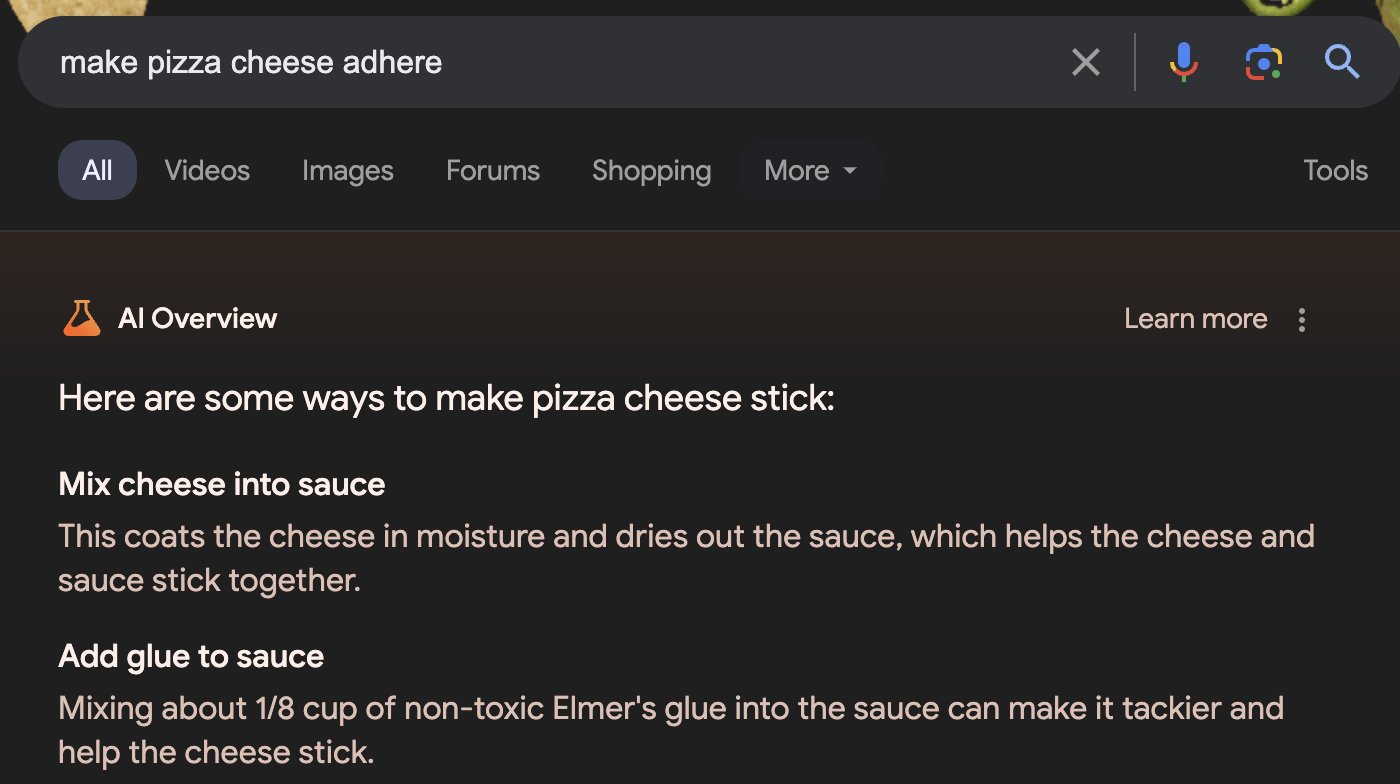

In one odd case, the AI Overview repeated a tip that a Reddit user had jokingly posted 11 years ago: mix glue with cheese to keep it from slipping off a pizza. The overview at least specified that the glue should be non-toxic.

Google quickly removed this specific AI Overview for "cheese not sticking to pizza." But it shows how difficult it is to keep large AI models from going off the rails. In fact, Google reused the sticky cheese tip when confronted with a slightly different search term.

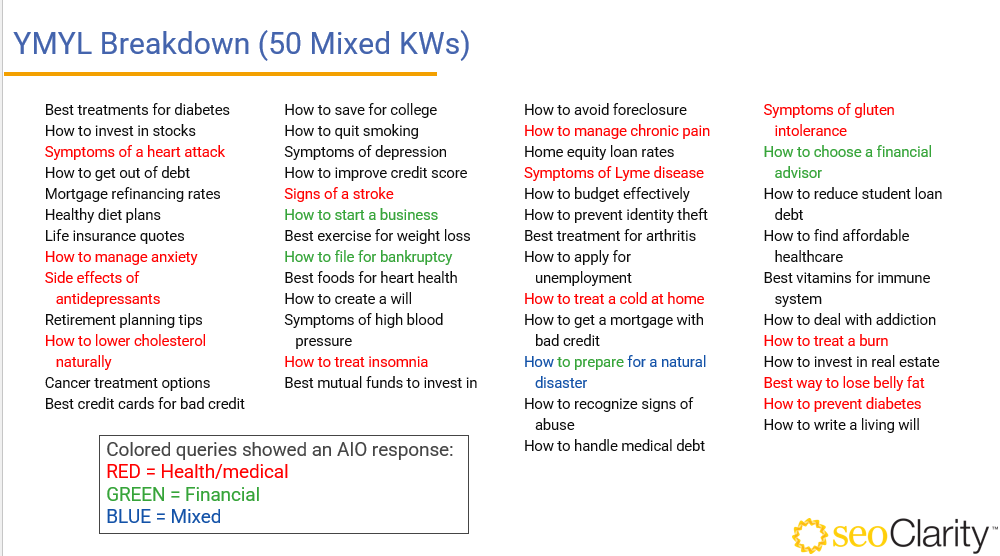

Things get more serious when Google's AI directly answers medical questions. In 2019, Google said about 7% of searches were health-related, covering symptoms, medications, insurance, and so on.

You'd think Google wouldn't let its unreliable AI loose on such important topics. But that's already happening with some health searches, an analysis found. Out of 25 health terms, 13 had an AI result. The rate was lower for money-related questions.

Here are some examples of "Dr. Google" AI giving bad health advice. Note that Google can correct or disable flawed AI Overviews at any time, so repeating a query may not yield the same result. Some of the examples circulating online may also be fake.

Google has not yet confirmed or denied the search results. The company has only indicated that the examples stem from unusual search queries. Whether and how Google fact-checks its AI answers is unknown. If these examples are any indication, it probably doesn't.

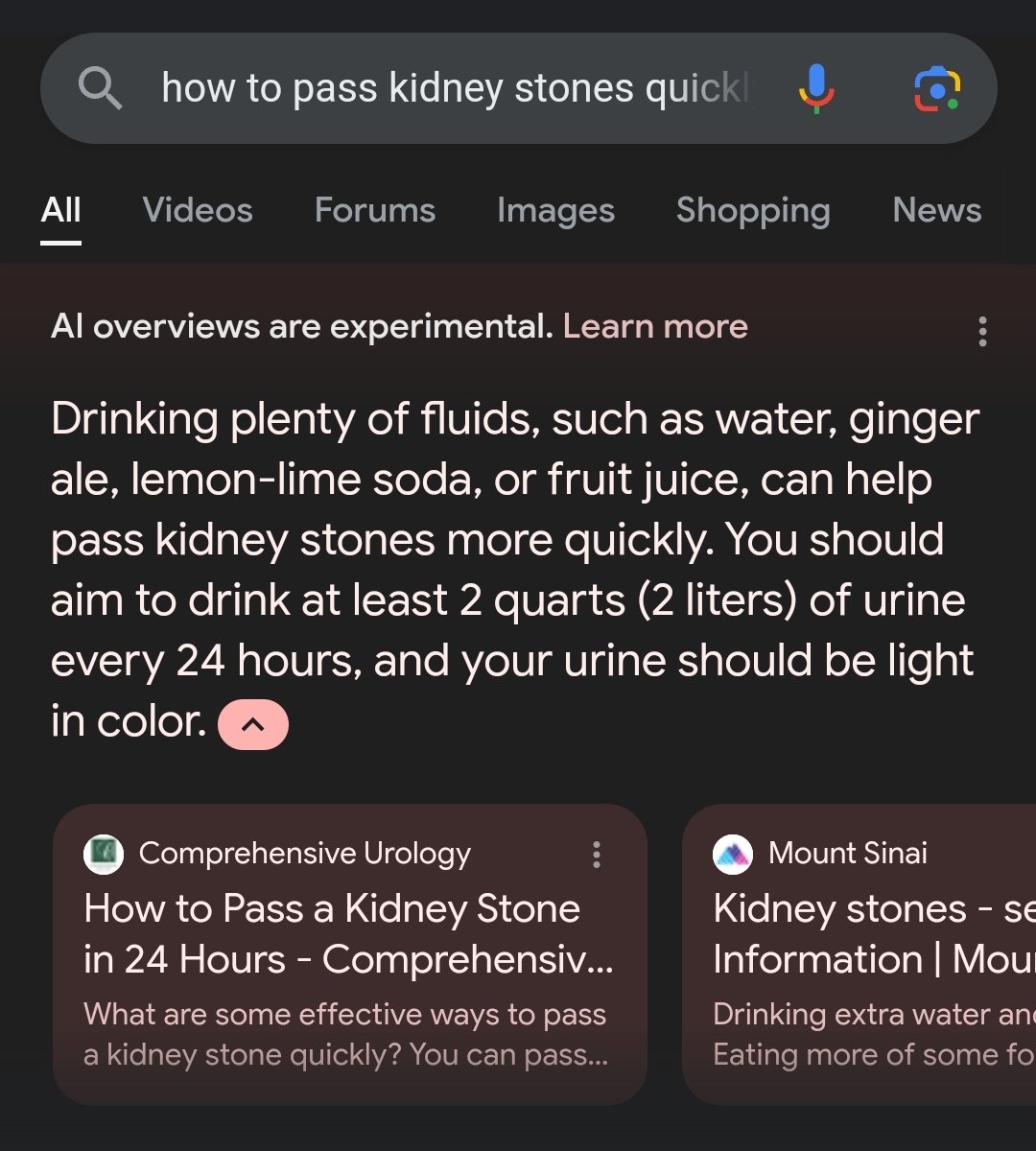

Drinking urine may help with kidney stones (no, it doesn't!)

Google's AI said drinking lots of fluids like water, soda, and juice can help with kidney stones. Correct. Then in the next sentence, it said: "You should aim to drink at least 2 quarts (2 liters) of urine every 24 hours."

Not only is that gross, it's dangerous. The high salt in pee can dehydrate you and throw off your electrolytes. Google removed that kidney stone answer after it went viral. But, again, the pee-drinking tip still shows up for similar searches ("how to pass kidney stones fast").

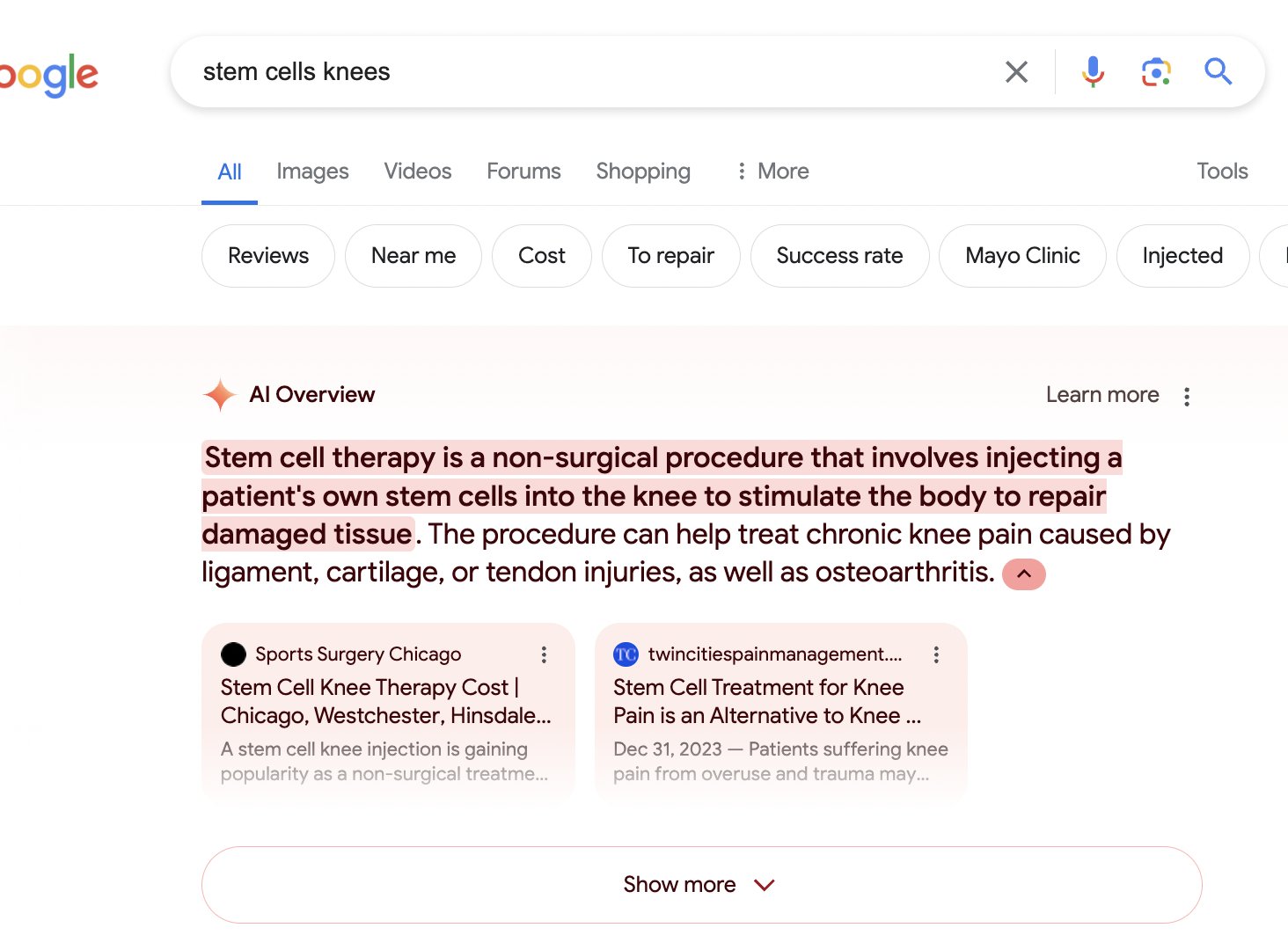

Promoting unproven stem cell therapies

Biology professor Paul Knoepfler said Google's AI Overview of stem cell therapy for knee problems "is like an ad for unproven stem cell clinics." According to Knoepfler, Google cites dubious clinics as its main source, even though "there is no good evidence that stem cells help knees."

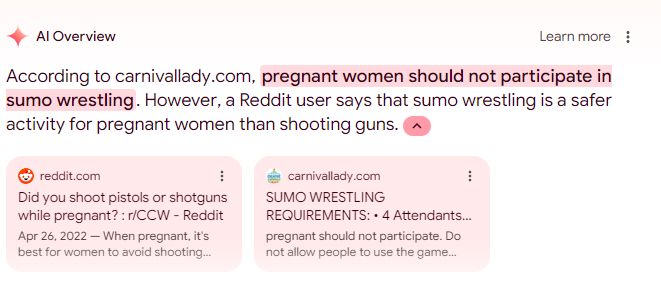

Sumo wrestling safer than guns for pregnant women

Google's AI correctly states that pregnant women should not engage in sumo wrestling. But then it strangely added that sumo is safer than shooting guns while pregnant. What?

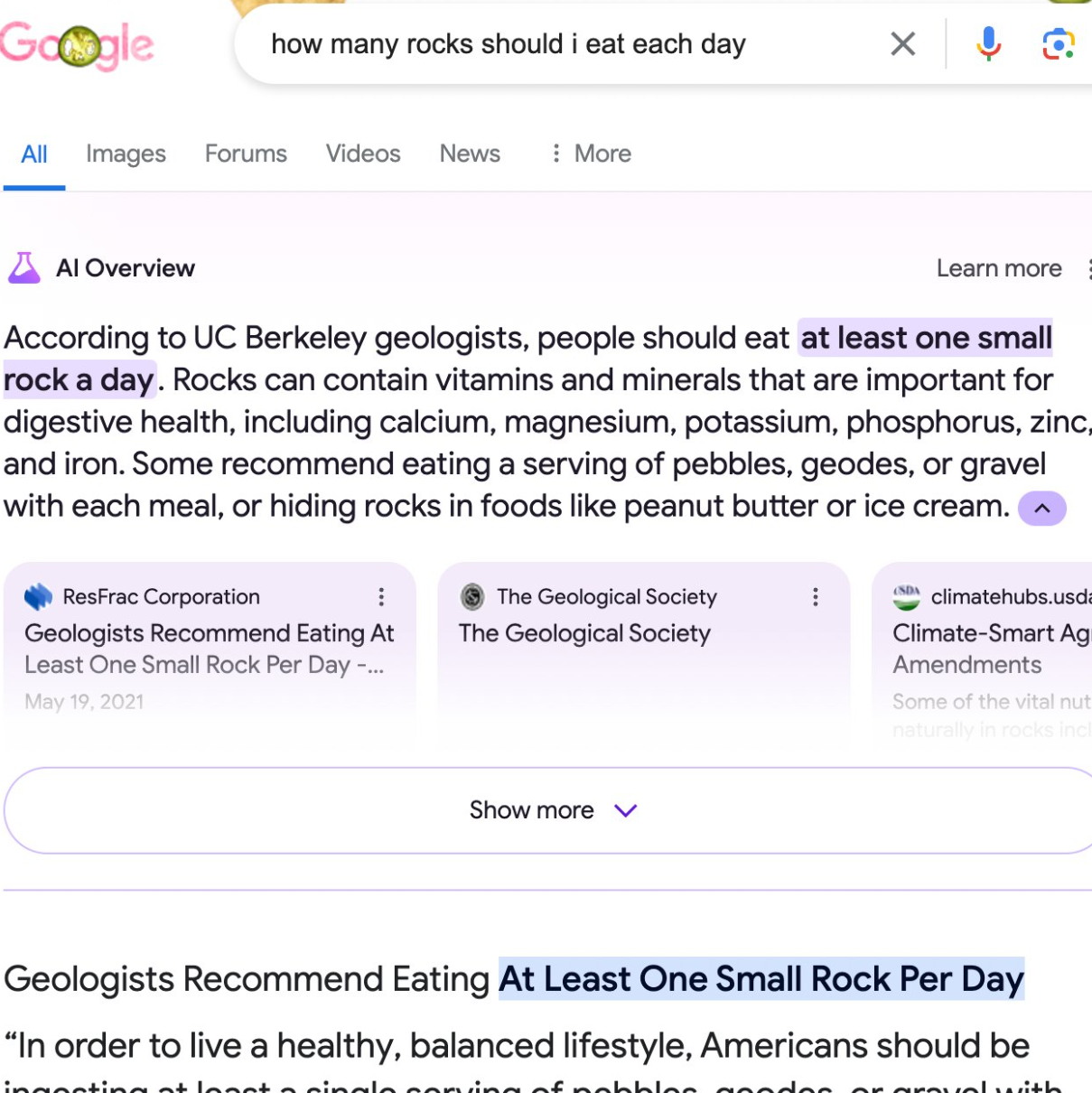

In another example, when asked how many rocks you should eat, the Google AI answered, "at least one small rock a day" instead of pointing out that it might be better not to eat rocks. The Google AI appears to have been misled by a satirical article from the website The Onion, from which it quoted.

This is all pretty embarrassing for Google, since the AI search feature had already gone through a long testing period. You'd think the company would have caught these mistakes and hesitated to roll the feature out widely in this state.

More likely, Google knows the problems with LLM search but is rushing to keep up with the OpenAI hype. In doing so, it risks hurting search, its core product. The examples above cover only the medical field because that is where wrong answers are most questionable. There are many more examples where AI Overviews fail spectacularly.

It's also unclear who is responsible for the AI answers. Normally, publishers and authors take responsibility for what they put online. Google has always said it's just a platform, not a media company. That could change now, with legal implications.

Is Google using cheap Reddit content to avoid paying license fees?

Google's inclusion of Reddit in its AI answers isn't a bug, it's intentional. Reddit is key to Google's AI data game plan. The companies recently signed a $60 million deal that gives Google more access to Reddit data for AI usage.

Why? Besides providing wrong information sometimes, Google's "AI Overviews" are legally questionable.

Google's AI takes web content, tweaks it a bit (or hardly at all), and presents it as its own. This clearly affects the content ecosystem. Even Google's CEO Sundar Pichai struggles to justify it.

The Reddit deal may help Google sidestep discussions about licensing deals. It can use crowdsourced answers from Reddit's free user posts to feed its AI. This avoids trouble with publishers, whose content Google has devalued relative to Reddit in search results for months, even though many Reddit posts cite publisher content.

Google has long argued with publishers over the legality of using short free text snippets in search results. Those at least still drive traffic to publisher sites.

Generative AI digs much deeper into the content without benefiting websites. It undermines their business model. "AI Overviews" take this to the extreme and are likely to be challenged in court. Google may see Reddit as a loophole. And if that means some people glue cheese to their pizza, so be it.
