At a presentation in Paris, French AI lab Kyutai unveiled Moshi, an AI assistant that can hold natural conversations with users. The technology will be released as open source.

According to Kyutai, Moshi is the first publicly accessible AI assistant with natural conversational abilities. OpenAI had previously showcased this feature for GPT-4o but has not yet released it.

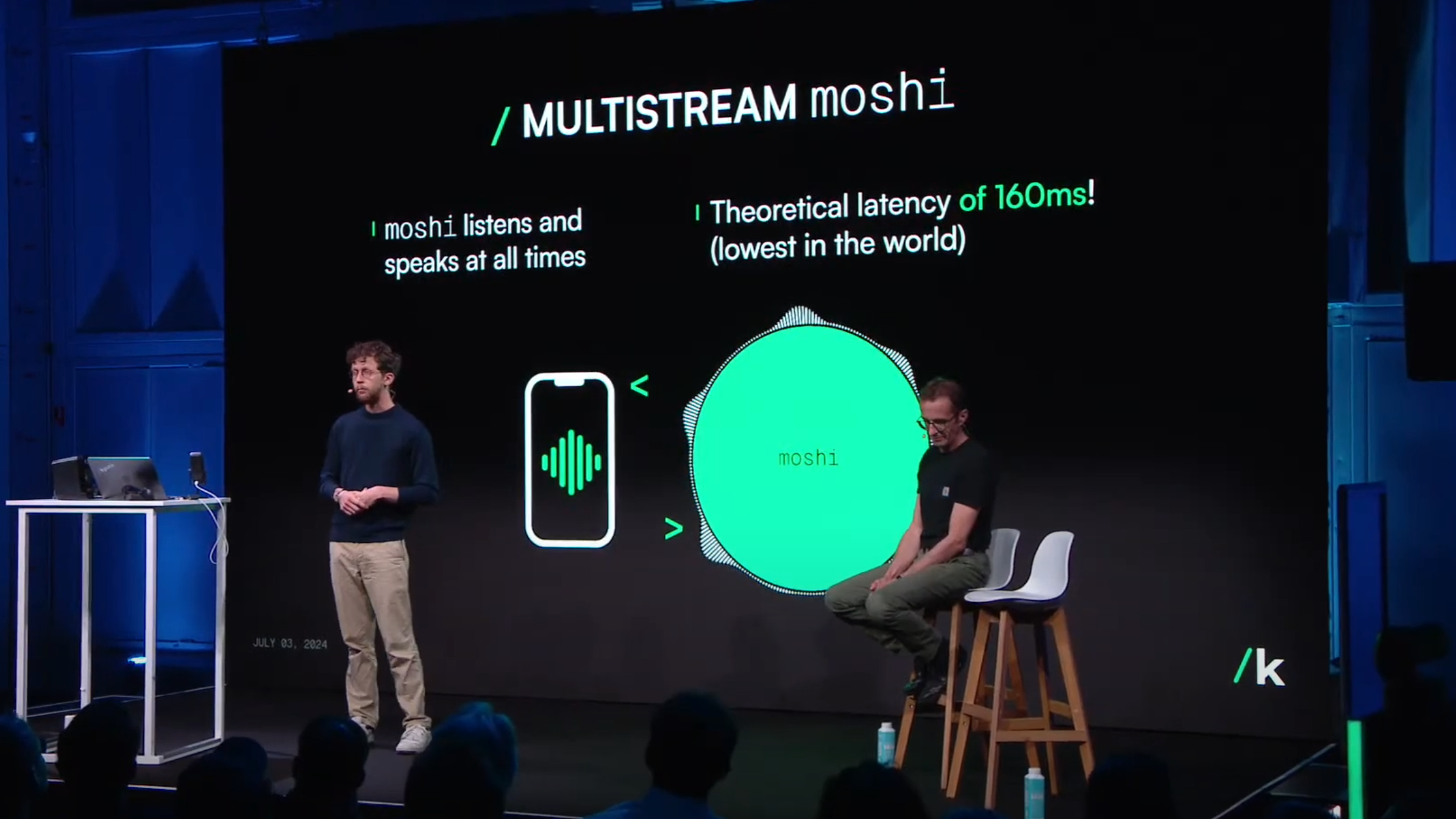

During the presentation, Kyutai CEO Patrick Perez explained that Moshi was developed by a team of eight in just six months. What sets Moshi apart is its ability to speak and listen in real time. Kyutai claims that Moshi has a theoretical latency of only 160 milliseconds, while in practice, it ranges between 200 and 240 milliseconds.

Moshi's architecture is based on a new approach that Kyutai calls an "Audio Language Model." Instead of first transcribing speech into text, as is usual, the model heavily compresses audio data and treats the result like pseudo-words. This lets it work directly with audio and predict speech, making it a natively multimodal model, similar to GPT-4o.
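To make the "pseudo-words" idea concrete, here is a toy sketch of the general technique: audio frames are mapped to the nearest entry in a small codebook (vector quantization), the resulting integer tokens are modeled like words, and the next token is predicted from the previous one. This is not Kyutai's codec or model. The frame size, the hand-written codebook, and the bigram predictor are all hypothetical choices made for brevity; production systems typically learn the codebook with a neural network and use a large transformer for prediction.

```python
from collections import Counter, defaultdict
from typing import Dict, List, Sequence


def frames(signal: Sequence[float], size: int) -> List[List[float]]:
    """Split a waveform into fixed-size frames, dropping any incomplete tail."""
    return [list(signal[i:i + size]) for i in range(0, len(signal) - size + 1, size)]


def encode(frame_list: List[List[float]], codebook: List[List[float]]) -> List[int]:
    """Replace each frame with the index of its nearest codebook vector (a 'pseudo-word')."""
    def dist(a: Sequence[float], b: Sequence[float]) -> float:
        return sum((x - y) ** 2 for x, y in zip(a, b))  # squared Euclidean distance
    return [min(range(len(codebook)), key=lambda k: dist(f, codebook[k])) for f in frame_list]


def decode(tokens: List[int], codebook: List[List[float]]) -> List[float]:
    """Lossy reconstruction: concatenate the codebook vector for each token."""
    return [x for t in tokens for x in codebook[t]]


def train_bigram(tokens: List[int]) -> Dict[int, Counter]:
    """Count which token follows which (a stand-in for a real language model)."""
    counts: Dict[int, Counter] = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    return counts


def predict_next(model: Dict[int, Counter], token: int) -> int:
    """Return the most frequent successor of `token`."""
    successors = model.get(token)
    if not successors:
        raise KeyError(f"token {token} was never seen with a successor")
    return successors.most_common(1)[0][0]


# Hypothetical 3-entry codebook: "silence", "high", "low", each 2 samples long.
codebook = [[0.0, 0.0], [1.0, 1.0], [-1.0, -1.0]]
signal = [0.1, -0.1, 0.9, 1.1, -0.8, -1.2, 0.0, 0.2, 1.0, 0.9, -1.0, -0.9]

tokens = encode(frames(signal, 2), codebook)
print(tokens)                            # [0, 1, 2, 0, 1, 2]
model = train_bigram(tokens)
print(predict_next(model, 2))            # 0
print(decode(tokens[:2], codebook))      # [0.0, 0.0, 1.0, 1.0]
```

Twelve samples shrink to six small integers, and the reconstruction only approximates the input. That loss is the price of the heavy compression, and it is what lets a text-style language model operate on audio.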

Video: Kyutai

For training, Kyutai used various data sources, including human motion data and YouTube videos. First, a text-only model called Helium was trained. The model was then trained jointly on text and audio data, and synthetic dialogues were used to fine-tune its conversational abilities.

Because the underlying language model has only 7 billion parameters, it shows the usual limitations of small models in dialogue. Nevertheless, its language capabilities and speed are impressive and hint at what this technology could achieve with larger, more powerful models.

To give Moshi a consistent voice, Kyutai collaborated with a voice actress named Alice. She recorded monologues and dialogues in various styles, which were then used to train a speech synthesis system.

Moshi: Demo available, open source to follow

Kyutai believes Moshi has great potential to change the way we communicate with machines. The company sees particularly promising applications in accessibility for people with disabilities.

The Moshi demo is now available online; users in the US are advised to use a separate link for better latency. In the coming months, Kyutai plans to release the technology as open source, allowing developers and researchers to examine, adapt, and extend it. A research paper is also set to follow.

Kyutai was founded in 2023 and received 300 million euros last November from French billionaires such as Xavier Niel and Rodolphe Saadé. It has attracted renowned AI researchers such as Yann LeCun and Bernhard Schölkopf as scientific advisors. A major draw for researchers is Kyutai's commitment to open science and the freedom to publish their work: all of Kyutai's models are to be open source, and the researchers plan to release not only the models but also the training source code and documentation of the training process.