Google cuts Gemini 1.5 Flash prices and adds new PDF features

The AI model price war continues as Google cuts prices for its fast Gemini 1.5 Flash by up to 78 percent.

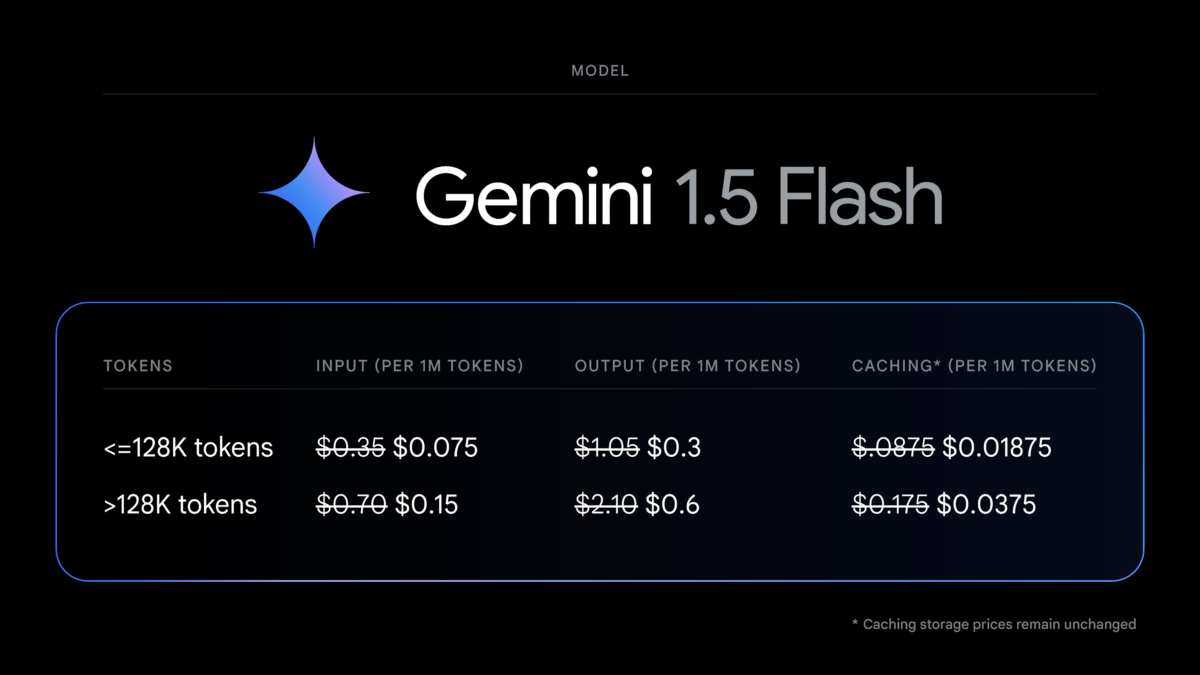

Google announced that input token costs will drop 78 percent to $0.075 per million tokens. Output token costs will drop 71 percent to $0.30 per million tokens for prompts under 128,000 tokens. Similar price reductions apply to longer prompts and caching.

According to Google, Gemini 1.5 Flash is the most popular model for use cases that require high speed and low latency, such as summarization, categorization, and multimodal understanding.

The Gemini API and AI Studio now support better PDF understanding based on text and image analysis. For PDFs that contain graphics, images, or other visual content, the model uses native multimodal processing capabilities.

Expanded language and fine-tuning support

Google has also expanded language support for Gemini 1.5 Pro and Flash models to over 100 languages. This allows developers around the world to work with the models in their preferred language. It should stop the model from blocking responses for using an unsupported language.

In addition, Google is expanding access to Gemini 1.5 Flash fine-tuning. It's now available to all developers through the Gemini API and Google AI Studio. Fine-tuning allows developers to customize base models and improve performance for specific tasks by providing additional data. This reduces the size of the prompt context, lowering latency and cost, and can increase model accuracy.

Google's announcement follows OpenAI's recent price reductions of up to 50 percent for GPT-4o API access. It seems that despite the high cost of developing and running AI models, providers are already engaged in a fierce price war.

AI News Without the Hype – Curated by Humans

As a THE DECODER subscriber, you get ad-free reading, our weekly AI newsletter, the exclusive "AI Radar" Frontier Report 6× per year, access to comments, and our complete archive.

Subscribe nowAI news without the hype

Curated by humans.

- Over 20 percent launch discount.

- Read without distractions – no Google ads.

- Access to comments and community discussions.

- Weekly AI newsletter.

- 6 times a year: “AI Radar” – deep dives on key AI topics.

- Up to 25 % off on KI Pro online events.

- Access to our full ten-year archive.

- Get the latest AI news from The Decoder.