Google expands AI for Search and Lens - and starts placing ads

Google is rolling out new AI-powered capabilities for its search engine and Google Lens. Users can now analyze video content, use voice input, and receive AI-generated summaries of search results.

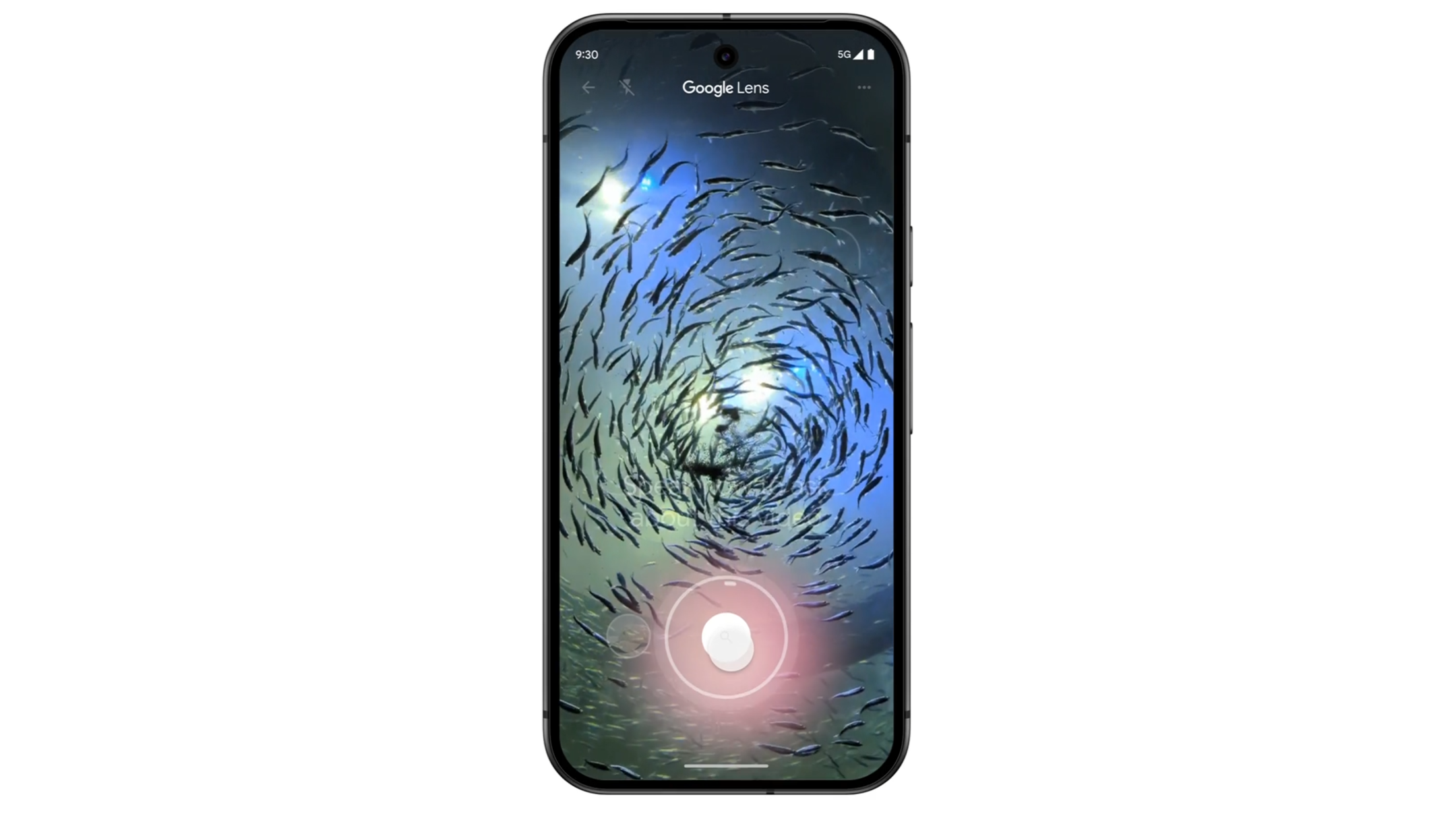

According to Liz Reid, VP of Google Search, Lens now handles nearly 20 billion visual queries monthly. A new feature lets users ask questions about videos they capture with Lens by holding the shutter button in the Google app and speaking. The system then analyzes both the video and question to generate an AI summary.

Users can also now ask voice questions about any photos taken with Lens. This works globally in the Google app on Android and iOS, but only for English queries initially.

Shopping features in Lens are improving too. Users get more detailed product info when capturing items with the camera, including reviews, price comparisons and purchase options. In addition, "Circle to Search" can now identify songs playing in videos, movies, or websites without switching apps.

For general web searches, Google is introducing an AI-organized results page in the US, starting with recipes and food queries on mobile.

Ads coming to AI features

Google also announced it will integrate ads into AI summaries and Lens results. This is active in the US and expanding to other countries soon. Ads will be clearly labeled as "Sponsored."

Shopping ads will appear above and beside Lens visual search results later this year. Google says this will connect advertisers with purchase-ready users. Existing AI-enhanced search, Shopping and Performance Max campaigns will automatically extend to the new formats.

An updated AI Overviews design that has been in testing is now rolling out widely. Google claims it drives more traffic to sources than the previous version, but did not provide specific metrics.
