Google launches Gemini Pro API access

Google has launched developer access to Gemini Pro, its new multimodal AI model that can process both text and image input.

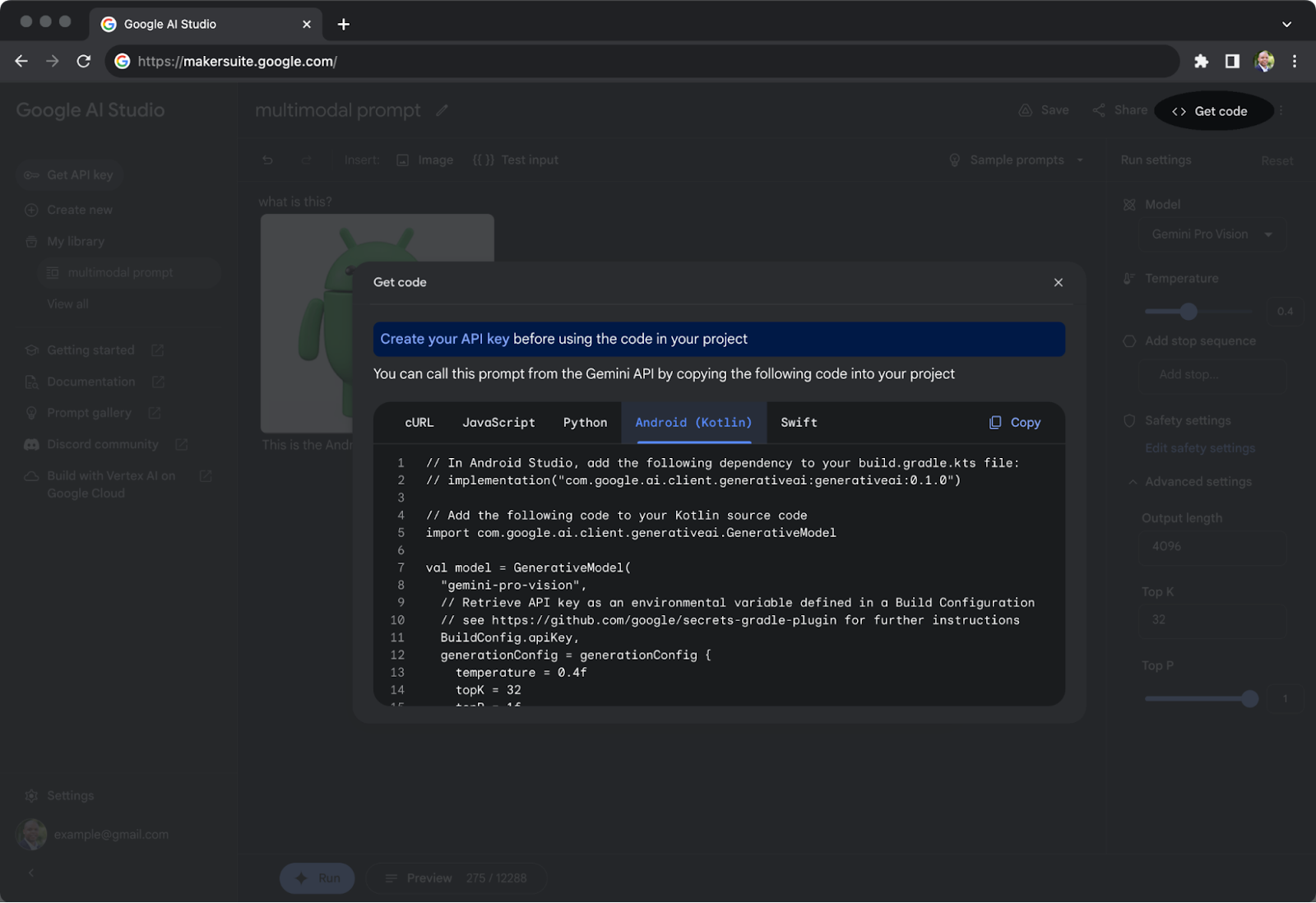

The model runs off-device in Google's data centers and is accessible through the Gemini API. Android developers can integrate it using the Google AI client SDK for Android, which frees them from having to build their own backend infrastructure.

Developers can integrate Gemini Pro, generate API keys, and build AI applications entirely within Google AI Studio. Google says it has also made the Gemini API easier to use by adding a new project template to the latest preview of Android Studio.
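The article does not show what a Gemini API call looks like. As a rough sketch, the Python below sends a text prompt to the public REST endpoint using only the standard library. It assumes the `v1beta` `generateContent` endpoint and the `gemini-pro` model name used at launch; the environment variable `GEMINI_API_KEY` is a hypothetical name for a key generated in Google AI Studio. Android apps would typically go through the Google AI client SDK instead.

```python
import json
import os
import urllib.request

# Assumption: the v1beta REST endpoint and the "gemini-pro" model name from launch.
API_URL = (
    "https://generativelanguage.googleapis.com/v1beta/models/"
    "gemini-pro:generateContent"
)


def build_request_body(prompt: str) -> dict:
    """Build a minimal generateContent payload containing a single text part."""
    return {"contents": [{"parts": [{"text": prompt}]}]}


def extract_text(response: dict) -> str:
    """Return the text of the first part of the first candidate."""
    return response["candidates"][0]["content"]["parts"][0]["text"]


def generate(prompt: str, api_key: str) -> str:
    """POST the prompt to the Gemini API and return the generated text."""
    data = json.dumps(build_request_body(prompt)).encode("utf-8")
    req = urllib.request.Request(
        f"{API_URL}?key={api_key}",  # API key passed as a query parameter
        data=data,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        return extract_text(json.load(resp))


if __name__ == "__main__":
    # GEMINI_API_KEY is a hypothetical variable name; only call the API if it is set.
    key = os.environ.get("GEMINI_API_KEY")
    if key:
        print(generate("Explain what a multimodal model is in one sentence.", key))
    else:
        print("Set GEMINI_API_KEY to call the API.")
```

Keeping payload construction and response parsing in separate functions means both can be checked without a network connection or an API key.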

Last week, Google launched access to Gemini Nano, an on-device model available on select devices through AICore. Nano runs first on Google's Pixel 8 Pro.

The MLLM benchmark wars are on

Gemini Pro performs roughly on par with GPT-3.5, although Google says it outperforms OpenAI's model on six out of eight benchmarks. Google has also upgraded its ChatGPT competitor Bard with Gemini Pro.

Gemini Ultra, Google's GPT-4 competitor, will follow early next year. Google has shown that Ultra can outperform GPT-4 on important benchmarks such as MMLU when using special prompting methods. Microsoft, meanwhile, has shown that GPT-4, also driven by special prompts, is just as capable on these benchmarks, if not more so.

Microsoft also recently introduced Phi-2, a small language model that, like Gemini Nano, is optimized for on-device performance. According to the benchmarks Microsoft presented, Phi-2 outperforms Gemini Nano across the board.

But those are just benchmarks. The more interesting question is how the models behave in real-world scenarios.


- Get the latest AI news from The Decoder.