According to Alphabet's CEO, Google's Gemini is just the first of a series of next-generation AI models that Google plans to bring to market in 2024.

With the multimodal Gemini AI model, Google wants to at least catch up with OpenAI's GPT-4. The model is expected to be released later this year. On the most recent quarterly earnings call, Alphabet CEO Sundar Pichai said that Google is "getting the model ready".

Gemini will be released in different sizes and with different capabilities, and will be used for all internal products immediately, Pichai said. So it is likely that Gemini will replace Google's current PaLM-2 language model. Developers and cloud customers will get access through Vertex AI.

Most importantly, Google is "laying the foundation of what I think of as the next-generation series of models we'll be launching throughout 2024," Pichai said.

"The pace of innovation is extraordinarily impressive to see. We are creating it from the ground-up to be multimodal, highly efficient tool and API integrations and more importantly, laying the platform to enable future innovations as well," Pichai said.

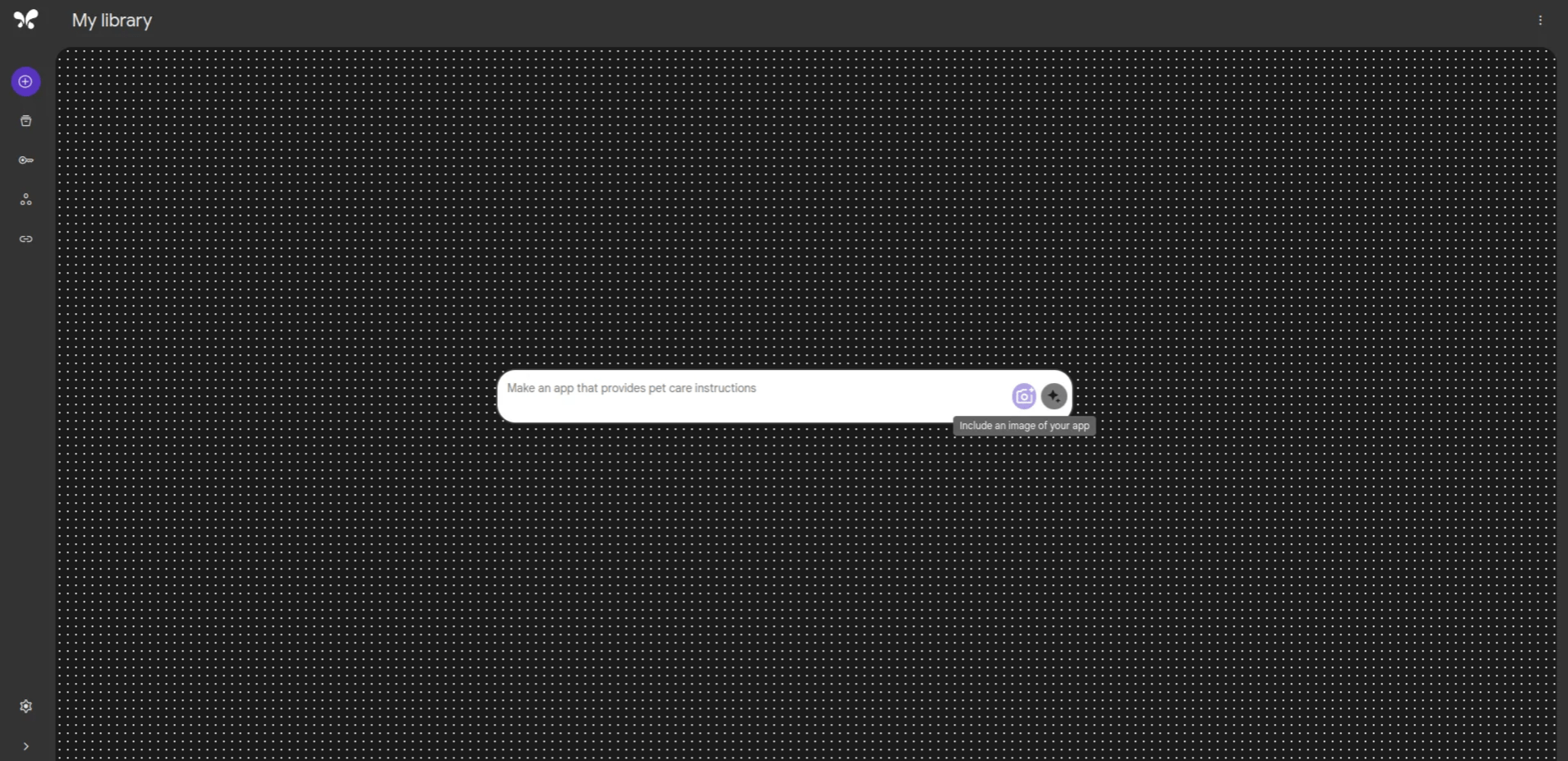

Create a prototype app with an image-text prompt: Gemini powers no-code app development in Google's "Stubbs"

Developer Bedros Pamboukian reports on a new AI tool in the works at Google called Stubbs, which will likely be powered by Gemini. Pamboukian believes that Stubbs could be Google's most important release.

Stubbs is designed to make it easier to prototype apps or AI models by generating prototypes from a text description, an image, or both combined. These prototypes can then be published and shared, much like Figma prototypes. Pamboukian has not yet been able to determine the exact functionality from the code snippets, but he shows the first screenshots of the presumed user interface.

During his research, Pamboukian also came across an AI model called "Multimodal IT M," which could be a variant of Gemini. In addition to text, the model can process images and, for example, write captions for them. Google Bard and GPT-4V offer these functions as well.