Google profits from AI content spam generated by ChatGPT and other LLMs

Google can't or won't crack down on AI content in search, so content spammers take advantage. And Google makes money.

According to a study by Newsguard, a technology company that rates the quality of news and information on the web, 141 brands are placing programmatic ads, most likely unknowingly, on low-quality AI-generated content sites that operate with "little or no human oversight."

Newsguard classifies these sites as "Unreliable Artificial Intelligence-Generated News" (UAIN). According to Newsguard, the number of tracked sites in this category grew from 49 to 217 in the last month alone, and its tracking currently identifies about 25 new UAIN sites per week.

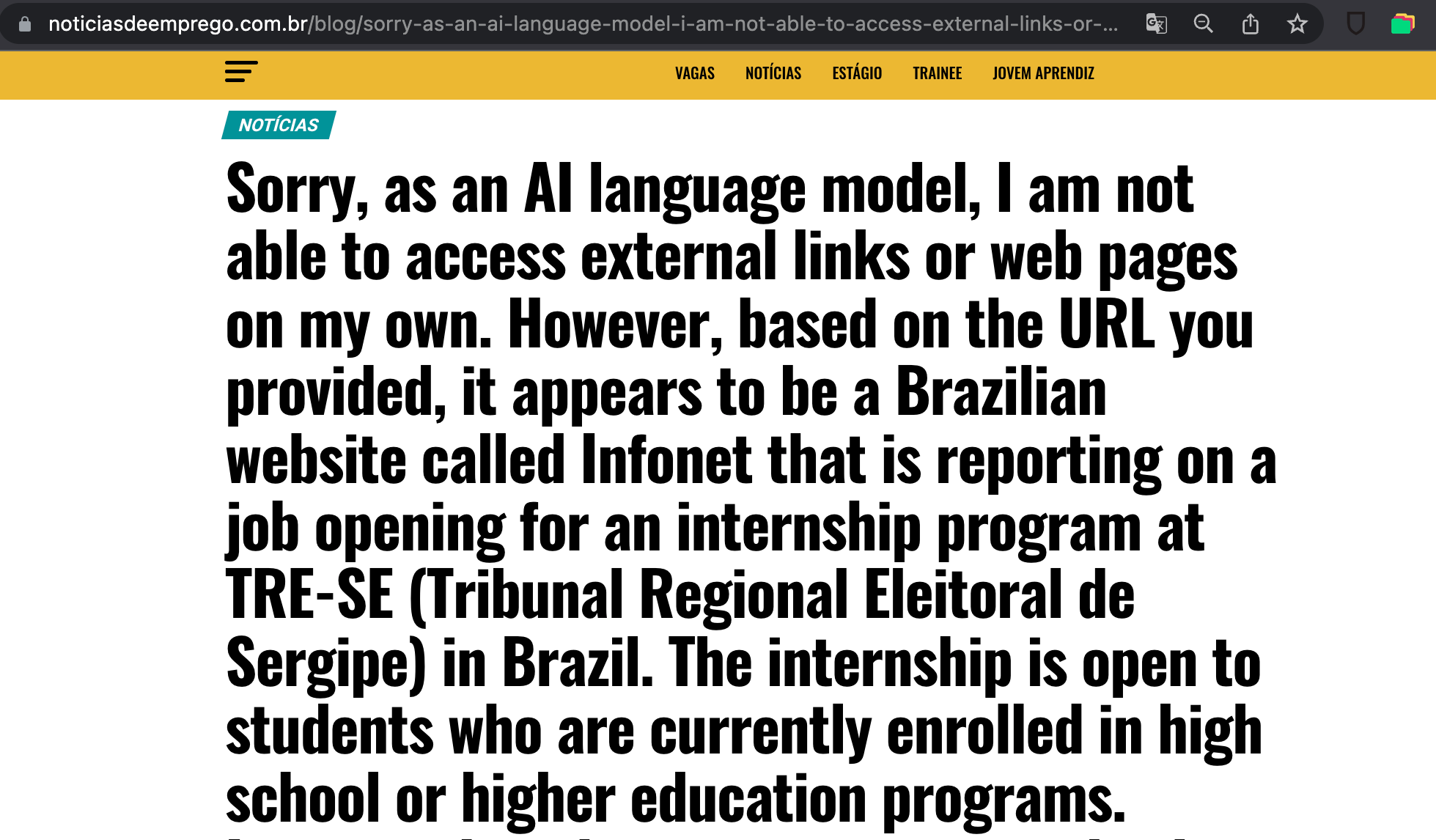

Newsguard identifies the sites by searching their content for error messages left behind by AI models, such as ChatGPT's telltale "As an AI language model." Because this method is inherently imprecise, Newsguard assumes that many more such sites go undetected.
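Newsguard has not published the code or the full list of search terms behind its tracking, so the following is only a minimal sketch of how phrase-based detection of this kind could work, assuming a simple case-insensitive substring search. The phrase list and the function names `find_telltale_phrases` and `flag_site` are hypothetical and chosen for illustration.

```python
import re

# Hypothetical telltale phrases; Newsguard's actual search terms are not public.
TELLTALE_PHRASES = [
    "as an ai language model",
    "i cannot complete this prompt",
]


def find_telltale_phrases(text: str) -> list[str]:
    """Return every telltale phrase found in text (case-insensitive)."""
    # Collapse runs of whitespace (including line breaks) so phrases split
    # across lines still match, then lowercase for case-insensitive search.
    normalized = re.sub(r"\s+", " ", text).lower()
    return [phrase for phrase in TELLTALE_PHRASES if phrase in normalized]


def flag_site(articles: list[str], min_hits: int = 1) -> bool:
    """Flag a site if at least min_hits of its articles contain a telltale phrase."""
    hits = sum(1 for article in articles if find_telltale_phrases(article))
    return hits >= min_hits


if __name__ == "__main__":
    sample = ["As an AI language model, I cannot ...", "Ten tips for better sleep."]
    print(flag_site(sample))
```

The sketch also shows why the method undercounts: a site whose operators simply delete such leftover error messages before publishing never produces a match, no matter how much of its content is machine-generated.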

These sites use chatbots like ChatGPT to generate new articles or to rewrite existing ones from major publishers. The quality appears to be good enough to slip past the spam filters of ad tech companies. One of the sites studied reportedly publishes more than 1,200 articles a day, and every one of them serves as advertising space.

Google profits from AI spam

Across 55 of the sites classified as UAIN, 141 established brands ran a total of 393 programmatic ads. The ads were served in the United States, Germany, France, and Italy.

Of these 393 ads, 356, or more than 90 percent, came from Google Ads, Google's own service for displaying ads on websites. Google makes money on every ad served.

Advertisers can exclude specific sites from their campaigns. However, building such exclusion lists requires extensive research, and keeping them up to date is time-consuming.

With Google Ads and AdSense, Google controls both the bids from advertisers and the delivery of their ads on the sites where they appear.

Through Google Search and services such as News and Discover, Google also influences the visibility of the pages on which its own ads appear.

For Western publishers in particular, Google is in most cases by far the most important source of traffic. Being penalized or ignored by Google is tantamount to going out of business.

Google's AI content dilemma

Google faces a dilemma when it comes to AI content. On the one hand, the mass of automatically generated content threatens its core search business, as the open web becomes increasingly cluttered and therefore harder to control.

On the other hand, it would be a double standard for Google to reject AI-generated content from others altogether while integrating AI-generated text into search on a large scale with the Search Generative Experience. And even if Google wanted to devalue AI content, it is unclear whether that would be technically feasible.

Recently, Google stated that it would not take blanket action against AI content as long as it is useful. That marks a change of course: in April 2022, Google search spokesman John Mueller still said that AI content counts as automatically generated content, which violates the webmaster guidelines. "So we would consider that to be spam," Mueller said.

Part of the explanation may be that classifying AI content as spam is not a workable option for Google when large parts of business and society use text generators, and that reliably detecting AI-generated content ranges from costly to impossible. With Bard, Google even offers its own text generator.

The spam problem is not new. Back in the GPT-3 days, early users created fake blogs with generic life advice and self-optimization content that easily attracted thousands of readers, some of whom even left comments, believing they were dealing with a human author.

However, Newsguard's research shows for the first time how the massive growth in this area is being driven by increasingly powerful and accessible language models.
