Google's ImageInWords could boost everything from image search to text-to-image AI

Key Points

- With ImageInWords (IIW), Google is developing a system for highly detailed image descriptions. It combines object-level AI descriptions with human refinement and outperforms previous approaches on benchmarks.

- Human annotators refine the AI-generated object-level descriptions using a comprehensive set of guidelines that cover properties such as function, shape, size, color, pattern, texture, and the relationships between objects.

- In downstream tests, IIW descriptions performed best, including on tasks that require a deeper understanding of images. Google sees potential for a wide range of applications and plans to develop IIW further while reducing the amount of human work required.

When AI and humans work together, more detailed and accurate image descriptions emerge. This method could greatly improve the accuracy of image models.

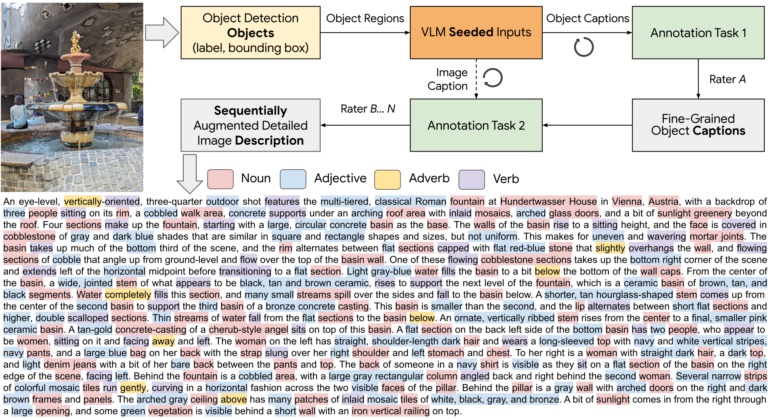

A Google research team has developed ImageInWords (IIW), a system designed to take image descriptions to a new level. IIW combines precise instructions for human workers with a step-by-step description process. The result is extremely detailed image descriptions that surpass previous approaches in benchmarks.

Current AI systems for image processing are often trained on enormous amounts of data scraped from the internet. However, this data is frequently inaccurate and relies on simple alt text rather than meaningful image descriptions, which limits what these systems can do.

Previous attempts to create higher-quality image descriptions also had weaknesses: whether the descriptions were written by humans or generated by AI models, they suffered from subjective biases or hallucinations.

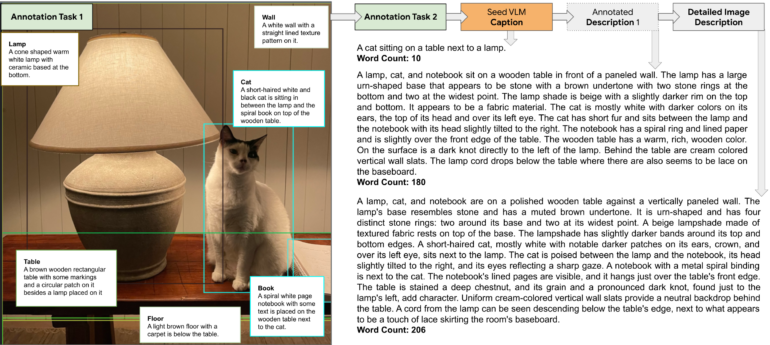

IIW directly addresses these challenges. First, the system detects the individual objects in an image. An AI model then generates an initial description for each object, which serves as a starting point for human annotators.

Humans should describe images "like a painter"

The human annotators refine and expand the object-related descriptions, ensuring that the descriptions are both comprehensive and accurate.

The annotators are asked to operate as if they are instructing a painter to paint with their words and only include details that can be deduced from visual cues, erring on the side of higher precision. Unnecessary fragmentation of sentences should be avoided to compose in a flowy coherent style avoiding the use of filler phrases like: “In this image,” “we can see,” “there is a,” “this is a picture of,” since they add no visual detail and come at a cost of verbosity.

From the paper
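To make the filler-phrase rule concrete, here is a minimal Python sketch that flags the four phrases named in the guideline quoted above. The function name `find_filler_phrases` and the case-insensitive, word-boundary matching are assumptions for illustration only. The paper describes a rule for human annotators, not a tool.

```python
import re

# Filler phrases named in the IIW annotation guideline.
FILLER_PHRASES = ["in this image", "we can see", "there is a", "this is a picture of"]


def find_filler_phrases(description: str) -> list[str]:
    """Hypothetical helper: return the filler phrases found in a description.

    Matching is case-insensitive and uses word boundaries, so "there is a"
    does not match inside "there is an".
    """
    found = []
    for phrase in FILLER_PHRASES:
        pattern = r"\b" + re.escape(phrase) + r"\b"
        if re.search(pattern, description, flags=re.IGNORECASE):
            found.append(phrase)
    return found


if __name__ == "__main__":
    draft = "In this image, we can see a red wooden chair tilted against a white wall."
    print(find_filler_phrases(draft))  # ['in this image', 'we can see']
```

A checker like this could only catch the listed phrases. Judging whether a sentence adds real visual detail would still be up to the annotator.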

The complete annotation guidelines can be found in section 7.1 of the paper's appendix and span several pages. Among other things, annotators are asked to pay attention to the following properties of each image component:

- Function: Purpose of the component or the role it plays in the image

- Shape: Specific geometric shape, organic, or abstract

- Size: Large, small, or relative size to other objects

- Color: Specific color with nuances like solid or variegated

- Design/Pattern: Solid, floral, or geometric

- Texture: Smooth, rough, bumpy, shiny, or dull

- Material: Wooden, metallic, glass, or plastic

- Condition: Good, bad, old, new, damaged, or worn out

- Opacity: Transparent, translucent, or opaque

- Orientation: Upright, horizontal, inverted, or tilted

- Location: Foreground, middle ground, or background

- Relationship to other components: Interactions or relative spatial arrangement

- Text written on objects: Where and how it’s written, font and its attributes, single/multi-line, or multiple pieces of individual text
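The property list works like a per-component checklist. The following Python sketch models it as a dataclass so that unfilled properties can be listed. The class and field names mirror the list above but are hypothetical, since the paper does not prescribe a data structure. A `None` may also simply mean that a property does not apply to that component.

```python
from dataclasses import dataclass, fields
from typing import Optional


@dataclass
class ComponentAttributes:
    """Hypothetical per-component checklist based on the IIW property list.

    Each field holds free-text notes; None means the property has not been
    described yet (or does not apply to this component).
    """
    function: Optional[str] = None
    shape: Optional[str] = None
    size: Optional[str] = None
    color: Optional[str] = None
    design_pattern: Optional[str] = None
    texture: Optional[str] = None
    material: Optional[str] = None
    condition: Optional[str] = None
    opacity: Optional[str] = None
    orientation: Optional[str] = None
    location: Optional[str] = None
    relationships: Optional[str] = None
    text: Optional[str] = None

    def missing(self) -> list[str]:
        """Return the names of properties that are still empty, in list order."""
        return [f.name for f in fields(self) if getattr(self, f.name) is None]


if __name__ == "__main__":
    mug = ComponentAttributes(
        function="holds coffee",
        shape="cylindrical",
        color="matte white",
        material="ceramic",
        location="foreground, left of the laptop",
    )
    print(mug.missing())
    # ['size', 'design_pattern', 'texture', 'condition', 'opacity',
    #  'orientation', 'relationships', 'text']
```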

Next, a vision-language model (VLM) generates a description of the entire image. Annotators combine this draft with the refined object-level descriptions to write a complete, coherent image description.

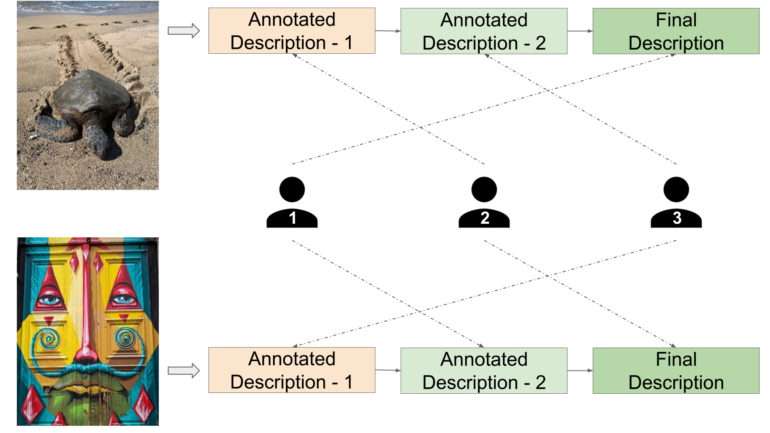

The initial AI-generated descriptions in IIW give human annotators a starting point and accelerate the process. IIW also uses a step-by-step approach where annotators build on previous descriptions, leading to higher-quality results in less time.
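The sequence described in this article can be outlined as a simple pipeline: detect objects, seed each with a model-written description, let annotators refine those descriptions one after another, draft an image-level description with a VLM, and merge everything in a final human pass. In this Python sketch, the detector, the captioning models, and the human steps are hypothetical stand-ins passed in as plain functions. None of these names come from the paper, and the actual IIW workflow may differ in its details.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class ObjectDescription:
    label: str
    text: str


# Hypothetical stand-ins: in practice these would be models or human steps.
Detector = Callable[[bytes], list[str]]                   # image -> object labels
ObjectCaptioner = Callable[[bytes, str], str]             # image, label -> seed text
ImageCaptioner = Callable[[bytes], str]                   # image -> image-level draft
Annotator = Callable[[str, str], str]                     # label, text -> edited text
Composer = Callable[[str, list[ObjectDescription]], str]  # draft, objects -> final


def refine_sequentially(label: str, seed: str, annotators: list[Annotator]) -> str:
    """Each annotator edits the previous annotator's output, not the raw seed."""
    text = seed
    for annotate in annotators:
        text = annotate(label, text)
    return text


def describe_image(
    image: bytes,
    detect: Detector,
    caption_object: ObjectCaptioner,
    caption_image: ImageCaptioner,
    annotators: list[Annotator],
    compose: Composer,
) -> str:
    # Step 1: detect objects and seed each one with a model-written description.
    seeds = [ObjectDescription(label, caption_object(image, label)) for label in detect(image)]
    # Step 2: human annotators refine each object description in turn.
    refined = [
        ObjectDescription(o.label, refine_sequentially(o.label, o.text, annotators))
        for o in seeds
    ]
    # Step 3: a vision-language model drafts an image-level description,
    # which the final human pass (compose) merges with the object descriptions.
    draft = caption_image(image)
    return compose(draft, refined)


if __name__ == "__main__":
    result = describe_image(
        b"",  # placeholder for real image bytes
        detect=lambda img: ["mug", "table"],
        caption_object=lambda img, label: f"a {label}",
        caption_image=lambda img: "A mug on a table.",
        annotators=[lambda label, text: text + " (refined)"],
        compose=lambda draft, objs: draft + " " + "; ".join(o.text for o in objs),
    )
    print(result)  # A mug on a table. a mug (refined); a table (refined)
```

Passing each stage in as a function keeps the ordering of the process visible while leaving open which models or which people perform each step.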

Google's method often beats others in tests

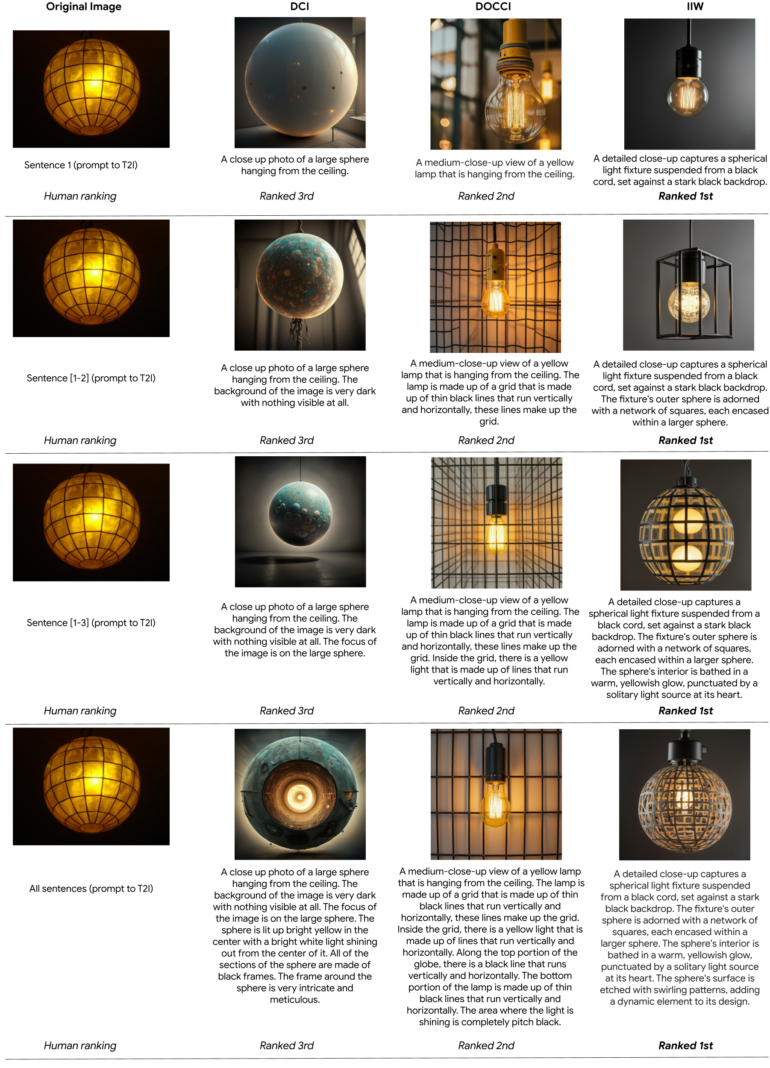

In downstream tests in which text-to-image models regenerated the input images using the descriptions as prompts, IIW descriptions performed best in human evaluations, regardless of description length. IIW descriptions also excelled in tasks requiring a deeper understanding of image content, as they contained the details needed to distinguish accurate from inaccurate information about an image.

Google plans to improve IIW further, expand it to other languages, and reduce the amount of human labor involved. IIW could influence a wide range of AI applications, from image search to visual question answering and synthetic data generation, which could in turn help improve text-to-image models.

While current models such as Midjourney v6, SDXL, or Firefly 3 can already produce images of astonishingly high quality, prompt following (how precisely a model implements the text input) still leaves room for improvement. IIW could be an important building block here, benefiting not only Google's own models such as Imagen but also those of other companies.
