Google's new open TranslateGemma models bring translation for 55 languages to laptops and phones

Key Points

- Google has released TranslateGemma, a family of open translation models supporting 55 languages that outperform larger general-purpose base models.

- Despite their specialization, the models retain broader capabilities: they can read and translate text in images and respond to general instructions.

- TranslateGemma comes in three sizes (4B, 12B, and 27B parameters), covering hardware from phones and laptops to cloud servers.

Google's new TranslateGemma models show that smaller can be better: a 12-billion-parameter version outperforms a model more than twice its size. The models support 55 languages.

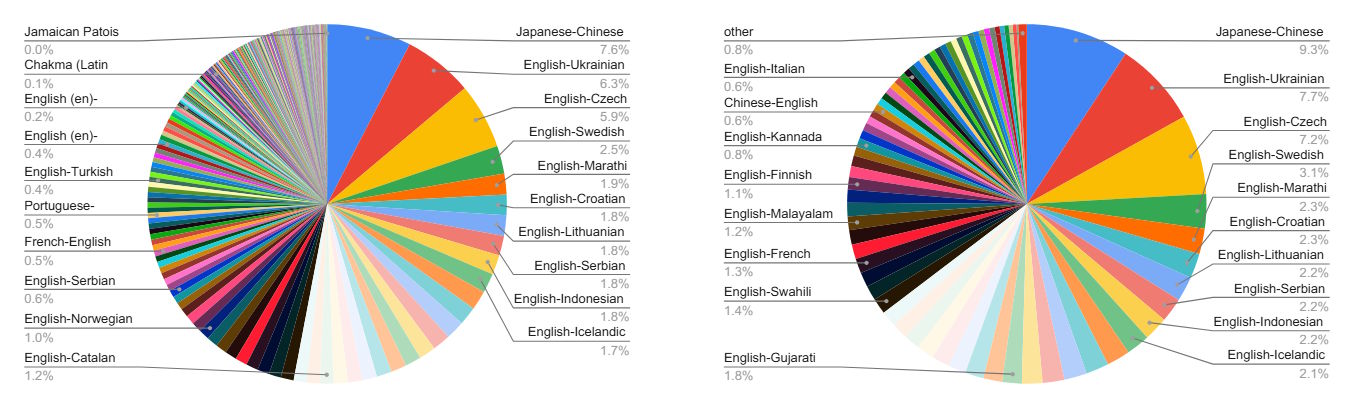

Google has released TranslateGemma in three sizes: a 4-billion-parameter model optimized for mobile devices, a 12B model designed for consumer laptops, and a 27B model for cloud servers that runs on a single H100 GPU or TPU.
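Whether a given size fits a device depends largely on how much memory its weights occupy. The back-of-the-envelope sketch below assumes the nominal parameter counts, weights only (no activations or KV cache), generic bytes-per-parameter values, and an 80 GB H100; none of these figures come from Google's release.

```python
# Bytes needed to store one parameter at common precisions (assumed, generic values).
BYTES_PER_PARAM = {"bf16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(params_billions: float, dtype: str) -> float:
    """Weights-only memory in GB (1 GB = 1e9 bytes); ignores activations and KV cache."""
    # billions of parameters * bytes per parameter = billions of bytes = GB
    return params_billions * BYTES_PER_PARAM[dtype]


if __name__ == "__main__":
    H100_MEMORY_GB = 80  # assumption: the 80 GB H100 variant
    for size in (4, 12, 27):
        estimates = ", ".join(
            f"{dtype}: {weight_memory_gb(size, dtype):.1f} GB" for dtype in BYTES_PER_PARAM
        )
        fits = weight_memory_gb(size, "bf16") < H100_MEMORY_GB
        print(f"{size}B -> {estimates} (bf16 fits on one H100: {fits})")
```

By this rough estimate the 27B model needs about 54 GB in bf16, which leaves headroom on an 80 GB card, while a 4-bit 4B model needs about 2 GB. Real footprints are higher once the runtime, activations, and context cache are counted.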

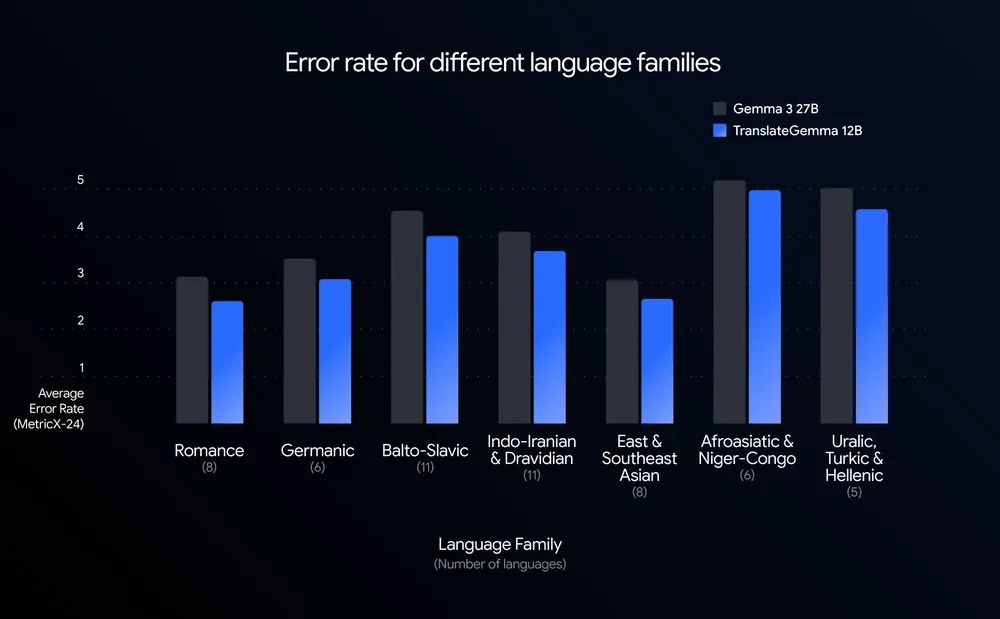

Google measured quality using MetricX, a metric that tracks translation errors: lower scores mean fewer mistakes. The 12B TranslateGemma scores 3.60, beating the 27B Gemma 3 base model's 4.04. Compared with its own 12B base model (4.86), the score drops by about 26 percent.
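The percentage follows from the relative drop in a lower-is-better score. A small sketch using only the MetricX values quoted above, treating the score drop as an error reduction the way the article does:

```python
def relative_reduction(baseline: float, new: float) -> float:
    """Fractional drop in a lower-is-better score such as MetricX."""
    return (baseline - new) / baseline


# MetricX scores quoted in the article (lower is better).
translategemma_12b = 3.60
gemma3_12b_base = 4.86
gemma3_27b_base = 4.04

if __name__ == "__main__":
    print(f"vs. 12B base: {relative_reduction(gemma3_12b_base, translategemma_12b):.1%}")
    print(f"vs. 27B base: {relative_reduction(gemma3_27b_base, translategemma_12b):.1%}")
```

That works out to (4.86 - 3.60) / 4.86, roughly 26 percent against the 12B base model, and about 11 percent against the 27B base model.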

The improvements hold across all 55 language pairs tested. Low-resource languages see the biggest gains: English-Icelandic error rates drop by more than 30 percent, while English-Swahili improves by about 25 percent.

Two-stage training distills Gemini knowledge into smaller models

The performance boost comes from a two-stage training process. First, the models are fine-tuned on human-translated parallel data and on synthetic parallel data generated with Gemini. Then reinforcement learning optimizes translation quality: multiple automatic evaluation models check outputs without needing human reference translations. A separate model judges whether the text sounds natural, as if written by a native speaker.
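The reinforcement learning stage needs a single reward per output, built from several reference-free judges. The sketch below shows one plausible way to combine them, a weighted average, and covers only the reward step, not the policy update. The aggregation scheme, the weights, and both scorer functions are hypothetical toy stand-ins for Google's learned evaluation models.

```python
from dataclasses import dataclass
from typing import Callable, Sequence

# A reference-free scorer sees only the source and the candidate translation
# (no human reference) and returns a score in [0, 1], higher is better.
Scorer = Callable[[str, str], float]


@dataclass
class RewardComponent:
    name: str
    scorer: Scorer
    weight: float


def combined_reward(source: str, translation: str,
                    components: Sequence[RewardComponent]) -> float:
    """Weighted average of reference-free scores, usable as a scalar RL reward."""
    total_weight = sum(c.weight for c in components)
    if total_weight <= 0:
        raise ValueError("component weights must sum to a positive value")
    weighted = sum(c.weight * c.scorer(source, translation) for c in components)
    return weighted / total_weight


# Hypothetical toy stand-ins for learned evaluation models.
def length_ratio_score(source: str, translation: str) -> float:
    """Crude quality proxy: penalize outputs far shorter or longer than the source."""
    if not source or not translation:
        return 0.0
    ratio = len(translation) / len(source)
    return max(0.0, 1.0 - abs(1.0 - ratio))


def naturalness_stub(source: str, translation: str) -> float:
    """Placeholder for a 'reads like a native speaker' judge; here it only
    flags output that merely copies the source text."""
    return 0.0 if translation.strip() == source.strip() else 1.0


if __name__ == "__main__":
    components = [
        RewardComponent("quality_estimate", length_ratio_score, weight=1.0),
        RewardComponent("naturalness", naturalness_stub, weight=0.5),
    ]
    print(combined_reward("Guten Morgen", "Good morning", components))
    print(combined_reward("Guten Morgen", "Guten Morgen", components))
```

In a real pipeline each scorer would be a trained model, and the design choice worth noting is that no component ever reads a human reference translation.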

To keep the models versatile despite their specialization, the training mix includes 30 percent general instruction data. This means TranslateGemma can also work as a chatbot.
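A 30 percent share of general instruction data can be realized by sampling. This minimal sketch assumes the share is measured in examples rather than tokens, which the article does not specify; the function name and example data are hypothetical.

```python
import random
from itertools import islice
from typing import Iterator, Sequence, TypeVar

T = TypeVar("T")


def mixed_stream(translation_data: Sequence[T], general_data: Sequence[T],
                 general_fraction: float = 0.30, seed: int = 0) -> Iterator[T]:
    """Endlessly yield training examples, drawing from general_data with
    probability general_fraction and from translation_data otherwise."""
    if not 0.0 <= general_fraction <= 1.0:
        raise ValueError("general_fraction must be between 0 and 1")
    rng = random.Random(seed)  # seeded so the mix is reproducible
    while True:
        pool = general_data if rng.random() < general_fraction else translation_data
        yield rng.choice(pool)


if __name__ == "__main__":
    translation_examples = ["en->de: Hello", "en->sw: Thank you"]
    general_examples = ["Summarize this paragraph.", "Write a haiku about rain."]
    batch = list(islice(mixed_stream(translation_examples, general_examples), 10_000))
    share = sum(ex in general_examples for ex in batch) / len(batch)
    print(f"general-instruction share: {share:.3f}")
```

Sampling per example keeps the ratio stable throughout training rather than front-loading one kind of data.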

Human evaluation by professional translators largely confirms the automated measurements, with one exception: Japanese-to-English translations showed a decline that Google attributes to errors with proper names.

Models retain their multimodal capabilities

The models keep Gemma 3's multimodal capabilities intact: they can translate text in images even without specific training for this task. Tests on the Vistra benchmark show that text translation improvements carry over to image-based translation as well.

For best results, Google recommends instructing the model to act as a "professional translator" that accounts for cultural nuances. The models are available on Kaggle and Hugging Face.
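A prompt in that spirit can be assembled with a small helper. The wording below is a hypothetical illustration, not Google's official template (the model cards on Kaggle and Hugging Face carry the recommended text), and model loading is left out to avoid assuming a specific inference API.

```python
def build_translation_prompt(text: str, source_lang: str, target_lang: str) -> str:
    """Wrap text in a 'professional translator' instruction (illustrative wording only)."""
    instruction = (
        f"You are a professional {source_lang} to {target_lang} translator. "
        f"Convey the meaning and cultural nuances of the original {source_lang} text "
        f"using natural {target_lang} grammar and vocabulary. "
        f"Output only the {target_lang} translation, without explanations."
    )
    # A blank line separates the instruction from the text to translate.
    return f"{instruction}\n\n{text}"


if __name__ == "__main__":
    print(build_translation_prompt("Habari za asubuhi", "Swahili", "English"))
```

The returned string would be sent as the user message to whatever runtime hosts the model.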

TranslateGemma uses the Gemma Terms of Use, Google's license for the Gemma model family. It allows commercial use, modification, and redistribution, but redistributors must pass the terms on, and uses listed in Google's Prohibited Use Policy are not allowed. Because of these added restrictions, the license isn't a traditional open-source license; Google calls the models "open weights" instead. The company claims no rights to generated translations.

Google expands Gemma family as open model competition heats up

TranslateGemma is the latest addition to Google's growing Gemma family. It builds on Gemma 3, which Google released in spring 2025 as a powerful open weights model for consumer hardware. Since then, Google has developed specialized variants for different use cases: MedGemma for medical image analysis, FunctionGemma for local device control, Gemma 3 270M for low-resource environments, and Gemma 3n for mobile devices.

In the expanding open models market, Google is positioning itself against Chinese competitors like Alibaba (Qwen), Baidu, and DeepSeek, which have significantly grown their presence over the past year. Meanwhile, US labs like OpenAI (which just released its own translation tool, ChatGPT Translate) and Anthropic continue to focus on closed systems.
