Google shows Spotlight, a multimodal AI model that can understand mobile interfaces. The goal is to improve accessibility and automation.

Google has previously introduced specialized models that can summarize screen content, recognize actionable elements, or execute simple commands. According to Google, these models primarily used metadata from mobile websites in addition to visual data, which is not always available and often incomplete.

With Spotlight, the Google team is training a multimodal AI model that works exclusively with visual information.

Google's Spotlight uses Vision Transformer and T5 language model

Spotlight is based on a pre-trained Vision Transformer and a pre-trained T5 language model. It is trained by Google on two datasets totaling 2.5 million mobile UI screens and 80 million web pages. This allows the AI model to benefit from the general capabilities of large models.

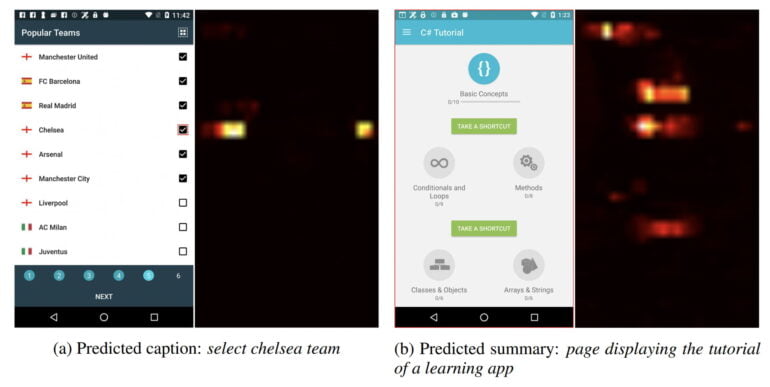

In addition, Google uses an MLP mesh to represent individual regions on a screenshot and extract them for processing, for example, to detect clickable buttons.

Video: Google

The team trains two different model sizes (619 million and 843 million parameters) for each task tested individually and once for all tasks. Tasks include describing individual elements, the entire visible page, or detecting controls.

The specialized Spotlight models significantly outperform all of Google's older expert UI models. The model trained on all four tasks drops in performance, but is still competitive, the team said.

Google wants to scale Spotlight

In the visualizations, Google shows that Spotlight pays attention to both the buttons and the text, such as for the "Select the Chelsea team" command in the screenshot. The multimodal approach works.

Compared to other multimodal models such as Flamingo, Spotlight is relatively small. The larger of the two Spotlight models already performs better than the smaller one. The model could therefore be scaled further and become even better.

"Spotlight can be easily applied to more UI tasks and potentially advance the fronts of many interaction and user experience tasks," the team writes.

In the future, Google's UI model could form the basis for reliable voice control of apps and mobile websites on Android smartphones, or take on other automation tasks. The startup Adept showed what this could look like in the web browser last year with the Action Transformer. Read more in Google's Spotlight blog post.