Under certain circumstances, AI models that have only memorized their training data can suddenly learn to generalize beyond it. In AI research, this phenomenon is called "grokking," and Google researchers are now offering insight into recent findings.

During training, AI models sometimes seem to suddenly "understand" a problem after having only memorized the training data. In AI research, this phenomenon is called "grokking," after "grok," a neologism coined by American science fiction author Robert A. Heinlein and used primarily in computer culture to describe a kind of deep understanding.

When grokking occurs, a model suddenly moves from simply reproducing its training data to discovering a generalizable solution. Instead of a stochastic parrot, you may get an AI system that actually builds a model of the problem to make predictions.

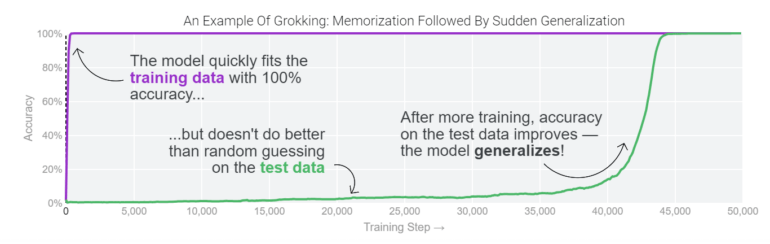

Researchers first noticed grokking in 2021 while training small models on algorithmic tasks. The models would fit the training data by memorizing it but perform no better than chance on test data. After prolonged training, test accuracy suddenly improved as the models began to generalize beyond the training data. Since then, the phenomenon has been reproduced in various settings, such as Othello-GPT.
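To make the difference between memorizing and generalizing concrete, here is a minimal sketch in Python. It assumes modular addition, (a + b) mod P, as the algorithmic task, with a hypothetical modulus P = 97 and a hypothetical 30% training split. The two "models" are hand-written stand-ins, not neural networks and not the researchers' setup: `Memorizer` (hypothetical) stores the training pairs in a lookup table and guesses at random otherwise, while `Generalizer` (hypothetical) applies the underlying rule. They represent the two end states that grokking moves between; the sketch does not simulate the training dynamics themselves.

```python
import random

P = 97  # hypothetical modulus for the toy task (a + b) mod P


def label(a: int, b: int) -> int:
    """Ground-truth answer for the toy algorithmic task."""
    return (a + b) % P


def make_split(train_fraction: float, seed: int = 0):
    """Shuffle all P*P input pairs and split them into train and test sets."""
    pairs = [(a, b) for a in range(P) for b in range(P)]
    random.Random(seed).shuffle(pairs)
    cut = int(train_fraction * len(pairs))
    return pairs[:cut], pairs[cut:]


class Memorizer:
    """Hypothetical stand-in for a memorizing model: a lookup table of
    training examples, with a random guess for any unseen input."""

    def __init__(self, train, seed: int = 0):
        self.table = {(a, b): label(a, b) for a, b in train}
        self.rng = random.Random(seed)

    def predict(self, a: int, b: int) -> int:
        if (a, b) in self.table:
            return self.table[(a, b)]
        return self.rng.randrange(P)  # chance-level guess on unseen inputs


class Generalizer:
    """Hypothetical stand-in for a generalizing model: it has 'found'
    the underlying rule, so it is correct on every input."""

    def predict(self, a: int, b: int) -> int:
        return (a + b) % P


def accuracy(model, pairs) -> float:
    """Fraction of pairs the model answers correctly."""
    correct = sum(model.predict(a, b) == label(a, b) for a, b in pairs)
    return correct / len(pairs)


train, test = make_split(train_fraction=0.3)
memorizer = Memorizer(train)
generalizer = Generalizer()

for name, model in [("memorizer", memorizer), ("generalizer", generalizer)]:
    print(f"{name}: train={accuracy(model, train):.2f} test={accuracy(model, test):.2f}")
```

Both stand-ins score perfectly on the training pairs, so training accuracy alone cannot tell them apart. Only the held-out pairs reveal the difference: the memorizer lands near chance (about 1/P), while the generalizer stays perfect. A grokking model is one that, during training, shifts from behaving like the first to behaving like the second.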

Google team shows that "grokking" is a "contingent phenomenon"

Grokking has generated considerable interest among AI researchers because it suggests that models follow different learning dynamics when they memorize and when they generalize. Understanding these dynamics could provide important insights into how neural networks learn.

Although grokking was initially observed in small models trained on a single task, recent work suggests that it can also occur in larger models and, in some cases, can be reliably predicted, according to the Google researchers. However, detecting such grokking dynamics in large models remains a challenge.

In a blog post, the Google researchers provide a visual look at the phenomenon and the current state of research. The team trained more than 1,000 small models on algorithmic tasks with different hyperparameters and shows that grokking is a "contingent phenomenon": it "goes away if model size, weight decay, data size and other hyper parameters aren’t just right."

Grokking 'grokking' could improve large AI models

According to the team, many questions remain unanswered, such as which model constraints reliably cause grokking, why models initially prefer to memorize the training data, and to what extent the methods used to study the phenomenon in small models can be applied to large models.

Advances in understanding grokking could inform the design of large AI models in the future so that they reliably and quickly generalize beyond the training data.