Huawei pushes back on AI model plagiarism claims

Huawei has publicly denied reports that its Pangu Pro MoE open-source model is a "recycled product" based on work from Alibaba.

In an official statement from Huawei's Noah's Ark Lab, the company says the Pangu Pro MoE model was developed in-house and trained from scratch on its Ascend hardware platform. Huawei insists the model was not created by continuing the training of another provider's model.

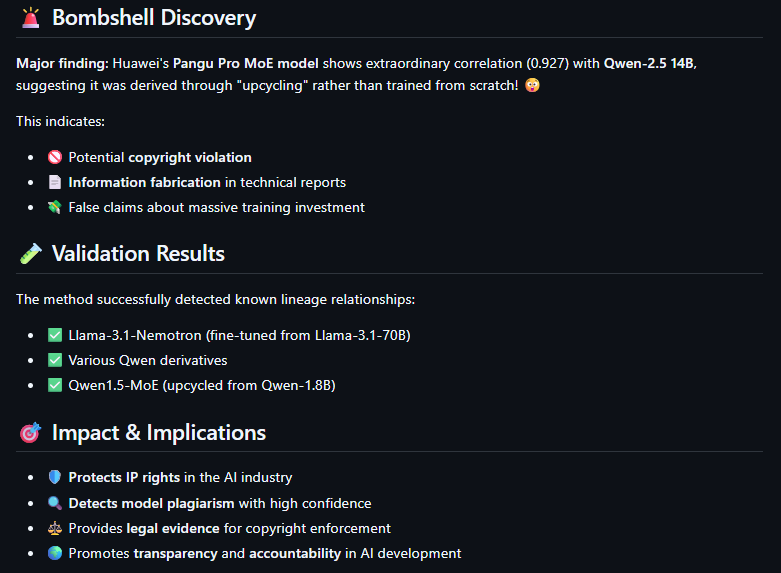

These statements respond to an analysis published on GitHub by "HonestAGI", which has since been deleted but remains accessible via the Wayback Machine. The report, titled "Intrinsic Fingerprint of LLMs: Continue Training is NOT All You Need to Steal A Model!", pointed to strong similarities between Huawei's Pangu Pro MoE and Alibaba's Tongyi Qianwen Qwen-2.5 14B, especially in the distribution of attention parameters.

The anonymous GitHub analysis argued that Huawei's model may not have been trained from scratch but instead "upcycled" from an existing model. Huawei rejects this. It acknowledges that parts of the code follow "industry-standard open-source practices," but stresses that all open-source components are properly licensed and clearly labeled.

Accusations go beyond Huawei

The accusations come at a sensitive time for Huawei, which is working to show that its Pangu models, trained on Ascend chips, can compete with global AI leaders. This matters because China is looking for alternatives to Nvidia amid growing US export restrictions.

Huawei's chips are seen as China's way around tightening US curbs on Nvidia GPUs. The impact of those restrictions is already being felt: DeepSeek's R2 model is reportedly delayed because of a lack of access to Nvidia H20 chips.

Huawei is promoting its upcoming Ascend 910C as China's top Nvidia alternative. According to chip analyst Dylan Patel, the 910C now trails the formerly US-approved Nvidia H20 by less than a year, compared to two years for the previous 910B. China still has no answer to Nvidia's Blackwell chips, although a scaled-down Blackwell version for the Chinese market is reportedly in the works.
