HumanRF brings high-resolution 3D avatars to NeRFs. Behind it is an AI startup for synthetic media.

Neural Radiance Fields (NeRFs) learn 3D representations from photos or videos and can render individual objects or entire scenes. Some variants specialize in moving scenes or objects, others experiment with editing capabilities, and others attempt to render people photorealistically. NeRFs are considered one of the AI technologies that will play an important role in 3D graphics, video conferencing, or, in the future, the metaverse.

Researchers from synthetic media AI startup Synthesia, University College London, and TU Munich now present HumanRF, a method for learning high-resolution NeRFs of humans in motion.

ActorsHQ is a 12 MP dataset of people in motion

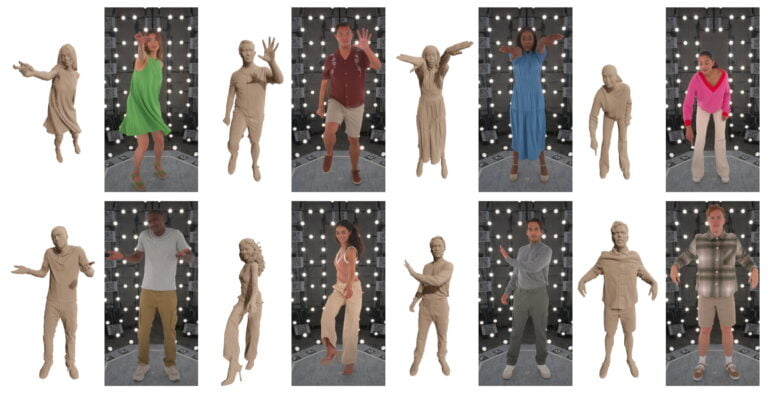

The team trained HumanRF on its own dataset, ActorsHQ, which consists of 39,765 frames of dynamic human motion captured as multi-view video. The recordings were made with a proprietary multi-camera acquisition system combined with an LED array for global illumination. The camera system consists of 160 Ximea 12 MP cameras operating at 25 frames per second, and the illumination array comprises 420 LEDs.
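To give a sense of scale, the figures for the capture rig can be turned into a rough back-of-the-envelope calculation. The sketch below assumes that each camera delivers exactly 12 million pixels per frame and that the 39,765 frames count time steps, each seen by all 160 cameras; neither assumption is stated in this article.

```python
# Figures quoted in the article
CAMERAS = 160
MEGAPIXELS_PER_IMAGE = 12
FPS = 25
TOTAL_FRAMES = 39_765

# Assumption: a "12 MP" image holds exactly 12,000,000 pixels
pixels_per_image = MEGAPIXELS_PER_IMAGE * 1_000_000

# Raw pixels produced by the whole rig for every second of recording
pixels_per_second = CAMERAS * pixels_per_image * FPS

# Assumption: TOTAL_FRAMES counts time steps, not individual camera images
duration_seconds = TOTAL_FRAMES / FPS
duration_minutes = duration_seconds / 60

print(f"{pixels_per_second:,} pixels per second of capture")
print(f"about {duration_minutes:.1f} minutes of footage in total")
```

Under these assumptions, the rig produces tens of billions of raw pixels for every second of recording, which helps explain why a method built for long, high-resolution sequences is needed.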

As a result, ActorsHQ provides much higher-resolution data than older datasets, which reach a maximum resolution of 4 MP. The dataset contains four women and four men performing 20 randomly selected motions.

HumanRF can learn long sequences of movements at high quality

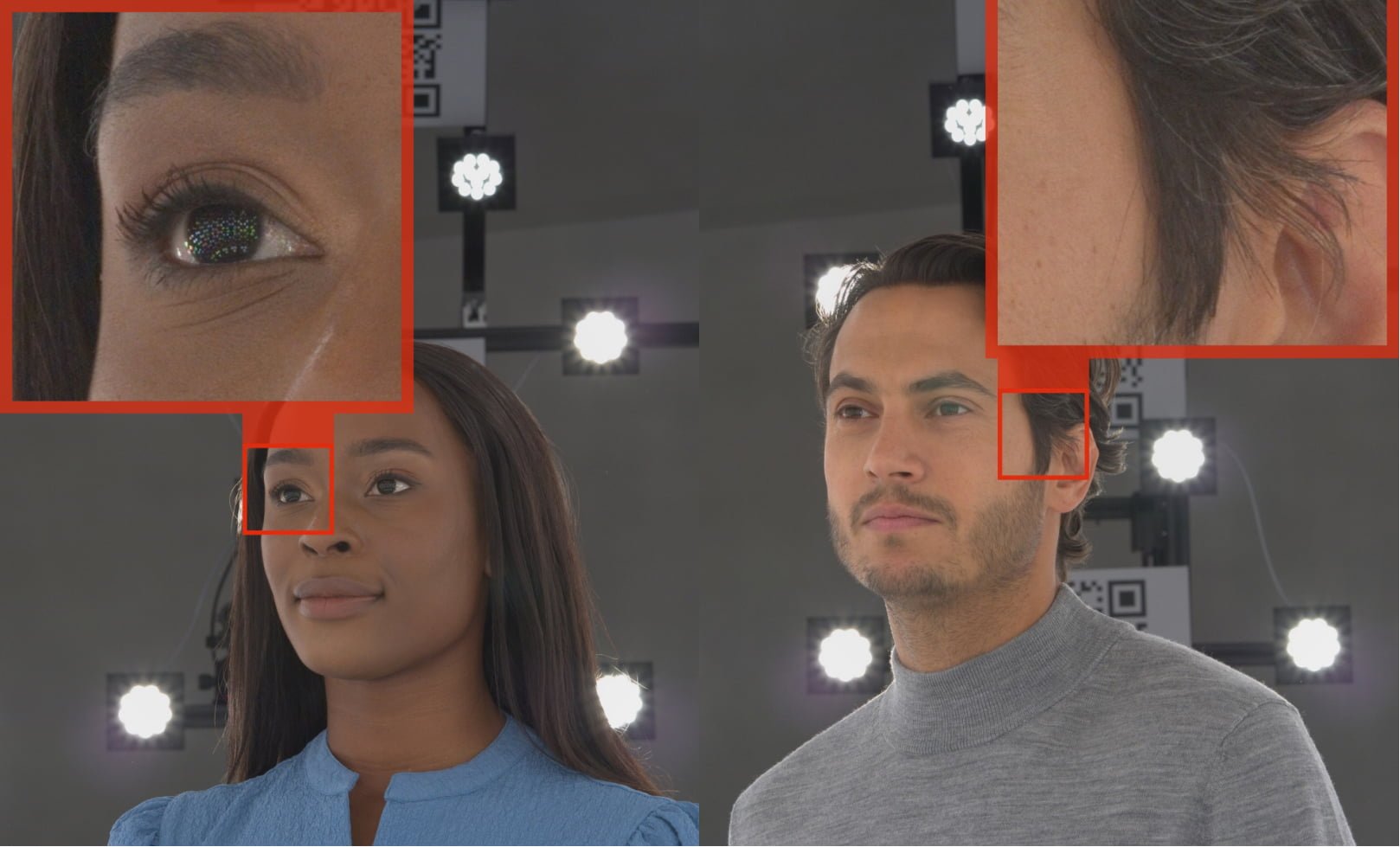

With HumanRF, the team introduces a NeRF method that takes advantage of this high-resolution data and produces temporally consistent reconstructions of human actors, even over long sequences, while preserving fine detail. The method is inspired by Nvidia's Instant-NGP but adds a time dimension to the encodings used there.
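What "adding a time dimension" to a hash encoding can mean is easiest to see in a toy example. The following is a minimal sketch, not the HumanRF implementation: it assumes a single resolution level, nearest-vertex lookup instead of interpolation, and a randomly initialized (untrained) feature table. The function names `hash_coords` and `encode` are hypothetical, and the fourth hashing constant is chosen only for this illustration.

```python
import numpy as np

# Per-dimension hashing constants. The first three follow the Instant-NGP
# paper; the fourth (used for time) is an assumption made for this sketch.
PRIMES = np.array([1, 2654435761, 805459861, 3674653429], dtype=np.uint64)


def hash_coords(idx, table_size):
    """Spatial hash: XOR of (integer coordinate * constant), modulo table size."""
    idx = idx.astype(np.uint64)
    h = np.zeros(idx.shape[:-1], dtype=np.uint64)
    for d in range(idx.shape[-1]):
        h ^= idx[..., d] * PRIMES[d]  # uint64 array arithmetic wraps on overflow
    return (h % np.uint64(table_size)).astype(np.int64)


def encode(xyzt, table, resolution):
    """Return one feature vector per (x, y, z, t) sample in [0, 1]^4.

    Each sample is snapped to its nearest grid vertex, and the vertex's
    integer coordinates (including time) are hashed into the feature table.
    """
    scaled = np.clip(xyzt, 0.0, 1.0) * (resolution - 1)
    idx = np.floor(scaled + 0.5).astype(np.int64)
    return table[hash_coords(idx, len(table))]


# Toy feature table: 2^14 entries with 2 features each (untrained, random)
rng = np.random.default_rng(0)
table = rng.normal(size=(2**14, 2)).astype(np.float32)

# The same point in space at the start and at the end of a sequence
samples = np.array([[0.5, 0.5, 0.5, 0.0],
                    [0.5, 0.5, 0.5, 1.0]])
features = encode(samples, table, resolution=16)
```

Because time is part of the hashed coordinate, the same point in space maps to different table entries at different moments, so the representation can change as the actor moves. A real system would combine several resolution levels, interpolate between neighboring vertices, and train the table jointly with a small neural network.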

The results are impressive, and the team hopes that HumanRF and the accompanying ActorsHQ dataset will enable further advances in the photorealistic reconstruction of virtual humans. In the future, the team plans to explore methods for controlling the articulation of the trained actors. This could allow Synthesia to evolve its own products from simple 2D recordings to dynamic 3D avatars.

The team plans to make the code and dataset available on the HumanRF project website. More information and examples can be found there.