Incidents of AI misuse are rapidly increasing

"The number of incidents concerning the misuse of AI is rapidly rising." This is one of the key findings of the Artificial Intelligence Index Report 2023.

The report, published by Stanford University and now in its sixth year, is considered one of the most trusted accounts of the state of artificial intelligence. This year's edition runs to 400 pages.

The authors didn't mention the word "deepfake" once in their first report in 2017, but they mention it a whopping 21 times in this year's report. They devote an entire chapter to "technical AI ethics."

The rise in reported incidents is likely evidence of both the increasing degree to which AI is becoming intermeshed in the real world and a growing awareness of the ways in which AI can be ethically misused. The dramatic increase also raises an important point: As awareness has grown, tracking of incidents and harms has also improved—suggesting that older incidents may be underreported.

AI Index Report 2023

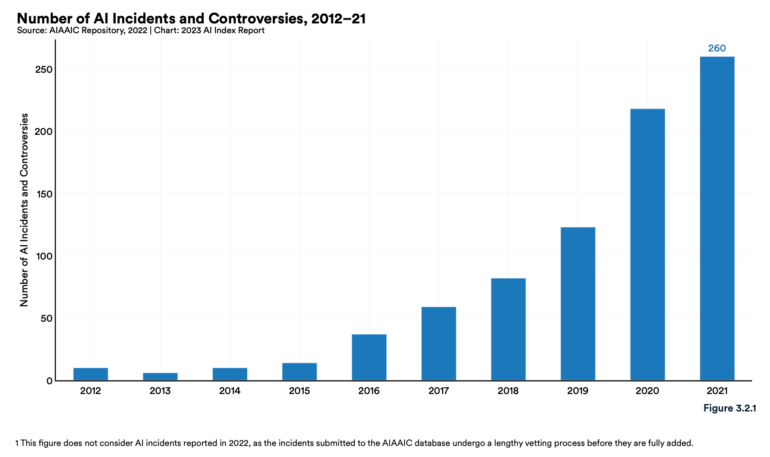

According to the independent AIAAIC database, which tracks incidents related to the misuse of AI, the number of AI incidents increased 26-fold between 2012 and 2021.

Final figures for 2022 are not yet available, as database entries are subject to a lengthy verification process. In addition, a high number of unreported cases can be expected in the first years of tracking.

The authors cite a deepfake video published in March 2022, in which Ukrainian President Volodymyr Zelenskyy allegedly calls on his troops to surrender, as an example with particularly high reach.

U.S. prisons monitor phone calls with AI

But even beyond deepfakes, recent uses of AI have prompted debate about how to deploy it ethically. For example, there have been reports of AI being used to monitor phone calls in U.S. prisons. The speech recognition was also found to produce less accurate transcripts for Black individuals, even though a large proportion of prisoners in the U.S. are Black.

Surveillance is not just about prisoners, and it is not always covert. The IT giant Intel, for example, is quite open about the fact that it has developed software for the videoconferencing tool Zoom that can read emotions such as "bored," "distracted," or "confused" from the faces of students.

Finally, Midjourney, standing in for all AI image generators including DALL-E 2 and Stable Diffusion, appears in this troubling lineup. According to the report, these systems raise ethical questions about fairness, bias, copyright, jobs, and privacy.

Left unmentioned, however, is the ability to create photorealistic images, which has recently made headlines in connection with people like Pope Francis and Donald Trump.
