Instagram CEO argues humans must override their instinct to trust what they see online

Key Points

- Instagram head Adam Mosseri predicts a fundamental shift in how people interact with digital media, because AI-generated photos and videos will soon be indistinguishable from authentic content.

- According to Mosseri, skepticism toward visual media will need to become a default mindset for users, marking a significant change in how we consume online content.

- Mosseri expects this transition to be "incredibly uncomfortable," because humans are biologically wired to trust what they see with their own eyes.

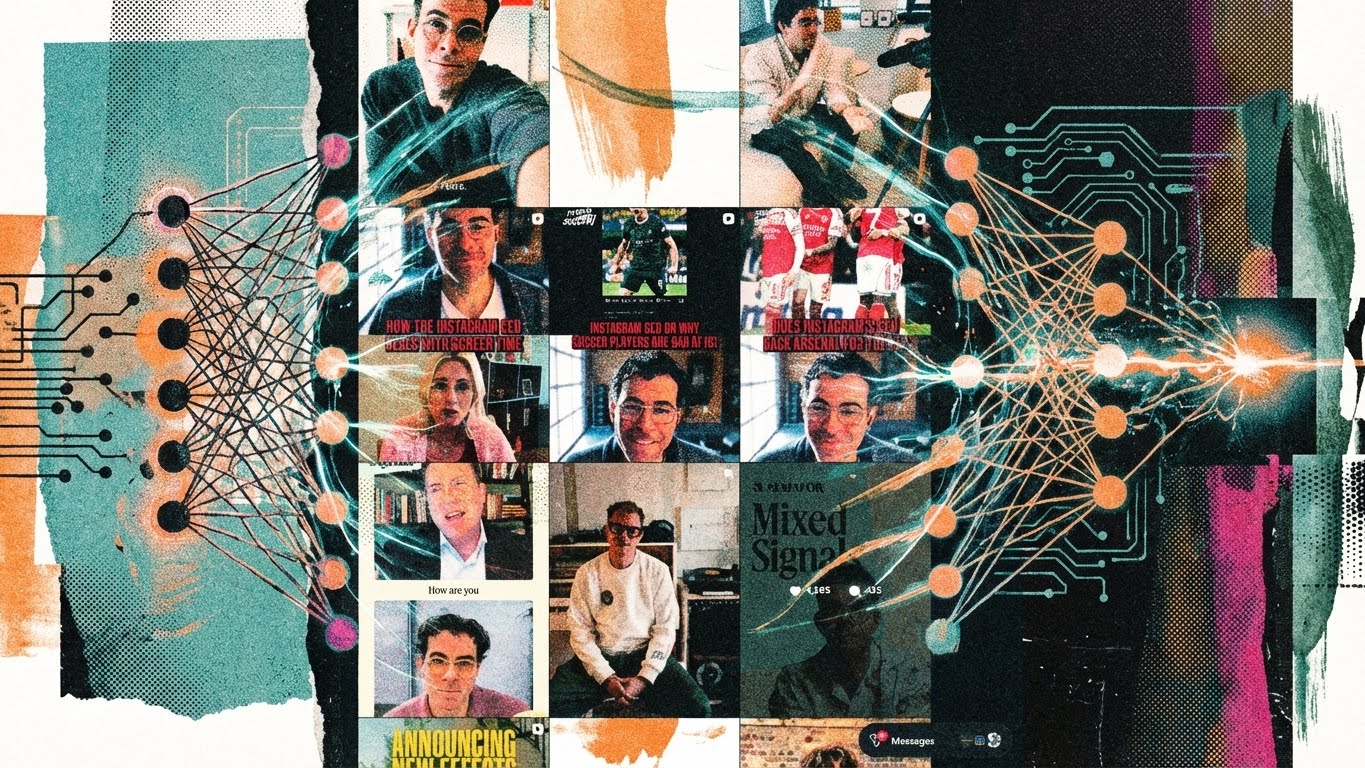

Instagram CEO Adam Mosseri lays out his vision for AI's impact on the platform, and his words echo what GAN co-inventor Ian Goodfellow predicted back in 2017.

Adam Mosseri, head of Meta's Instagram, describes a fundamental shift coming in the next few years, one that will change how people trust content online. The core problem, according to Mosseri, is that authenticity will become infinitely reproducible.

Everything that used to set creators apart (being genuine, having a distinct voice, building real connections) can now be reproduced by anyone with the right tools, he says. Deepfakes keep getting better, and AI can generate photos and videos indistinguishable from real footage. Feeds are "starting to fill up with synthetic everything," Mosseri writes.

Right now there's a lot of talk about "AI slop," Mosseri says, but impressive AI content without twisted limbs or broken physics already exists. Even this higher-quality AI content still has a recognizable look, though: images seem somehow fabricated, too smooth. According to Mosseri, that will change.

Why imperfection is becoming proof that something is real

Mosseri sees the future in imperfection. The classic Instagram feed, with its polished photos, heavy makeup, smoothed skin, and high-contrast landscape shots, is already dead, he says. What's thriving instead: stories and direct messages, where blurry photos and shaky videos get exchanged freely.

Camera manufacturers focused on the wrong aesthetic, Mosseri argues, trying to make everyone look like a professional photographer from the past: more megapixels, more processing, and portrait modes with artificial background blur. The results look good, but they are cheap to produce and boring to consume, he writes.

In a world where everything can be perfected, imperfection becomes a signal, according to Mosseri. "Rawness" isn't just an aesthetic preference but proof that something is genuine, he writes. Smart creators will lean into unpolished, unflattering images of themselves, though eventually even those will be AI-generated (editor's note: they already are).

"The bar is going to shift from 'can you create?' to 'can you make something that only you could create?' That's the new gate," Mosseri writes.

Why learning to doubt our eyes will be painful

The biggest challenge, Mosseri says, is a social rethink: people need to stop assuming that what they see is real. Skepticism has to become the default when viewing media, paired with closer attention to who is sharing something and why, he argues.

"This is going to be incredibly uncomfortable for all of us," Mosseri writes. Humans are wired to believe their eyes. He points to Malcolm Gladwell's book "Talking to Strangers," which argues that humans default to truth because the evolutionary and social benefits of efficient communication and cooperation far outweigh the occasional cost of being deceived.

The focus has to shift from what's being said to who's saying it, Mosseri writes. Photos and videos used to be reliable records of real moments. That's no longer true, and adapting will take years, he predicts.

Mosseri's take isn't new. Back in 2017, AI researcher Ian Goodfellow, then at Google and co-inventor of Generative Adversarial Networks (GANs), warned of a future flooded with fake multimedia.

Goodfellow called it "a little bit of a fluke, historically" that humanity could rely on video for decades to verify something actually happened. That era is ending, he predicted. What Mosseri is describing now is confirmation of that eight-year-old prediction.

How Instagram plans to verify authentic content

Mosseri sees a tough road ahead for platforms. Social media services will face pressure to identify and label AI content, he writes. Major platforms will do a decent job at first but will fall behind as AI improves, according to Mosseri.

A more practical approach, he argues, is to fingerprint genuine media rather than chase down fakes. Camera manufacturers could cryptographically sign images at the moment of capture, creating a chain of custody, Mosseri suggests. But labeling content as authentic or AI-generated is only part of the solution, he adds.
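Mosseri doesn't describe a specific mechanism, but the sign-at-capture idea can be illustrated with a minimal Python sketch under loudly stated assumptions. Every function name and the `DEVICE_KEY` value are hypothetical, and HMAC-SHA256 from the standard library stands in for the public-key signature a real camera would presumably use, so in this sketch the verifier needs the same secret. Each record stores a hash of the image plus the signature of the previous record, so altering any step, or the final pixels, breaks verification.

```python
import hashlib
import hmac
import json

# Hypothetical device secret. A real camera would more likely hold a private
# key for a public-key scheme; HMAC is used here only as a stdlib stand-in.
DEVICE_KEY = b"hypothetical-device-secret"


def _digest(data: bytes) -> str:
    """SHA-256 fingerprint of the image bytes."""
    return hashlib.sha256(data).hexdigest()


def sign_record(record: dict, key: bytes = DEVICE_KEY) -> str:
    """Sign a canonical JSON encoding of a custody record."""
    payload = json.dumps(record, sort_keys=True).encode()
    return hmac.new(key, payload, hashlib.sha256).hexdigest()


def capture(image: bytes) -> list:
    """Start a custody chain with a signed record of the captured pixels."""
    record = {"action": "capture", "image_hash": _digest(image), "prev": None}
    return [{"record": record, "sig": sign_record(record)}]


def append_edit(chain: list, action: str, new_image: bytes) -> list:
    """Add a signed record for an edit, linked to the previous signature.

    For simplicity every step is signed with the same hypothetical key.
    """
    record = {
        "action": action,
        "image_hash": _digest(new_image),
        "prev": chain[-1]["sig"],
    }
    return chain + [{"record": record, "sig": sign_record(record)}]


def verify(chain: list, image: bytes, key: bytes = DEVICE_KEY) -> bool:
    """Check every link and signature, then match the final image hash."""
    if not chain:
        return False
    prev = None
    for entry in chain:
        rec = entry["record"]
        if rec["prev"] != prev:  # broken link between records
            return False
        if not hmac.compare_digest(entry["sig"], sign_record(rec, key)):
            return False  # record altered after signing
        prev = entry["sig"]
    return chain[-1]["record"]["image_hash"] == _digest(image)


if __name__ == "__main__":
    original = b"raw sensor bytes"
    chain = append_edit(capture(original), "crop", original[:8])
    print(verify(chain, original[:8]))  # chain matches the cropped bytes
    print(verify(chain, b"synthetic"))  # different pixels fail the check
```

A crop appended with `append_edit` keeps the chain valid for the cropped bytes, while checking the same chain against different bytes returns `False`. With real public-key signatures, anyone could verify a chain using only the device's public key, which is what would make such a scheme useful to platforms.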

Mosseri outlines Instagram's roadmap: build the best creative tools, label AI-generated content, verify authentic content, display credibility signals about accounts, and improve ranking for originality.

AI News Without the Hype – Curated by Humans

As a THE DECODER subscriber, you get ad-free reading, our weekly AI newsletter, the exclusive "AI Radar" Frontier Report 6× per year, access to comments, and our complete archive.
