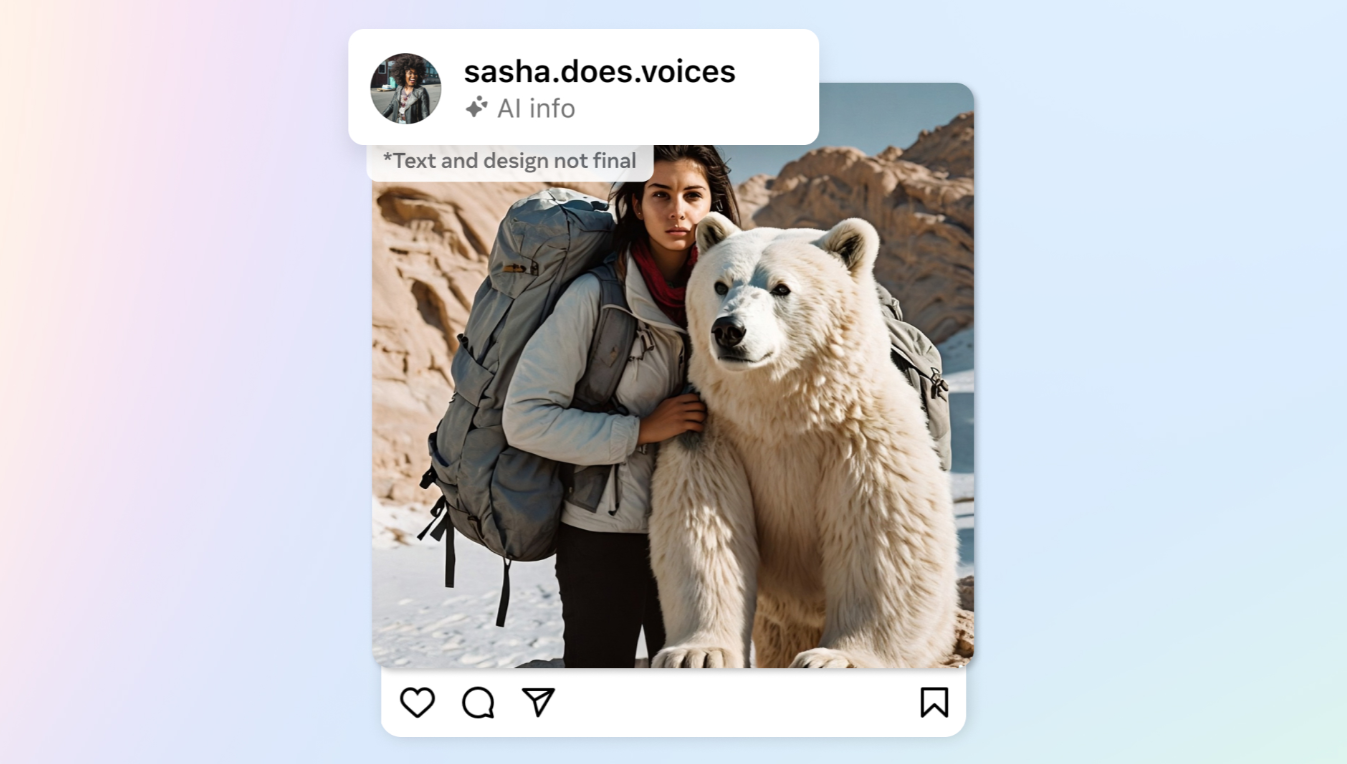

Meta is rolling out "Imagined with AI" labels for AI-generated images posted to Facebook, Instagram, and Threads.

The company aims to make AI-generated content, including video and audio, more transparent through visible labels, invisible watermarks, and metadata embedded in the files themselves.

Meta is working with industry partners, including Google, OpenAI, Microsoft, Adobe, Midjourney, and Shutterstock, on common technical standards for marking and identifying AI-generated content, such as C2PA and IPTC metadata.
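As a rough illustration of metadata-based tagging, the sketch below checks an image's XMP packet for the IPTC `DigitalSourceType` property set to IPTC's `trainedAlgorithmicMedia` term, which IPTC defines for media created by a trained AI model. It is a minimal sketch under stated assumptions: the XMP packet has already been extracted from the image file (not shown), the helper `is_marked_ai_generated` and the sample packets are hypothetical, and nothing here reflects how Meta actually reads these fields.

```python
import xml.etree.ElementTree as ET

# XML namespaces used in XMP packets (RDF and the IPTC Extension schema).
RDF_NS = "http://www.w3.org/1999/02/22-rdf-syntax-ns#"
IPTC_EXT_NS = "http://iptc.org/std/Iptc4xmpExt/2008-02-29/"

# IPTC Digital Source Type term for content generated by a trained AI model.
AI_SOURCE_TYPE = "http://cv.iptc.org/newscodes/digitalsourcetype/trainedAlgorithmicMedia"


def is_marked_ai_generated(xmp_packet: str) -> bool:
    """Hypothetical helper: True if the XMP declares an AI-generated source type."""
    root = ET.fromstring(xmp_packet)
    prop = f"{{{IPTC_EXT_NS}}}DigitalSourceType"
    for desc in root.iter(f"{{{RDF_NS}}}Description"):
        # The property may be written as an attribute of rdf:Description...
        if desc.get(prop) == AI_SOURCE_TYPE:
            return True
        # ...or as a child element.
        for el in desc.iter(prop):
            if (el.text or "").strip() == AI_SOURCE_TYPE:
                return True
    return False


# Hypothetical sample packet carrying the AI source type as an attribute.
SAMPLE_XMP = f"""<x:xmpmeta xmlns:x="adobe:ns:meta/">
  <rdf:RDF xmlns:rdf="{RDF_NS}">
    <rdf:Description rdf:about=""
        xmlns:Iptc4xmpExt="{IPTC_EXT_NS}"
        Iptc4xmpExt:DigitalSourceType="{AI_SOURCE_TYPE}"/>
  </rdf:RDF>
</x:xmpmeta>"""

# The same packet with the property deleted, as anyone editing the file could do.
STRIPPED_XMP = SAMPLE_XMP.replace(f'Iptc4xmpExt:DigitalSourceType="{AI_SOURCE_TYPE}"', "")

print(is_marked_ai_generated(SAMPLE_XMP))    # True
print(is_marked_ai_generated(STRIPPED_XMP))  # False
```

The second call shows why metadata alone is fragile: deleting a single attribute is enough to defeat the check.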

Photorealistic images created with Meta's own Meta AI feature will also carry the visible "Imagined with AI" label, an invisible watermark, and the corresponding metadata.

Meta says it will begin applying these labels "in the coming months," in all languages supported by each app.

Meta calls this the best approach that is technically feasible today, but acknowledges that it cannot identify all AI-generated content and that people can strip out invisible markers.

The company is developing more advanced classifiers to detect AI-generated content automatically. It is also exploring watermarking techniques such as Stable Signature, which builds the watermark directly into the image generation process so that it is harder to disable.

Until detection technology for video and audio is standardized and mature, Meta will also require users to disclose when they post organic content containing photorealistic video or realistic-sounding audio that was digitally created or altered, and it will provide a tool for doing so. Users who fail to disclose may face penalties. Images, video, or audio that pose a "particularly high risk" may receive a more prominent label.

Meta tests LLMs for content moderation

Meta is also testing large language models (LLMs) to help enforce its Community Standards and identify content that violates its policies. According to Meta, initial tests indicate that LLMs can outperform its existing machine-learning models at this task.

The company also uses LLMs to remove content from human reviewers' queues when it is highly confident that the content does not violate its policies. This frees reviewers to focus on content that is more likely to be violating.
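The queue-filtering step can be pictured as a simple confidence threshold. The sketch below is hypothetical: Meta has not published how its LLMs score content, so it assumes each item already carries a model-estimated probability of violating policy (`p_violation`). The `Item` class, the `triage` function, the 0.02 cutoff, and the reviewer ordering are all illustrative assumptions, not Meta's values.

```python
from dataclasses import dataclass


@dataclass
class Item:
    item_id: str
    p_violation: float  # assumed model-estimated probability that the item violates policy


def triage(items: list[Item], clear_threshold: float = 0.02) -> tuple[list[Item], list[Item]]:
    """Hypothetical triage: drop confidently non-violating items from human review."""
    auto_cleared: list[Item] = []
    review_queue: list[Item] = []
    for item in items:
        if item.p_violation <= clear_threshold:
            auto_cleared.append(item)  # model is highly confident there is no violation
        else:
            review_queue.append(item)
    # Assumption: show reviewers the most likely violations first.
    review_queue.sort(key=lambda i: i.p_violation, reverse=True)
    return auto_cleared, review_queue


cleared, queue = triage([Item("a", 0.01), Item("b", 0.90), Item("c", 0.30)])
print([i.item_id for i in cleared])  # ['a']
print([i.item_id for i in queue])    # ['b', 'c']
```

Raising `clear_threshold` shrinks the human queue further but lets more borderline content skip review, so a real system would presumably tune it against the cost of missed violations.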

OpenAI is also testing the extent to which language models can partially automate content moderation.