OpenAI demonstrates that GPT-4 can evaluate social media posts according to a content policy. The system is supposed to be significantly faster and more flexible than human moderators.

To establish GPT-4 as a reliable moderation system, OpenAI first aligns the model with human experts: human content policy experts review and rate the content to be moderated.

Then GPT-4, prompted with the content policy, performs the same evaluation. The model is then confronted with the human expert's evaluation and must explain any deviation from it.

Based on this explanation, the content policy can then be adjusted so that the model achieves the same rating as the human in future moderation cases. When the human and GPT-4 scores are in reliable alignment, the model can be used in practice.
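The comparison loop described above can be sketched roughly as follows. This is a minimal illustration, not OpenAI's implementation: `find_disagreements`, the `Judge` interface, and the keyword-based `toy_judge` are hypothetical names, and the toy judge merely stands in for a real call to a model prompted with the policy.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence, Tuple

# Hypothetical interface: (policy, post) -> (label, explanation).
# A real system would wrap a call to a policy-prompted language model here.
Judge = Callable[[str, str], Tuple[str, str]]


@dataclass
class Disagreement:
    post: str
    expert_label: str
    model_label: str
    explanation: str  # the model's stated reason for its label


def find_disagreements(
    policy: str,
    examples: Sequence[Tuple[str, str]],
    judge: Judge,
) -> List[Disagreement]:
    """Return every example where the model's label differs from the expert's."""
    found = []
    for post, expert_label in examples:
        model_label, explanation = judge(policy, post)
        if model_label != expert_label:
            found.append(Disagreement(post, expert_label, model_label, explanation))
    return found


def toy_judge(policy: str, post: str) -> Tuple[str, str]:
    """Toy stand-in for the model: the 'policy' is a comma-separated term list."""
    terms = [t.strip().lower() for t in policy.split(",") if t.strip()]
    hits = [t for t in terms if t in post.lower()]
    if hits:
        return "violation", f"post mentions policy term(s): {', '.join(hits)}"
    return "allowed", "no policy term found in post"


# Made-up posts with made-up expert labels, for illustration only.
EXAMPLES = [
    ("How do I buy a weapon?", "violation"),
    ("Where can I get a gun cheaply?", "violation"),
    ("Nice weather today", "allowed"),
]

if __name__ == "__main__":
    # First pass: the draft policy misses "gun", so one example disagrees.
    for d in find_disagreements("weapon, drugs", EXAMPLES, toy_judge):
        print(d.post, "->", d.model_label, "|", d.explanation)
    # After the policy is clarified, no disagreements remain.
    print(len(find_disagreements("weapon, gun, drugs", EXAMPLES, toy_judge)))
```

The explanations attached to each disagreement are what a policy expert would read before rewording the policy; the loop is then rerun until the remaining disagreements are acceptably few.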

Video: OpenAI

Moderation adapting to policy changes in hours instead of months

According to OpenAI, the moderation system continuously improves as content policies are refined and clarified. The much faster adoption of policy changes can save a lot of time: the model can implement a policy change in a matter of hours, whereas human moderators must be retrained, a process that can take months.

To keep the computational effort manageable, OpenAI relies on a smaller model that, after being fine-tuned with the predictions of the larger model, takes over the execution of moderation tasks.
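One way to picture this step is to collect the larger model's labels as training examples for the smaller one. The sketch below is an assumption about how such a dataset could be assembled, not a description of OpenAI's pipeline: `build_distillation_records`, `to_jsonl`, and the `teacher` callable are hypothetical, and the chat-style record layout is simply one common format for fine-tuning data.

```python
import json
from typing import Callable, Iterable, List

# Hypothetical interface: (policy, post) -> label, as produced by the larger model.
Teacher = Callable[[str, str], str]


def build_distillation_records(
    policy: str,
    posts: Iterable[str],
    teacher: Teacher,
) -> List[dict]:
    """Pair each post with the larger model's label as a training example."""
    records = []
    for post in posts:
        label = teacher(policy, post)
        records.append(
            {
                "messages": [
                    {"role": "system", "content": policy},
                    {"role": "user", "content": post},
                    {"role": "assistant", "content": label},
                ]
            }
        )
    return records


def to_jsonl(records: List[dict]) -> str:
    """Serialize the records as one JSON object per line."""
    return "\n".join(json.dumps(r, ensure_ascii=False) for r in records)
```

The resulting file would then serve as input to whatever fine-tuning procedure the smaller model supports; the quality of the smaller model is bounded by the quality of the labels the larger one produced.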

Using AI to moderate content is not new. Meta, for example, has been using machine learning for many years to identify and remove problematic content as quickly as possible.

However, these systems are specialized and not always reliable. Large language models such as GPT-4 have the potential to make more sophisticated and informed judgments across many categories, and perhaps even to respond to posts themselves, or at least to draft responses that a human need only approve. A recent study showed that ChatGPT can describe emotional scenarios much more accurately and comprehensively than the average human, demonstrating a higher level of emotional awareness.

According to OpenAI, GPT-4 could therefore help to "relieve the mental burden of a large number of human moderators." OpenAI describes a scenario in which this workforce could then focus on "complex edge cases", but of course, these people might just lose their jobs.

OpenAI aims to tackle constitutional AI

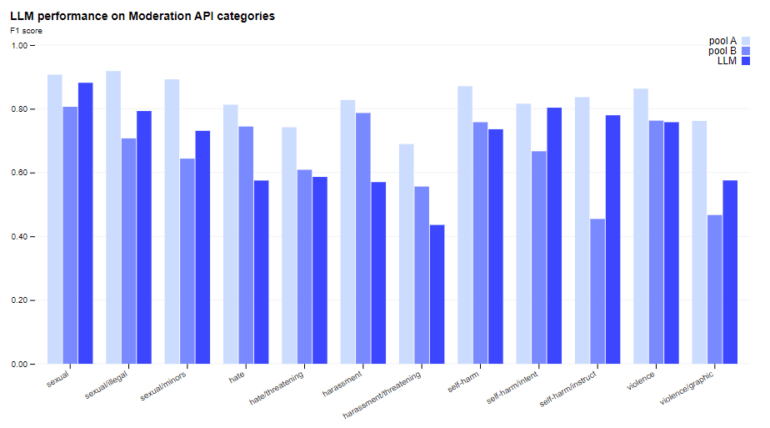

According to OpenAI, the language model achieves moderation results comparable to those of lightly trained humans. Well-trained human moderators outperform GPT-4 in all areas tested, although the gap is not large in many cases. OpenAI also sees further room for improvement through chain-of-thought prompting and the integration of self-criticism.

OpenAI is also investigating how to identify unknown risks that don't appear in the examples or the policy, and in this context intends to look at constitutional AI, which identifies risks using high-level descriptions. This may be a reference to competitor Anthropic, which relies less on human feedback for its AI models than OpenAI does and instead trains them with a constitution combined with AI feedback on generated content.

Video: OpenAI

OpenAI points out the usual risks of using AI: The models contain social biases that could be reflected in their outcomes. In addition, humans would need to monitor the AI moderation system, the company writes.

According to OpenAI, the proposed method of using GPT-4 for moderation can be replicated by anyone with access to its API.