Meta's AITemplate delivers speedup for models like Stable Diffusion

Meta's AITemplate can execute code from the PyTorch AI framework up to twelve times faster. Among others, image AI systems like Stable Diffusion benefit significantly.

Meta's AITemplate (AIT) is a unified inference system with separate acceleration backends for AMD and Nvidia GPUs. It can perform high-performance inference on both GPU vendors' hardware - without the need for an entirely new implementation of the AI model that would otherwise be required when switching vendors.

Meta is making AITemplate available as open source and promises near hardware-native Tensor Core (Nvidia) and Matrix Core (AMD) performance for a variety of common AI models such as CNNs, Transformers, and Diffusion models.

AITemplate is up to twelve times faster, according to Meta

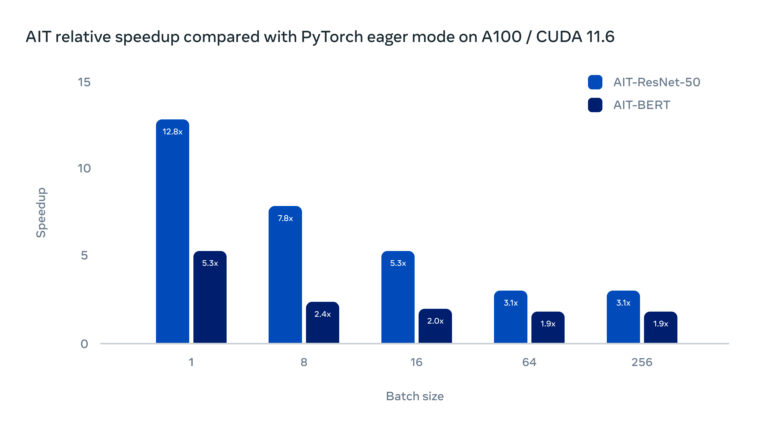

AITemplate is a Python framework that converts AI models into high-performance C++ GPU template code, which speeds up inference. According to Meta, AITemplate can speed up AI inference by up to 12x on Nvidia GPUs and up to 4x on AMD GPUs compared to eager mode in PyTorch. In eager mode, each operation is executed immediately when it is called, rather than being compiled into an optimized program first. PyTorch uses eager execution by default.
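To make the contrast between eager execution and ahead-of-time code generation concrete, here is a minimal pure-Python sketch. It uses neither PyTorch nor AITemplate: the names `eager_add_mul`, `Node`, `emit`, and `compile_graph` are hypothetical and exist only for illustration. The eager function runs each operation as it is reached, while the graph version first records the operations and then generates one piece of code from them.

```python
from dataclasses import dataclass
from typing import Callable, List

trace: List[str] = []  # records the moment each eager operation runs


def eager_add_mul(x: float, y: float, z: float) -> float:
    """Eager style: each operation runs as soon as its line is reached."""
    s = x + y
    trace.append("add")
    p = s * z
    trace.append("mul")
    return p


@dataclass(frozen=True)
class Node:
    """Hypothetical graph node: records an operation instead of running it."""
    op: str      # "input", "add", or "mul"
    args: tuple  # (name,) for an input, otherwise two child Nodes

    def __add__(self, other: "Node") -> "Node":
        return Node("add", (self, other))

    def __mul__(self, other: "Node") -> "Node":
        return Node("mul", (self, other))


def emit(node: Node) -> str:
    """Generate source text for the recorded graph."""
    if node.op == "input":
        return node.args[0]
    left, right = emit(node.args[0]), emit(node.args[1])
    symbol = "+" if node.op == "add" else "*"
    return f"({left} {symbol} {right})"


def compile_graph(root: Node) -> Callable[[float, float, float], float]:
    """Build one callable from the generated source, before any data arrives."""
    # Assumption for this sketch: the graph always uses the inputs x, y, z.
    return eval(f"lambda x, y, z: {emit(root)}")


x, y, z = (Node("input", (name,)) for name in ("x", "y", "z"))
graph = (x + y) * z              # nothing is computed here, only recorded
compiled = compile_graph(graph)  # code generation happens once, up front
```

Because the whole computation is known before it runs, a compiler working on such a graph can optimize across operation boundaries, which is not possible when each call is dispatched on its own.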

The framework offers numerous performance innovations, according to Meta, including advanced kernel fusion, an optimization method that combines multiple kernels into a single kernel to run more efficiently, and advanced optimizations for transformer blocks.
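The idea behind kernel fusion can be sketched in plain Python. This is a conceptual illustration only, not AITemplate's implementation: the functions `scale`, `shift`, `relu`, `unfused`, and `fused` are hypothetical stand-ins for GPU kernels, and Python lists stand in for GPU memory buffers.

```python
from typing import List


def scale(xs: List[float], a: float) -> List[float]:
    """Stand-in for kernel 1: reads every element, writes a new buffer."""
    return [a * x for x in xs]


def shift(xs: List[float], b: float) -> List[float]:
    """Stand-in for kernel 2: reads every element, writes a new buffer."""
    return [x + b for x in xs]


def relu(xs: List[float]) -> List[float]:
    """Stand-in for kernel 3: reads every element, writes a new buffer."""
    return [max(0.0, x) for x in xs]


def unfused(xs: List[float], a: float, b: float) -> List[float]:
    """Three separate passes, with two intermediate buffers in between."""
    return relu(shift(scale(xs, a), b))


def fused(xs: List[float], a: float, b: float) -> List[float]:
    """One pass: each element is read once and no intermediate buffer is stored."""
    return [max(0.0, a * x + b) for x in xs]


data = [-2.0, -0.5, 0.0, 1.5]
result_unfused = unfused(data, 2.0, 1.0)
result_fused = fused(data, 2.0, 1.0)
```

Both versions compute the same values. On a GPU, the fused form would save kernel launches and round trips to memory, which is where the efficiency gain described above comes from.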

Meta's open-source framework can accelerate Stable Diffusion

Meta also delivers commonly used models out of the box with AITemplate, including Vision Transformer, BERT, Stable Diffusion, ResNet, and MaskRCNN. The generative image AI system Stable Diffusion (SD) runs about 2.4 times faster with AIT on an Nvidia GPU. In a test on an RTX 3080, AIT also made it possible to avoid an out-of-memory error even with high SD settings.

In practice, Meta's AIT can thus accelerate image generation and processing with Stable Diffusion or enable higher resolutions, for example. The implementation of AIT in common solutions like Stable Diffusion WebUI is probably only a matter of time.

According to Meta, the release of AITemplate is also just the beginning of a long series of planned releases on the road to building a powerful AI inference engine. Further optimizations are planned, as well as expansion to other hardware systems such as Apple M-series GPUs and CPUs from other companies.

Meta's AITemplate is available on GitHub.
