- Meta releases DINOv2 as open source under the Apache 2.0 license.

- Meta also introduces FACET (FAirness in Computer Vision EvaluaTion), a benchmark for bias in computer vision models.

Update, August 31, 2023:

Meta releases its computer vision model DINOv2 under the Apache 2.0 license to give developers and researchers more flexibility for downstream tasks. Meta also releases a collection of DINOv2-based dense prediction models for semantic image segmentation and monocular depth estimation.

Meta also introduces FACET, a benchmark for evaluating the fairness of computer vision models in tasks such as classification and segmentation. The dataset includes 32,000 images of 50,000 people, with demographic attributes such as perceived gender and age group, in addition to physical features.

FACET is intended to become a standard benchmark for evaluating the fairness of computer vision models and to encourage the design and development of models that take more people into account.

Original article from April 18, 2023:

Metas DINOv2 is a foundation model for computer vision. The company shows its strengths and wants to combine DINOv2 with large language models.

In May 2021, AI researchers at Meta presented DINO (Self-Distillation with no labels), a self-supervised trained AI model for image tasks such as classification or segmentation. With DINOv2, Meta is now releasing a significantly improved version.

Like DINO, DINOv2 is a computer vision model trained using self-supervised learning, and according to Meta, it performs as well as or better than most of today's specialized systems on all tasks benchmarked. Due to self-supervised learning, no labeled data is required, and the DINO models can be trained on large unlabeled image datasets.

Video: Meta

DINOv2 is a building block for all computer vision tasks

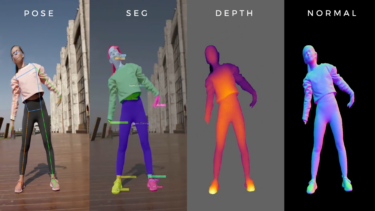

"DINOv2 provides high-performance features that can be directly used as inputs for simple linear classifiers," says Meta. This makes DINOv2 a flexible and versatile tool for a wide range of computer vision tasks, from image-level visual tasks (image classification, instance retrieval, video understanding) to pixel-level visual tasks (depth estimation, semantic segmentation).

Video: Meta

According to Meta, DINOv2 models could be useful for a variety of applications, including forest mapping with the World Resources Institute, estimating animal density and abundance, and biological research such as cell microscopy.

For training, Meta collected 1.2 billion images and filtered and balanced the dataset. In the end, DINOv2 was trained with 142 million images. Like its predecessor, DINOv2 relies on Vision Transformers.

Meta wants to link DINOv2 with large language models

DINOv2 complements Meta's work in computer vision, in particular "Segment Anything", a recently released model for zero-shot image segmentation with prompt capabilities. Meta sees DINOv2 as a building block that can be linked to other classifiers for use in many areas beyond segmentation.

The company is releasing the code and some models of the DINOv2 family. The company now plans to integrate DINOv2 into a more complex AI system that can interact with large language models. "A visual backbone providing rich information on images will allow complex AI systems to reason on images in a deeper way than describing them with a single text sentence."

Models like CLIP, which would be trained with image-text pairs, would ultimately be limited by the captions, he said. "With DINOv2, there is no such built-in limitation."

More information is available on the project page. There are also demos for DINOv2. Code and checkpoints are available on Github.