With Segment Anything, Meta releases a powerful AI model for image segmentation that can serve as a central building block for future AI applications.

Meta's Segment Anything Model (SAM) has been trained on nearly 11 million images from around the world and more than a billion semi-automatically generated segmentation masks. The goal was to develop a "foundation model" for image segmentation, and Meta says it has succeeded. Such foundation models are trained on large amounts of data, achieving generalized capabilities that allow them to be used in many specialized use cases with little or no additional training. The success of large pre-trained language models such as GPT-3 sparked the trend toward such models.

Video: Meta

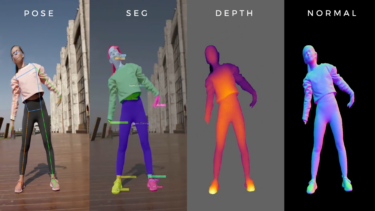

Once trained, SAM can segment previously unknown objects in any image and can be controlled by various inputs: it can automatically scan the entire image, or users can mark areas to be segmented or click on specific objects. SAM is also supposed to handle text prompts, since Meta integrates a CLIP model into its architecture in addition to the Vision Transformer, which initially processes the image.
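
These input modes can be pictured as different kinds of prompts handed to the model. The following is a minimal Python sketch of that idea, not Meta's code: the names `PointPrompt`, `BoxPrompt`, and `grid_prompts` are hypothetical, and the default of 32 points per side is an assumption chosen for illustration. It shows how "scanning the entire image" can be reduced to the click case by generating an evenly spaced grid of point prompts.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class PointPrompt:
    """A single click, in pixel coordinates (hypothetical type for illustration)."""
    x: float
    y: float
    is_foreground: bool = True


@dataclass(frozen=True)
class BoxPrompt:
    """A marked rectangular area, in pixel coordinates (hypothetical type)."""
    x0: float
    y0: float
    x1: float
    y1: float


def grid_prompts(width: int, height: int, points_per_side: int = 32) -> List[PointPrompt]:
    """Return evenly spaced point prompts that cover the whole image.

    Each point sits at the center of one cell of a
    points_per_side x points_per_side grid, listed row by row.
    The default of 32 is an assumption made for this sketch.
    """
    if points_per_side < 1:
        raise ValueError("points_per_side must be at least 1")
    step = 1.0 / points_per_side
    offsets = [(i + 0.5) * step for i in range(points_per_side)]
    return [
        PointPrompt(ox * width, oy * height)
        for oy in offsets
        for ox in offsets
    ]


if __name__ == "__main__":
    # A user click and a user-drawn box would be passed to the model as-is.
    click = PointPrompt(x=120.0, y=200.0)
    box = BoxPrompt(x0=50.0, y0=40.0, x1=300.0, y1=260.0)

    # "Segment everything" becomes many automatic clicks.
    auto = grid_prompts(640, 480, points_per_side=4)
    print(len(auto))  # 16
    print(auto[0])
```

In a real pipeline, each prompt would be passed to the segmentation model together with the image embedding, and overlapping masks from the grid would then be filtered and deduplicated. That step is omitted here.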

Nvidia researcher Jim Fan calls SAM the "GPT-3 moment" in computer vision.

Reading @MetaAI's Segment-Anything, and I believe today is one of the "GPT-3 moments" in computer vision. It has learned the *general* concept of what an "object" is, even for unknown objects, unfamiliar scenes (e.g. underwater & cell microscopy), and ambiguous cases.

- Jim Fan (@DrJimFan) April 5, 2023

Meta's SAM for everything and the XR future

Meta sees many applications for SAM, such as serving as part of multimodal AI systems that understand both visual and text content on web pages, or segmenting small organic structures in microscopy images.

In the XR domain, SAM could automatically segment objects in the field of view of a person wearing an XR headset, and selected objects could then be converted into 3D objects by models such as Meta's MCC.

"SAM could also be used to aid scientific study of natural occurrences on Earth or even in space, for example, by localizing animals or objects to study and track in video. We believe the possibilities are broad, and we are excited by the many potential use cases we haven’t even imagined yet."

- Meta

In the accompanying paper, the authors compare SAM to CLIP: they say that SAM, like OpenAI's multimodal model, is explicitly designed to serve as a building block in larger AI models, enabling numerous applications.

Segment Anything dataset and demo available

In one respect, Fan's GPT-3 comparison falls short: unlike OpenAI's language model, Meta's SAM is open source. In addition to the model, Meta is also releasing SA-1B, the dataset used to train it.

It contains six times more images than previously available datasets and 400 times more segmentation masks. The data was collected in a human-machine collaboration in which SAM iteratively generated better and better segmentations from human-generated training data, which were then repeatedly corrected by humans.