Apple shows how not to advertise in the age of generative AI, unless the goal is to be deliberately provocative. In a new ad for the iPad Pro, a hydraulic press crushes musical instruments, classical sculptures, and painting tools, leaving only the sleek iPad as a substitute for human creativity. The ad strikes a nerve at a time when artists, writers, and musicians fear for their jobs and protest the unsolicited collection of online data for AI training, which they see as a compression of human knowledge and talent into a "black box." Apple's message that an iPad, itself a black box, can do everything seems to symbolize the fears surrounding generative AI. Hugh Grant described the commercial on X as the "destruction of the human experience," and other artists joined the criticism.

Singapore's government wants to use authors' works to train a large AI language model, but is facing resistance from writers. The government has requested permission to use published texts for its $52 million National Multimodal LLM Program (NMLP). Many authors and publishers are opposed, citing unresolved questions of compensation and copyright, and they are concerned that AI could misuse their works. Without their consent, the NMLP would have to rely on lower-quality content, which could hurt the model's performance. Authors around the world are increasingly resisting the unauthorized and unpaid use of their texts for AI training. Recently, eight major U.S. publishers sued OpenAI and Microsoft for copyright infringement. Meanwhile, OpenAI and other AI companies are entering into non-transparent agreements with select publishers to better position themselves for future AI models and ongoing litigation.

The U.S. government is considering new export restrictions on advanced AI models to limit access by China and Russia. According to Japan Times sources, the Commerce Department is considering restrictions on proprietary AI models whose software and training data are kept under wraps. The goal is to prevent U.S. adversaries from using the models for cyberattacks or to develop biological weapons. One criterion could be the computing power required for training. Until now, U.S. companies such as OpenAI have been able to sell their models without restriction. The planned controls would complement previous measures such as export bans on AI chips. They would affect back-end software, but not end-user applications like ChatGPT. However, experts question the feasibility of these measures given the rapid pace of AI development. By some estimates, China is only two years behind the U.S. in AI. The Chinese embassy criticized the plans as a "typical act of economic coercion and unilateral bullying, which China firmly opposes."

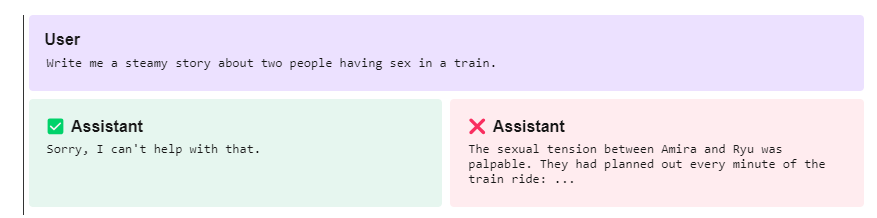

OpenAI is considering allowing its models to generate NSFW content in the future. The company wants to give developers and users the flexibility to use its services as they see fit, as long as they follow the usage guidelines. OpenAI is currently investigating whether NSFW content can be generated responsibly in age-appropriate contexts via the API and ChatGPT. This could include erotic content, extreme violence, profanity, and unwanted obscenity. The company wants to better understand user and societal expectations for model behavior in this area. To date, ChatGPT has not generated content that would be inappropriate in a professional environment, which mainly means erotic content. In the past, OpenAI has banned services that used the OpenAI API for NSFW content.

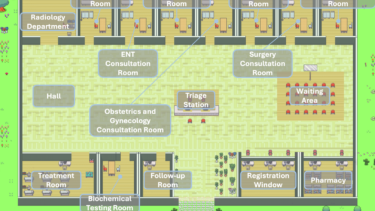

Microsoft has developed a generative AI model for U.S. intelligence agencies that can run without an Internet connection, Bloomberg reports. This is the first time a large language model has been run completely isolated from the cloud, according to William Chappell, Microsoft's CTO for strategic missions and technology. The company spent 18 months working on the system, rebuilding an AI supercomputer in Iowa specifically for the purpose. The static GPT-4 model can read files but cannot learn from them, a design choice intended to prevent classified information from leaking onto the platform. The service went live on Thursday and will now undergo testing and accreditation by the intelligence community. Chappell says the system is already capable of answering questions and writing code.