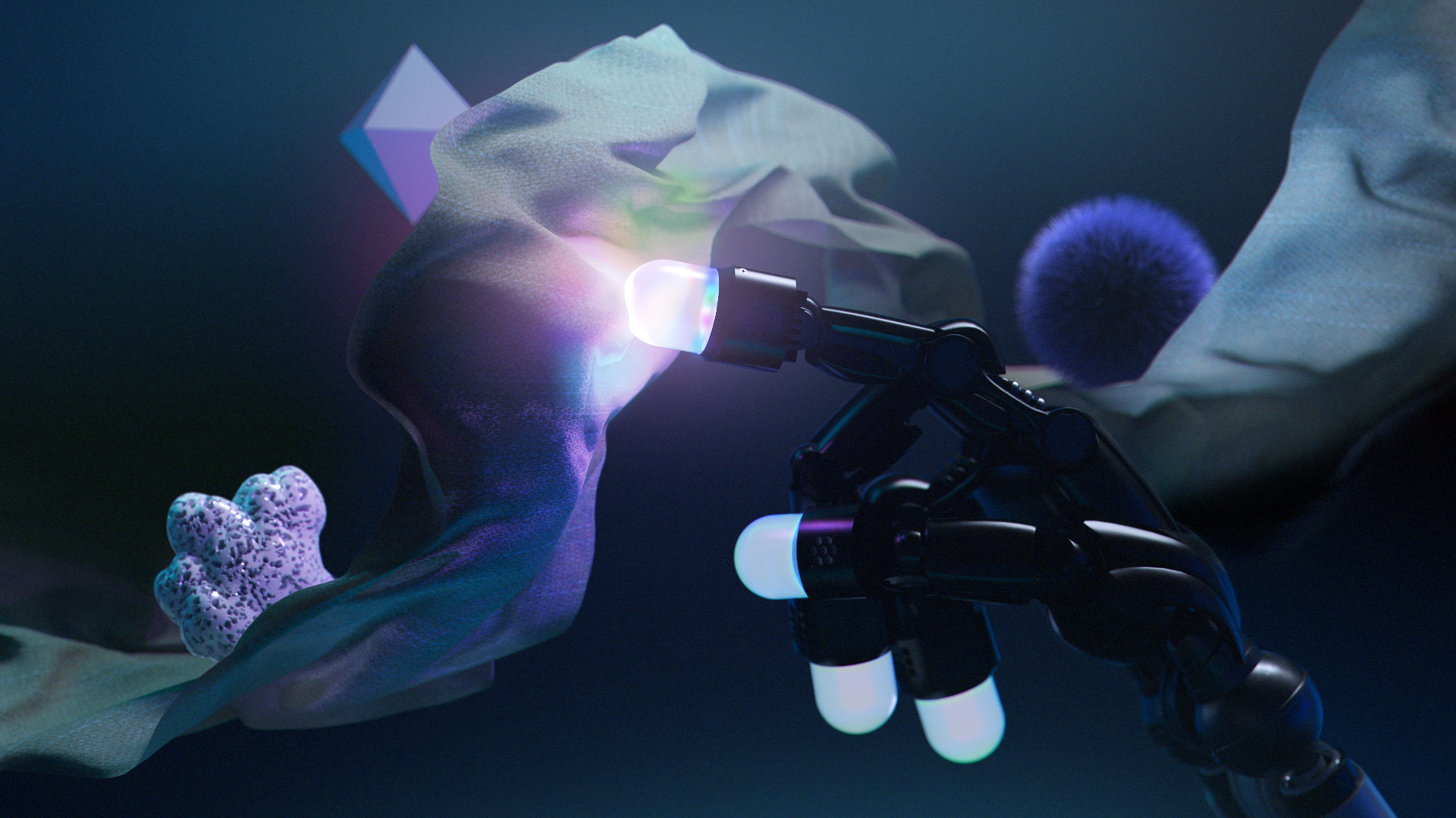

Meta's new robotic fingertip mimics "human-level multimodal sensing capabilities"

Meta FAIR has developed three new technologies to give robots better touch capabilities. The company plans to make these technologies available to researchers in 2024.

The key innovation is Meta Digit 360, an artificial fingertip packed with sensors and offering more than 18 sensing functions. According to Meta, it can detect forces as small as one millinewton and uses a special optical system with more than 8 million measurement points. The company says the sensor "significantly surpasses previous sensors, detecting miniature changes in spatial details."

According to Meta, the Digit 360 processes information directly through an "on-device AI accelerator," similar to the way the peripheral nervous system works in humans and animals.

New universal sensor technology

Meta also introduced Sparsh, which it calls the first universal encoder for vision-based tactile sensors. The system was trained on more than 460,000 tactile images and uses self-supervised learning, which lets it work across different sensor types without sensor-specific adjustments.

According to Meta, Sparsh performs 95 percent better than task-specific and sensor-specific models in the company's own testing. Meta designed it as a pre-trained foundation for future robotics development.

Video: Meta

The third component, Meta Digit Plexus, is a standardized platform that integrates various tactile sensors in robotic hands. The system collects and analyzes data through a single cable connection.

Moving from the lab to the research community

Meta has partnered with two companies to bring these technologies to market. GelSight Inc. will manufacture and sell Digit 360, while Wonik Robotics is developing a new robotic hand with built-in Digit Plexus technology.

Both products are planned for release in 2024, and researchers can now apply for early access. Meta is also releasing the code and designs for all three technologies to the research community.

Meta's Fundamental AI Research (FAIR) team says it developed these technologies to advance AI systems that can physically interact with their environment. Applications could range from healthcare to manufacturing, where machines need to perform precise movements. The technology could also allow people to feel virtual objects, such as touching a jacket in an online store.

- Get the latest AI news from The Decoder.