Meta's next-gen MTIA chip triples AI performance, reducing reliance on Nvidia

Key Points

- Meta announces new details on the next generation of its proprietary MTIA AI chip, which is up to three times more performant than its predecessor and is already being used in Meta's advertising and ranking processes.

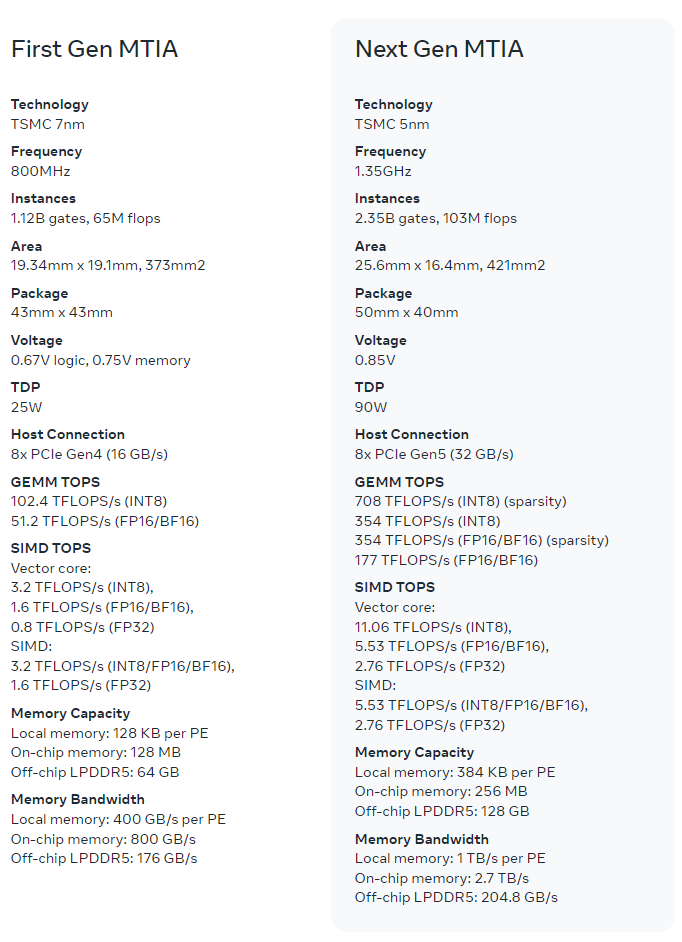

- The new chip doubles the compute and memory bandwidth of the previous version and focuses on the optimal balance of compute, bandwidth and memory capacity for Meta's specific workloads. By controlling the entire stack, Meta achieves higher efficiency compared to commercial GPUs.

- Despite advances in its own AI chips, Meta remains one of Nvidia's largest customers, with plans to deploy a total of 600,000 graphics cards by the end of the year, including 340,000 Nvidia H100 GPUs.

Meta has unveiled details of the next generation of its in-house AI chip MTIA. According to Meta, the new chip is up to three times more powerful than its predecessor and is already being used in Meta's advertising and ranking processes.

MTIA stands for Meta Training and Inference Accelerator, the chip family that Meta is developing specifically to run inference for its AI workloads.

According to the company, the new version of MTIA doubles the compute and memory bandwidth of the previous version while remaining closely tailored to Meta's workloads.

The architecture focuses on striking the right balance between compute, memory bandwidth and memory capacity for Meta's ad ranking and recommendation models.

In addition, several programs are underway to expand the scope of MTIA, including support for GenAI workloads.

Meta also optimized the software stack and developed Triton-MTIA, a low-level compiler that Meta says generates "high-performance code" for the MTIA hardware.

Meta says initial results show that the new chip has already tripled the performance of four key models compared with the first-generation chip. Because it controls the entire stack, Meta says it can achieve higher efficiency than with commercially available GPUs.

The new chip can reduce Meta's dependence on Nvidia graphics cards in some areas but cannot replace them, since those cards are used not only to run AI models but also to train them.

Meta CEO Mark Zuckerberg recently announced that 340,000 Nvidia H100 GPUs will be in use by the end of the year, for a total of about 600,000 graphics cards. This makes Meta one of Nvidia's largest customers.

Meta isn't the only company investing in its own AI chips. Google just unveiled a new version, TPU v5p, that offers more than twice as many FLOPS and three times as much high-speed memory as the previous generation. Google says the chip is a general-purpose AI processor that supports training, fine-tuning, and inference.
