Meta has introduced a new AI model, the Video Joint Embedding Predictive Architecture (V-JEPA). It is part of Meta's research into the general JEPA architecture, which seeks to improve AI's ability to understand and interact with the physical world.

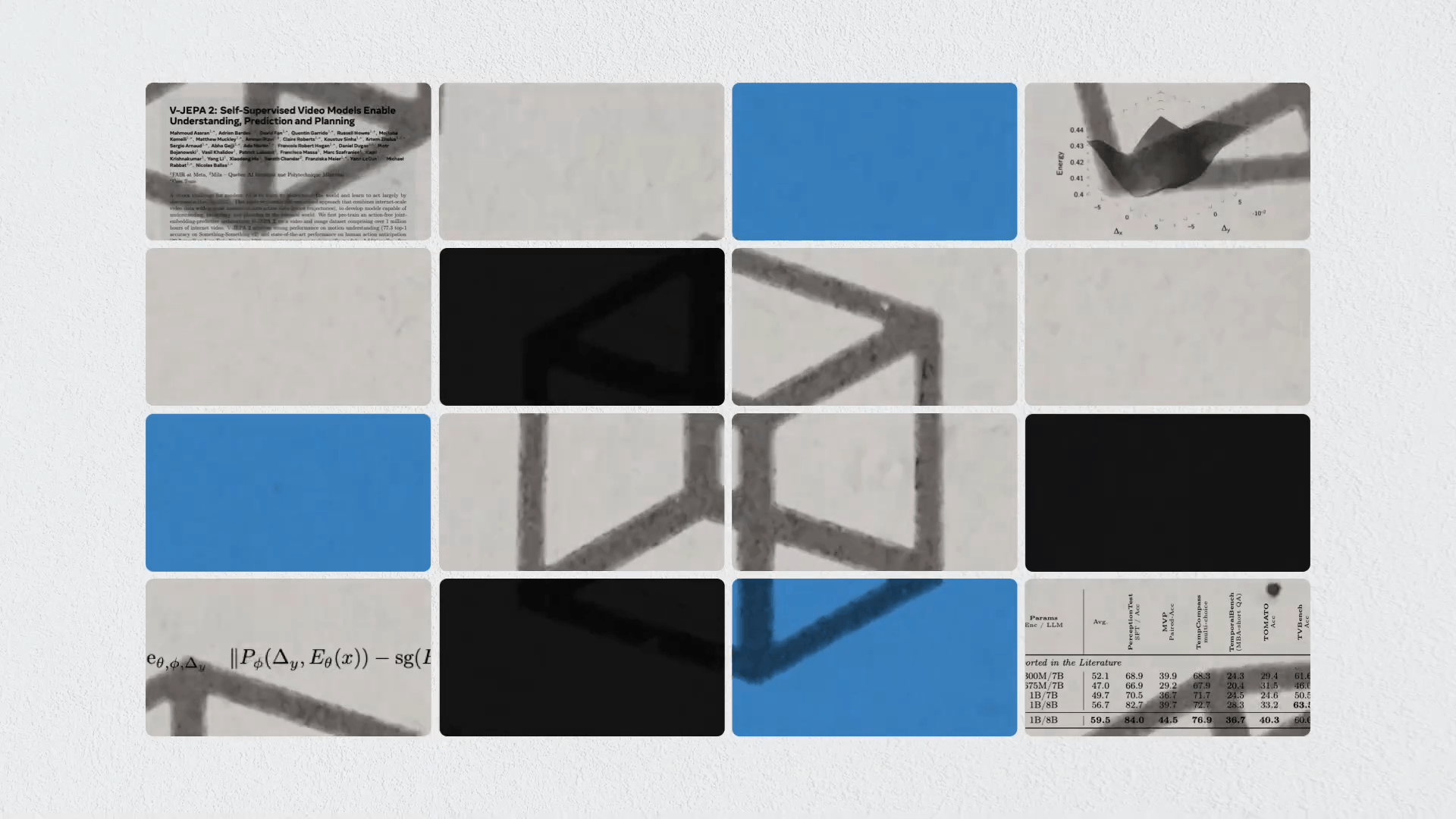

Developed by Yann LeCun, Meta's VP & Chief AI Scientist, and his team, V-JEPA is designed to predict and understand complex interactions within videos, much like an infant learns about gravity by watching objects fall. The model fills in missing or obscured parts of a video not by reconstructing each pixel, but by predicting an abstract representation of the scene, which Meta says is similar to how we process images in our minds.

The idea behind V-JEPA is that predictions should occur in a higher-level conceptual space, so that the model can focus on what matters for understanding a scene and completing a task without getting bogged down in irrelevant details. For example, when recognizing a tree in a video, the model doesn't need to consider the movement of each leaf.
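To make the difference concrete, here is a minimal Python sketch. It is not Meta's code: `encode` is a hypothetical stand-in for a learned encoder (it simply averages a patch), and the patch values are invented. It compares a pixel-level error with an error measured in representation space for two patches that show the same "tree" with the leaves in different positions.

```python
def encode(patch):
    """Hypothetical encoder: summarize a patch by its average brightness."""
    return [sum(patch) / len(patch)]


def mse(a, b):
    """Mean squared error between two equally long lists of numbers."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


# Invented example data: the same tree, with the leaves shifted by one pixel.
target_patch = [0.25, 0.75, 0.25, 0.75]
predicted_patch = [0.75, 0.25, 0.75, 0.25]

# Pixel-space objective: every shifted leaf counts as an error.
pixel_loss = mse(predicted_patch, target_patch)

# Representation-space objective: only the abstract summary has to match.
latent_loss = mse(encode(predicted_patch), encode(target_patch))

print(pixel_loss)   # 0.25
print(latent_loss)  # 0.0
```

In this toy setup the pixel loss penalizes the leaf movement, while the representation loss is zero because both patches map to the same summary. A real encoder is learned rather than hand-written, but the objective is compared in the same way.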


The model's training involves a masking method that hides significant portions of a video, forcing V-JEPA to learn about the scene's dynamics by predicting what's happening in space and time. This masking isn't random; it's carefully designed so that the model cannot succeed through simple guesses and instead has to develop an understanding of how objects interact. The model was trained on 2 million videos.
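The following Python sketch illustrates why structured masking is harder to shortcut than random masking. It rests on an assumption that the article does not spell out: that the mask hides the same spatial block in every frame. All function names and grid sizes are hypothetical. The `leak_fraction` helper measures how many hidden patches could be guessed simply by looking at the same location in another frame.

```python
import random


def tube_block_mask(num_frames, top, left, block_h, block_w):
    """Hide one spatial block of patches in every frame (assumed scheme)."""
    return {
        (t, y, x)
        for t in range(num_frames)
        for y in range(top, top + block_h)
        for x in range(left, left + block_w)
    }


def random_mask(num_frames, height, width, count, seed=0):
    """Hide `count` patches chosen uniformly at random, for comparison."""
    rng = random.Random(seed)
    patches = [
        (t, y, x)
        for t in range(num_frames)
        for y in range(height)
        for x in range(width)
    ]
    return set(rng.sample(patches, count))


def leak_fraction(masked, num_frames):
    """Share of hidden patches whose location is visible in some other frame."""
    leaked = sum(
        1
        for (t, y, x) in masked
        if any((s, y, x) not in masked for s in range(num_frames))
    )
    return leaked / len(masked)


# Hypothetical clip: 8 frames, each split into a 4 x 4 grid of patches.
FRAMES, HEIGHT, WIDTH = 8, 4, 4

tube = tube_block_mask(FRAMES, top=1, left=0, block_h=3, block_w=3)
scattered = random_mask(FRAMES, HEIGHT, WIDTH, count=len(tube))

print(len(tube) / (FRAMES * HEIGHT * WIDTH))  # 0.5625 of the clip is hidden
print(leak_fraction(tube, FRAMES))            # 0.0
print(leak_fraction(scattered, FRAMES))       # most hidden patches leak
```

With the block mask, no hidden location can be copied from a neighboring frame, so the only way to fill it in is to reason about the scene. With the same number of randomly scattered patches, nearly every hidden patch is visible somewhere else in time.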

One of the model's strengths is its ability to adapt to new tasks without retraining the core model. Traditionally, AI models have had to be fine-tuned, meaning that all of the model's parameters are updated for one task, which leaves the resulting model specialized and inefficient for others. V-JEPA, on the other hand, can be pre-trained once and kept frozen. Adapting it to a different task, such as action classification or object interaction detection, only requires training a small task-specific layer on top.
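As a rough illustration of this "pre-train once, add a small layer" idea, the Python sketch below keeps a backbone fixed and trains two tiny classifiers on its output. Everything in it is an assumption made for the example: the hand-written `FROZEN_WEIGHTS` stand in for a pre-trained encoder, the four "clips" are invented brightness sequences, and the two tasks (motion direction and overall brightness) are toy stand-ins for real downstream tasks.

```python
import math

# Hypothetical frozen backbone: these values are never updated below.
FROZEN_WEIGHTS = (
    (0.25, 0.25, 0.25, 0.25),  # average brightness of the clip
    (-1.0, 0.0, 0.0, 1.0),     # change from the first to the last frame
)
FROZEN_BIAS = (-0.5, 0.0)      # centers the brightness feature


def encode(clip):
    """Map a clip (four brightness values) to two fixed features."""
    return [
        sum(w * x for w, x in zip(row, clip)) + b
        for row, b in zip(FROZEN_WEIGHTS, FROZEN_BIAS)
    ]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_probe(features, labels, steps=200, lr=1.0):
    """Train only a small logistic layer with full-batch gradient descent."""
    weights = [0.0] * len(features[0])
    bias = 0.0
    n = len(features)
    for _ in range(steps):
        errors = [
            sigmoid(sum(w * f for w, f in zip(weights, feat)) + bias) - y
            for feat, y in zip(features, labels)
        ]
        for j in range(len(weights)):
            weights[j] -= lr * sum(e * feat[j] for e, feat in zip(errors, features)) / n
        bias -= lr * sum(errors) / n
    return weights, bias


def predict(probe, feat):
    weights, bias = probe
    return int(sum(w * f for w, f in zip(weights, feat)) + bias > 0)


# Invented clips: two get brighter over time, two get darker.
clips = [
    [0.0, 0.25, 0.5, 0.75],
    [0.25, 0.5, 0.75, 1.0],
    [0.75, 0.5, 0.25, 0.0],
    [1.0, 0.75, 0.5, 0.25],
]
features = [encode(clip) for clip in clips]  # computed once, reused per task

rising_labels = [1, 1, 0, 0]  # task A: is the clip getting brighter?
bright_labels = [0, 1, 0, 1]  # task B: is the clip bright on average?

rising_probe = train_probe(features, rising_labels)
bright_probe = train_probe(features, bright_labels)

print([predict(rising_probe, f) for f in features])  # [1, 1, 0, 0]
print([predict(bright_probe, f) for f in features])  # [0, 1, 0, 1]
```

Both tasks share the same features, and each probe has only three trainable numbers (two weights and a bias). Adding another task means training another small probe while the backbone stays untouched.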

Looking to the future, Meta's team sees potential in extending V-JEPA's capabilities to audio and improving its ability to plan and predict over longer time spans. While it currently excels at short-term action recognition, longer-term prediction is an area of interest for further research.

LeCun's JEPA has broader ambitions

LeCun introduced the JEPA architecture in 2022 to address the challenge of learning from complex data and making predictions at different levels of abstraction. In 2023, his team introduced the first model, I-JEPA, which performed impressively on ImageNet with minimal labeled data.

Beyond its current capabilities, the Joint Embedding Predictive Architecture (JEPA) is meant to enable comprehensive world models that could underpin autonomous artificial intelligence. LeCun envisions stacking JEPA models hierarchically, so that higher levels form more abstract representations of the predictions made at lower levels. The ultimate goal is for these models to make spatial and temporal predictions about future events, with video training playing an important role.

The code is available on GitHub.