Microsoft unveils AI hallucination 'correction' tool

Microsoft has announced a new feature for Azure AI Content Safety that detects and corrects inconsistencies between AI-generated text and its source documents. With this tool, the company aims to increase confidence in generative AI technologies.

The correction feature within Azure AI Content Safety's Groundedness Detection is now available in preview. Groundedness Detection compares AI output to source documents to identify unsupported or hallucinated content. Google offers a similar feature in Vertex AI with Google Search Grounding.

No absolute improvement, but better alignment with underlying documents

Microsoft emphasizes that while the tool improves the reliability of AI output, it doesn't guarantee perfect accuracy. Instead, it improves consistency between generated content and source materials.

To use the feature, generative AI applications must link to base documents used for summarization or RAG question-answering tasks.

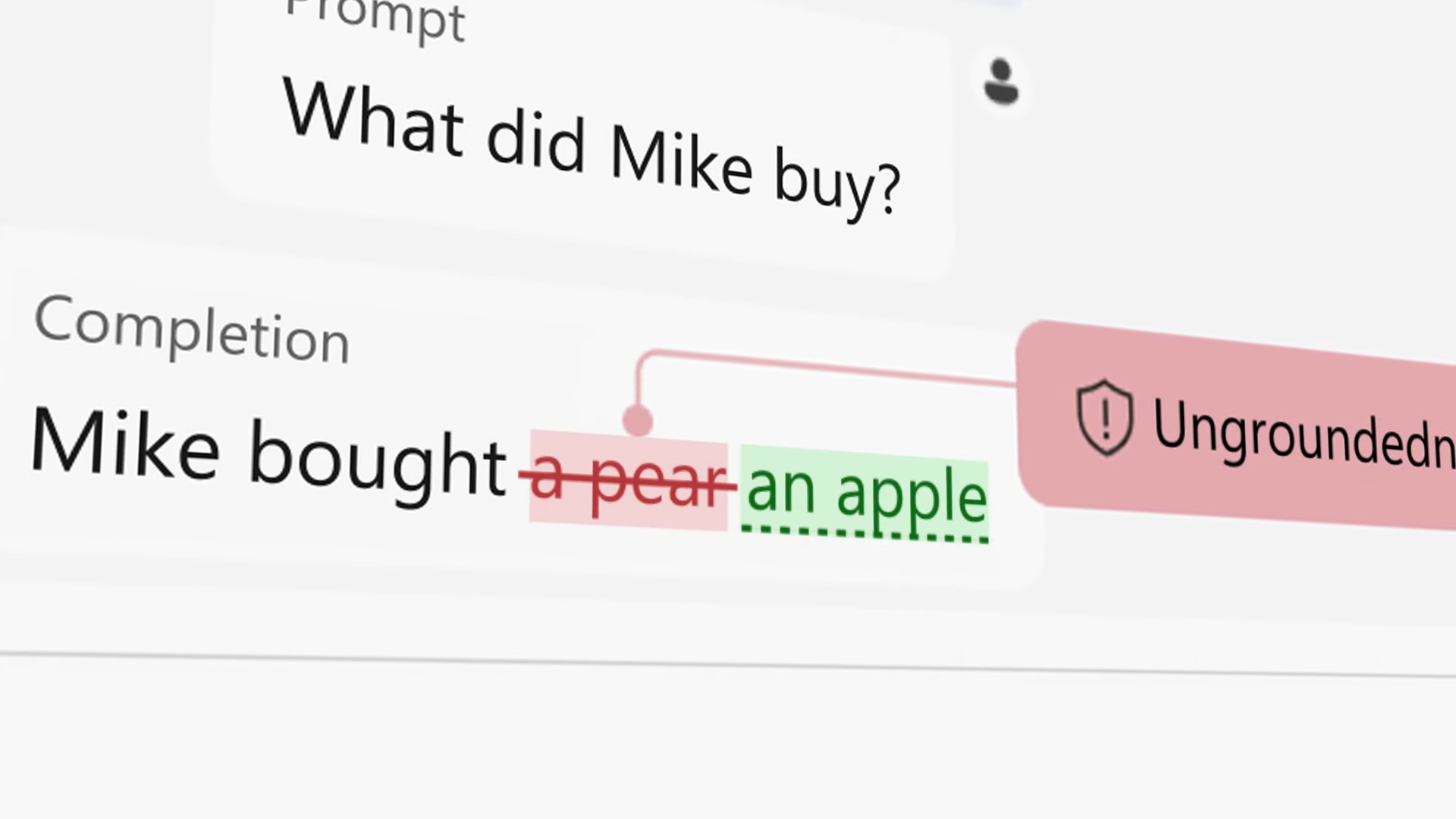

When the system detects an insufficiently supported sentence, it triggers a new request to a smaller AI model for correction. This model evaluates the sentence against the base document.

If a sentence contains no relevant information from the base document, it can be filtered out completely. For sentences with some related content, the model rewrites them to match the source material.
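The keep, rewrite, or filter decision described above can be illustrated with a toy sketch. This is not Microsoft's implementation: the word-overlap score, the thresholds, and the function names are all assumptions made for illustration, and the "rewrite" step simply substitutes the closest source sentence where the real feature calls a language model.

```python
import re

def sentences(text):
    """Split text into sentences on ., ! or ? followed by whitespace."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]

def words(sentence):
    """Lowercased word set, used only for the toy overlap score."""
    return set(re.findall(r"[a-z0-9]+", sentence.lower()))

def support(sentence, source_sentence):
    """Fraction of the sentence's words that also occur in the source sentence."""
    w = words(sentence)
    return len(w & words(source_sentence)) / len(w) if w else 0.0

def correct(output, source, keep_at=0.8, drop_below=0.3):
    """Keep supported sentences, rewrite partly supported ones, drop the rest.

    The thresholds are hypothetical values chosen for this example.
    """
    source_sents = sentences(source)
    if not source_sents:
        return ""  # nothing to ground against
    result = []
    for sent in sentences(output):
        best = max(source_sents, key=lambda s: support(sent, s))
        score = support(sent, best)
        if score >= keep_at:
            result.append(sent)   # sufficiently grounded: keep as is
        elif score >= drop_below:
            result.append(best)   # stand-in "rewrite": use the closest source sentence
        # otherwise nothing in the source relates to it, so it is filtered out
    return " ".join(result)

source = "The clinic opens at 9 am on weekdays. Appointments last 30 minutes."
output = ("The clinic opens at 9 am on weekdays. Appointments last 45 minutes. "
          "Parking is free for visitors.")
print(correct(output, source))
```

In this example the first sentence is kept, the second is replaced with the source's wording ("30 minutes"), and the third is dropped because the source says nothing about parking.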

Initial data shows that the method is effective in detecting and correcting inconsistencies between AI-generated text and the underlying sources. In the best cases, only 0.1 to 1 percent of these specific issues remain after correction. However, this doesn't mean that 99 percent of all generated content is error-free: the process targets only mismatches with the source documents and does not catch other kinds of errors, so it does not improve overall accuracy.
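A short calculation shows why a low residual rate for groundedness issues does not translate into the same overall error rate. All counts below are made-up illustrative numbers, not figures from Microsoft; only the 1 percent residual rate echoes the range quoted above.

```python
def errors_after_correction(ungrounded, other_errors, residual_rate):
    """Errors left after correction: only the ungrounded ones shrink."""
    return ungrounded * residual_rate + other_errors

# Hypothetical batch of 1,000 generated sentences.
total = 1000
ungrounded = 40     # assumed: sentences not supported by the source documents
other_errors = 25   # assumed: errors the check cannot see, e.g. mistakes in the source itself

left = errors_after_correction(ungrounded, other_errors, residual_rate=0.01)
print(f"errors remaining: {left:.1f} of {total} sentences")
print(f"share of sentences still wrong: {left / total:.2%}")
```

Under these assumptions almost all of the remaining errors are of the kind the correction step never targets, so the overall error rate stays well above the residual rate for groundedness issues.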

OpenAI recently demonstrated a similar process in which a smaller model critiques the output of a larger model.

LLM bullshit makes it hard for Microsoft to sell AI

Notably, in the "correction" announcement, Microsoft acknowledges that hallucinations have hindered the adoption of generative AI in critical areas such as medicine and prevented the wider, more public use of its copilots. The company hopes that this new correction feature will enable these applications.

Critics might argue that using generative AI to fix generative AI flaws doesn't address the underlying problem and could create a false sense of safety. Microsoft's AI search has previously generated incorrect information about elections and individuals.
