Microsoft's HoloAssist dataset brings AI assistants closer to our daily lives

Key Points

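- Microsoft has unveiled HoloAssist, which its researchers describe as the first dataset to pair egocentric video of people performing physical tasks with instructions from a human tutor. It is meant to support the development of AI assistants that help people with everyday tasks.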

- The dataset includes more than 160 hours of video and seven different sensor streams, recorded as 222 participants performed 20 different tasks under the guidance of a human tutor.

- The dataset is available to the scientific community and is intended to contribute to the development of AI systems that can better assist people in everyday life. The full code and training materials are available on GitHub.

Microsoft has unveiled a new dataset to help build interactive AI assistants for everyday tasks.

Extensive dataset of egocentric videos

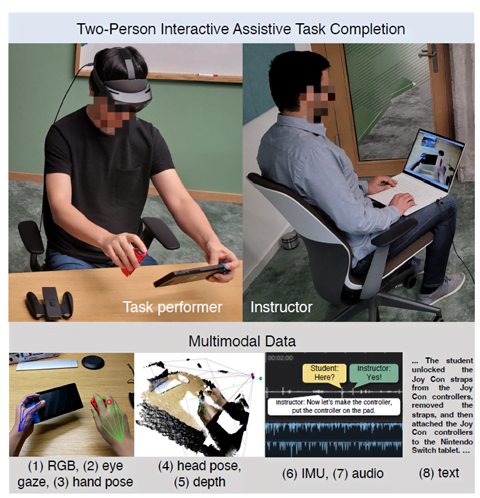

According to Microsoft researchers Xin Wang and Neel Joshi, the dataset, called "HoloAssist," is the first of its kind to include egocentric videos of humans performing physical tasks, as well as associated instructions from a human tutor.

In total, the dataset includes more than 160 hours of video recordings with seven different sensor streams to help understand human intentions and actions, according to the blog post. Data includes eye movement, hand and head position, and voice.

Assembling furniture, operating scanners

The 222 participants who helped collect the data performed 20 different tasks while wearing the HoloLens 2 mixed reality headset and receiving instructions from a tutor. The tasks ranged from assembling simple furniture to operating coffee makers or laser scanners.

According to Microsoft researchers, this scenario is ideal for developing AI assistants that can act proactively and give precise instructions at the right time. In the future, this could lead to the development of AI systems that can better assist people in their daily lives.

Existing AI assistants are still limited to the digital world and cannot adequately assist humans in real-world tasks because they lack the necessary experience and training data, the researchers said.

"The key challenge is that current state-of-the-art AI assistants lack firsthand experience in the physical world. Consequently, they cannot perceive the state of the real world and actively intervene when necessary. This limitation stems from a lack of training on the specific data required for perception, reasoning, and modeling in such scenarios. In terms of AI development, there’s a saying that 'data is king.' This challenge is no exception."

Microsoft Research Blog

HoloAssist aims to solve this problem. The dataset is available to the scientific community for further experimentation: Microsoft has uploaded all the code and more than a terabyte of training materials to GitHub.

Meta has also released first-person video data. In 2021, it provided more than 2,200 hours of footage in a dataset called Ego4D, which is likewise intended for developing AI assistance systems that work with AR devices. In 2022, seven hours of first-person video followed in the "Project Aria Pilot Dataset." The videos were shot with an early prototype of the sleek AR glasses that Meta hopes to one day build. The prototype has cameras for filming, but no displays.
