Microsoft's small and efficient LLM Phi-3 beats Meta's Llama 3 and free ChatGPT in benchmarks

Update from April 24th, 2924:

Microsoft has officially launched the Phi-3 family. Phi-3-mini with 3.8 billion parameters is now available on Microsoft Azure AI Studio, Hugging Face and Ollama, including a variant with up to 128,000 token context length.

The models are instruction-optimized and use the ONNX runtime with support for Windows DirectML and NVIDIA GPUs. Phi-3-small (7 billion parameters) and Phi-3-medium (14 billion) will follow in the coming weeks.

According to Microsoft, the resource-efficient Phi-3 models are well suited for scenarios with limited computing power, where low latency is required, or where keeping costs down is critical. The models also make fine-tuning easier compared to larger AI models.

Original article from April 23, 2024:

Meta's Llama 3 has just set new standards for open-source models, but Microsoft's Phi 3 is poised to surpass them - at least on paper. Microsoft is focusing on a key feature of Phi: data quality.

Microsoft Research has developed a new, compact language model called Phi 3 that, according to internal tests, matches the performance of much larger models such as Mixtral 8x7B and GPT-3.5. The context length is 128K.

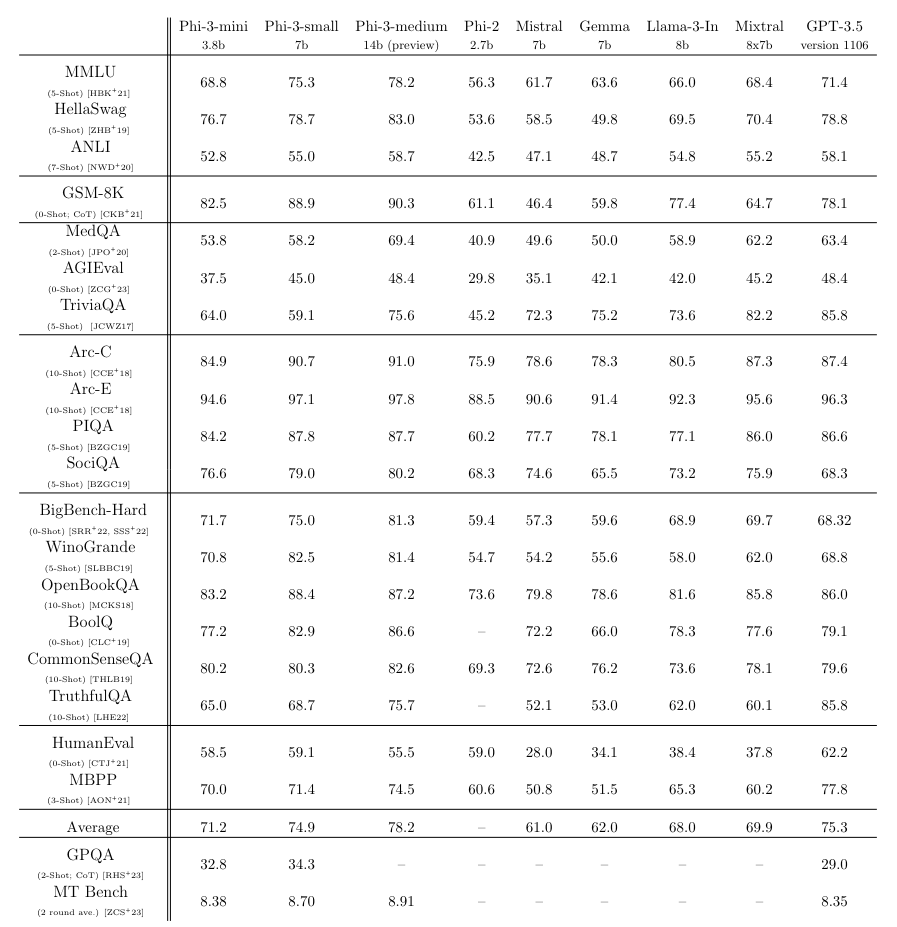

The Phi-3 mini model, with only 3.8 billion parameters, achieves 69 percent on the MMLU language comprehension benchmark and 8.38 points on the MT benchmark, according to Microsoft.

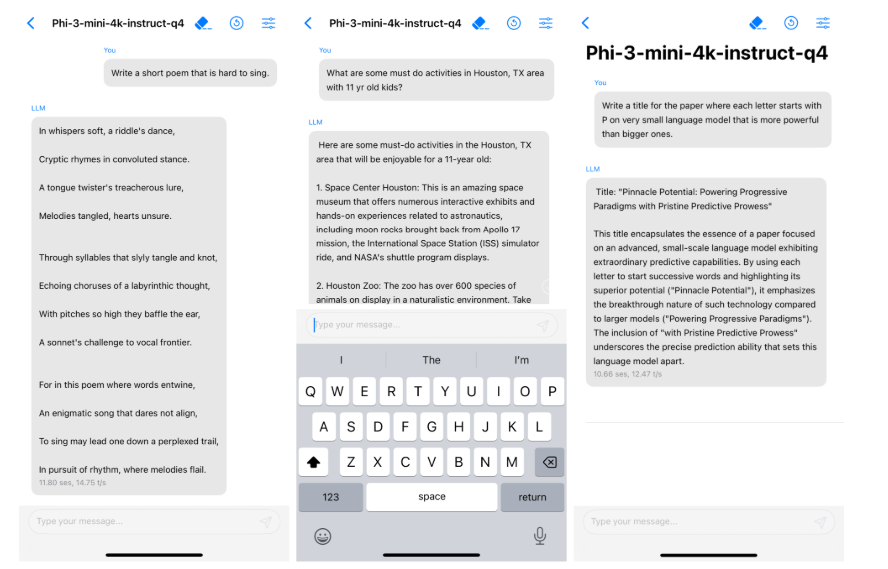

Thanks to its small size, Phi 3 can run locally on a standard smartphone with as little as 1.8 GB of memory, quantized to 4 bits, and achieving more than 12 tokens per second on an iPhone 14 with an A16 chip.

"It’s like fitting a supercomputer in a flip phone, but instead of breaking the phone, it just breaks the internet with its tiny, yet mighty, linguistic prowess," the developers jokingly had the model answer when asked how an AI model at the level of ChatGPT could run on a smartphone.

Get the most out of your training data with high-quality training data

According to Microsoft, the secret to Phi 3's performance lies solely in the training data set. This consists of heavily "education level"-filtered web and synthetic LLM-generated data and builds on the training method used in its predecessors, Phi 2 and Phi 1.

Microsoft emphasizes that the performance was achieved solely by optimizing the training data set. Instead of "wasting" Web data with information such as sports scores, the data set was brought closer to the "data optimum" for a compact model by focusing on knowledge and reasoning skills.

In the first phase of pre-training, mainly web data is used to let the model develop general knowledge and language understanding. In the second training phase, highly filtered, high-quality web data is combined with selected synthetic data to optimize the model's performance in specific areas such as logic and niche applications.

With the Phi models, Microsoft aims to enable high-quality but much more efficient and cost-effective AI models. Microsoft in particular needs cost-effective models to scale AI across its Windows and Office products and search to turn generative AI into a business model.

Phi 3 beats Llama 3 in many benchmarks

Phi-3-small with 7 billion parameters and Phi-3-medium with 14 billion parameters, both trained with 4.8 trillion tokens, perform similarly to Phi-3-mini in benchmarks with respect to same-class models.

They achieve 75 and 78 percent in the MMLU benchmark and 8.7 and 8.9 points in the MT benchmark. This puts them not far behind much larger models such as Meta's recently released 70-billion-parameter Llama 3. And Phi models outperform models in the same class in most cases (Phi 3 7b vs. Llama 3 8b).

However, perceived performance in applications and benchmark results do not necessarily match. It remains to be seen to what extent the model will be adopted by the open-source community.

Microsoft cites the Phi-3-mini's lower capacity for factual knowledge compared to larger models, e.g. in the TriviaQA benchmark, as a weakness. However, this can be compensated by the integration of a search engine. In addition, the training is mainly limited to the English language.

In terms of safety, Microsoft says it has taken a multi-step approach with alignment training, red teaming, automated testing, and independent reviews. This has significantly reduced the number of potentially harmful responses, the company says.

According to Microsoft, Phi 3 uses a similar block structure and the same tokenizer as Meta's Llama model to allow the open-source community to benefit as much as possible from Phi 3. This means that all packages developed for the Llama 2 model family can be directly adapted to Phi-3-mini.

AI News Without the Hype – Curated by Humans

As a THE DECODER subscriber, you get ad-free reading, our weekly AI newsletter, the exclusive "AI Radar" Frontier Report 6× per year, access to comments, and our complete archive.

Subscribe nowAI news without the hype

Curated by humans.

- Over 20 percent launch discount.

- Read without distractions – no Google ads.

- Access to comments and community discussions.

- Weekly AI newsletter.

- 6 times a year: “AI Radar” – deep dives on key AI topics.

- Up to 25 % off on KI Pro online events.

- Access to our full ten-year archive.

- Get the latest AI news from The Decoder.